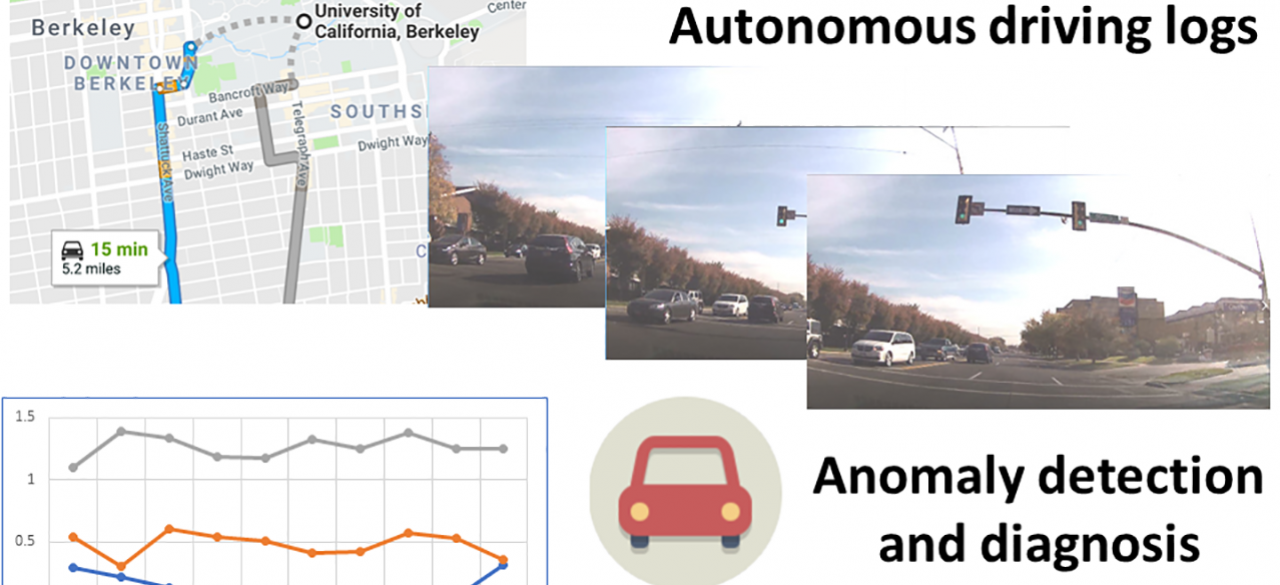

Detecting and Diagnosing Abnormal Behaviors from Autonomous Driving Logs

ABOUT THE PROJECT

At a glance

There has been tremendous interest in developing autonomous driving systems to protect people from disastrous accidents caused by driver fatigue, drunk driving, or the mistakes of inexperienced drivers. At the same time, such application scenarios impose strong safety and security requirements on autonomous driving systems. It is thus desirable for the system to have a second, safety-ensuring sub-module alongside the main driving functionality. In this proposal, we plan to develop techniques to detect and diagnose anomalies in system logs to provide a further security guarantee for autonomous driving systems. In particular, we consider the situation where a car fully manages itself without any human intervention. Thus, this proposal augments existing work, which analyzes the divergence between car predictions and human operations. We will leverage the existing infrastructure and data in the Berkeley Deep Drive center [4], collect more log data, and deploy our proposed system in an autonomous car for evaluation in real-world settings. Starting with a broad categorization of autonomous driving system logs (Section 1), we first propose an effective logging practice (Section 2), and then propose solutions that use the logged data for anomaly detection (Section 3) and diagnosis (Section 4).

The PI and key personnel have extensive experience in adversarial machine learning and system log analysis. The PI’s previous research includes black-box attacks [1,5,14] and adversarial examples against different machine learning models [12,13,17]. The key personnel also have experience in traditional computer system log analysis [6–9], and have recently achieved significant improvements in system log anomaly detection through deep learning [9].

1 Logs in autonomous driving systems

An autonomous driving system relies on multiple sensor sources to provide streaming inputs. Based on these inputs, an intelligent engine makes predictive decisions to control the vehicle. The system can thus log both the input events and the predictions. Furthermore, an autonomous driving system typically has an operating system and multiple other controllers, which constantly produce system event logs. In particular, we consider three broad classes of log events.

Input event logs. We consider input events to be the inputs from sensors. For example, in autonomous driving systems, there are cameras that continuously record the surrounding environment while driving, GPS systems that track the car's location in real time, and LiDAR and radar that perceive the locations and distances of other objects relative to the car.

Prediction event logs. An autonomous driving system constantly makes decisions to navigate a car based on real-time environmental situations. Example decisions include how to control the direction; what speed should be maintained; and whether to change lane or not. Such predictive decisions should be recorded for online anomaly detection and subsequent analysis. We call such recorded decisions prediction event logs.

Traditional system logs. Note that an autonomous driving system produces system logs like any other system. For example, each BDD vehicle platform currently runs Ubuntu 16.04 as its operating system [4], so system logs such as authentication logs, boot information, and package installation logs would be valuable data sources to analyze. There are also other types of traditional vehicle sensor logs, such as those from fuel monitors or tire pressure monitors.

2 Effective logging for diagnosing autonomous driving systems

System logging practices have been studied for years [18, 19]. An effective logging practice needs to consider both the level of detail and the log size. In autonomous driving, the system needs to log information such as high-resolution video from the cameras, which requires much more storage than other types of log data. A good logging practice therefore becomes even more crucial. In this proposal, we plan to explore the options for logging different types of events. Our design is motivated by two goals: (1) the logged information should be sufficient to detect various abnormal events in the autonomous driving vehicle; and (2) the logged information should be sufficient to diagnose the root cause of an anomaly.

Historical logs are queried to make online decisions, and for offline anomaly detection and diagnosis. Since we cannot store logs indefinitely, we need to make a trade-off between query time and log size. Intuitively, more recent events are more relevant to the current system status, and thus should be stored in more detail for fast querying. In contrast, older events are less relevant to immediate decisions. As a result, longer query times can be tolerated for them, and fewer details are needed. Log size can therefore be significantly reduced for older events. In addition to exploring the most effective compression method for each type of log data, a more interesting direction is to use machine learning techniques to remove historical logs that are less interesting. Specifically, we will design algorithms to learn critical points of the time-series log data, and keep detailed information only around those points. For example, we could learn when the system experiences a novel setting, and keep detailed logs around that period while reducing the others.
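The age-based trade-off described above can be sketched as a simple retention policy. This is only an illustration under stated assumptions: the `LogRecord` type, the `thin_logs` function, and all parameter values are hypothetical, and the critical points are passed in by hand here, whereas the proposal intends to learn them.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class LogRecord:
    timestamp: float  # seconds since the start of the drive (assumed unit)
    payload: str


def thin_logs(
    records: Sequence[LogRecord],
    now: float,
    recent_window: float = 60.0,
    keep_every: int = 10,
    critical_times: Sequence[float] = (),
    critical_radius: float = 5.0,
) -> List[LogRecord]:
    """Keep full detail for recent records and records near a critical point;
    keep only every `keep_every`-th record among the remaining older ones."""
    kept = []
    older_seen = 0
    for rec in records:
        is_recent = (now - rec.timestamp) <= recent_window
        near_critical = any(
            abs(rec.timestamp - t) <= critical_radius for t in critical_times
        )
        if is_recent or near_critical:
            kept.append(rec)
        else:
            # Older, uninteresting record: subsample.
            if older_seen % keep_every == 0:
                kept.append(rec)
            older_seen += 1
    return kept


# Hypothetical usage: one record per second for 200 seconds,
# with a critical point (e.g. a novel setting) at t = 50.
records = [LogRecord(float(t), f"event-{t}") for t in range(200)]
kept = thin_logs(records, now=200.0, critical_times=[50.0])
```

In a real system the subsampling step would likely be replaced by a per-type compression scheme (for example, lower video resolution), but the structure of the decision stays the same.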

3 Anomaly detection in autonomous driving logs

The first use case of autonomous driving logs is anomaly detection, that is, detecting whether the autonomous vehicle is exhibiting unusual behaviors that may have severe security implications.

Adversarial example detection using multiple types of inputs. Adversarial examples are carefully designed input data samples that can mislead machine learning models into generating incorrect outputs [10]. Such examples are known to be hard to detect [2]. To the best of our knowledge, all previous work on adversarial example detection focuses on analyzing a single type of input data source [3,15]. In autonomous cars, we propose to leverage multiple data sources collected at the same time to detect possible adversarial examples. In addition to the existing operation prediction mechanism, we will build multiple machine learning models that make predictions using different combinations of input data sources. If the predictions of these models are not consistent, an adversarial input may exist.
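The consistency check across models could look roughly like the sketch below. Everything here is an assumption for illustration: each model is represented as a plain function from a dictionary of sensor readings to a steering value, the disagreement measure is the spread between the largest and smallest prediction, and the threshold is arbitrary.

```python
from typing import Callable, Dict, Sequence

SensorInputs = Dict[str, float]
Model = Callable[[SensorInputs], float]


def prediction_spread(predictions: Sequence[float]) -> float:
    """Disagreement measure: gap between the largest and smallest prediction."""
    return max(predictions) - min(predictions)


def possibly_adversarial(
    inputs: SensorInputs, models: Sequence[Model], threshold: float
) -> bool:
    """Flag the inputs if models built on different sources disagree too much."""
    predictions = [model(inputs) for model in models]
    return prediction_spread(predictions) > threshold


# Hypothetical stand-ins for models trained on different source combinations.
models = [
    lambda x: x["camera"],                      # camera-only model
    lambda x: x["lidar"],                       # LiDAR-only model
    lambda x: 0.5 * (x["camera"] + x["lidar"]), # camera + LiDAR model
]

clean = {"camera": 0.10, "lidar": 0.12}
tampered = {"camera": 0.90, "lidar": 0.12}  # camera input perturbed

clean_flag = possibly_adversarial(clean, models, threshold=0.2)
tampered_flag = possibly_adversarial(tampered, models, threshold=0.2)
```

The spread is the simplest possible disagreement measure; a deployed detector would need a calibrated threshold, since models trained on different sources disagree somewhat even on clean inputs.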

Control sequence anomaly detection. Control sequences are sequences of operational decisions that a self-driving car predicts from input data. We propose two approaches to detect anomalies in such sequences. The first is to check the predictions by comparing them with sensor readings. For example, an anomaly might have occurred if the navigation control sequence does not follow the actual GPS reading series. The second approach is to model such sequences using machine learning techniques. In particular, we assume that a pattern exists in vehicle control sequences. We can thus learn this pattern, and check whether the current prediction is probable given the historical control sequence. We plan to use an LSTM as a baseline to evaluate the feasibility of learning such sequences, and to propose more advanced probabilistic models for effective anomaly detection.
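The first approach, checking control decisions against GPS readings, can be illustrated with a small dead-reckoning sketch. The assumptions are loud ones: controls are taken to be (speed, heading) pairs, positions are in a local planar frame in meters, and the function names and tolerance are hypothetical rather than part of the proposed system.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Control = Tuple[float, float]  # (speed in m/s, heading in radians), assumed format


def dead_reckon(start: Point, controls: Sequence[Control], dt: float) -> List[Point]:
    """Integrate the commanded speed and heading to get the expected path."""
    x, y = start
    path = []
    for speed, heading in controls:
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        path.append((x, y))
    return path


def gps_divergence(
    start: Point, controls: Sequence[Control], gps_fixes: Sequence[Point], dt: float
) -> List[float]:
    """Distance between the expected position and each GPS fix."""
    expected = dead_reckon(start, controls, dt)
    return [math.hypot(ex - gx, ey - gy) for (ex, ey), (gx, gy) in zip(expected, gps_fixes)]


def first_anomaly(divergences: Sequence[float], tolerance: float) -> Optional[int]:
    """Index of the first step whose divergence exceeds the tolerance, if any."""
    for i, d in enumerate(divergences):
        if d > tolerance:
            return i
    return None


# Hypothetical drive: commanded straight ahead at 10 m/s, but GPS drifts sideways.
controls = [(10.0, 0.0)] * 5
gps = [(10.0, 0.0), (20.0, 0.0), (30.0, 4.0), (40.0, 8.0), (50.0, 12.0)]
divergences = gps_divergence((0.0, 0.0), controls, gps, dt=1.0)
anomaly_step = first_anomaly(divergences, tolerance=5.0)
```

Pure dead reckoning accumulates error over time, so a practical check would compare over short windows or re-anchor to GPS periodically.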

Traditional system logs anomaly detection. Traditional system logs produced by the operating system and embedded systems running inside an autonomous car reveal system execution paths. For example, using an identifier to represent a log printing statement (LPS) in system source code, one system execution may go through an LPS sequence of “A A B D S” and generate the corresponding system logs. The sequence patterns resulting from normal executions can be learned and used to detect unusual system behaviors, such as system crashes, that show up as rare LPS sequences. Previously, we achieved effective anomaly detection on traditional computer system logs using LSTM [9]. In this proposal, we plan to explore this direction further. Specifically, inspired by the fact that a code block may contain multiple LPSs that are always executed one after another, we propose to learn a segmental structure [16] from system log sequences, and to use this segmentation information to improve anomaly detection. Moreover, we will incorporate domain knowledge and the new log types brought by autonomous driving systems. We propose to analyze the correlations over time among system logs and other sensor readings. For one thing, such correlation gives us a deeper understanding of the system status. For another, different logs could show anomalies within the same time period. This could be caused by a single shared anomaly, or by cascading failures started by one component failure. Such causal relationships could be inferred through correlation analysis.
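To make the idea of learning normal LPS sequences concrete, here is a minimal sketch that swaps the LSTM for a much simpler bigram count model. It is a stand-in chosen for brevity, not the method of [9]: it learns which LPS identifiers follow each identifier in normal executions, and flags a position when the observed identifier is not among the `top_k` most frequent successors. The function names and `top_k` value are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence


def train_bigram(sequences: Sequence[Sequence[str]]) -> Dict[str, Counter]:
    """Count, for each LPS identifier, which identifiers follow it."""
    counts: Dict[str, Counter] = {}
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts.setdefault(prev, Counter())[nxt] += 1
    return counts


def anomalous_positions(
    seq: Sequence[str], counts: Dict[str, Counter], top_k: int = 2
) -> List[int]:
    """Positions whose identifier is not a top-k successor of the previous one."""
    flagged = []
    for i in range(1, len(seq)):
        successors = counts.get(seq[i - 1], Counter())
        likely = [key for key, _ in successors.most_common(top_k)]
        if seq[i] not in likely:
            flagged.append(i)
    return flagged


# Hypothetical normal executions, using the "A A B D S" pattern from the text.
normal_runs = [list("AABDS"), list("AABDS"), list("ABDS")]
model = train_bigram(normal_runs)

normal_flags = anomalous_positions(list("AABDS"), model)
abnormal_flags = anomalous_positions(list("AABSD"), model)
```

A bigram model only sees one step of history; an LSTM can condition on a longer window, which matters when the same identifier appears in several different execution paths.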

4 Automatic diagnosis under abnormal events

After an abnormal behavior has been detected, it is desirable to find the cause of the anomaly and ultimately resolve it. However, tracing back the reason behind a behavior is typically hard, especially given the opacity of deep neural network models. Given an abnormal output of the decision making model, our goal is to find out why the abnormal decision was made. We plan to study approaches along two directions to achieve this goal and simplify the diagnosis process.

Models with justification. Understanding the decision making procedure of a neural network model is hard. In the first direction, we plan to study how to revise the model so that it not only emits one final prediction, but also produces a justification to support the decision. For example, one possible way to instantiate this idea is to design a neural network that makes a chain of predictions, so that later predictions are justified by the earlier ones in the chain. We call such a chain a decision chain. For such an approach, training can be challenging if the decision chain is not provided in the ground truth. We are interested in exploring reinforcement learning-based approaches for training. We plan to explore the design space of both neural network architectures and the forms of justification to find the best approach.

Decision interpreting models. The second approach is to design a decision interpreting model that interprets the decisions made by the decision making model. Let the decision be d, and let i_1, ..., i_n be the input sources that the decision making model uses to produce d. We can build another model f, which takes d and one input source i_j (for j = 1, ..., n), such that f(d, i_j) produces a score s_j ∈ [0, 1] indicating the likelihood that the input source i_j is the cause of the decision d. We call f the decision interpreting model, and we can use it to understand the influence of each input on the final decision without opening the black box of the decision making process. Note that the decision interpreting model is related to the influence function [11], which has been well studied in the machine learning literature. An additional benefit over the influence function is that the decision interpreting model can also be trained to interpret decisions made by non-neural-network components of the system.
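The interface of the decision interpreting model can be sketched as follows: f is called once per input source and returns a score in [0, 1], and the sources are then ranked by score. Note that `toy_f` below is a hand-written placeholder, not a trained model; it simply scores a source higher when the mean of its (hypothetical) features is close to the decision value. The names, feature encoding, and scoring rule are all assumptions for illustration.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Interpreter = Callable[[float, Sequence[float]], float]


def toy_f(decision: float, source_features: Sequence[float]) -> float:
    """Placeholder for a trained interpreting model: returns a score in (0, 1]
    that is higher when the source's mean feature is close to the decision."""
    mean = sum(source_features) / len(source_features)
    return math.exp(-abs(mean - decision))


def rank_sources(
    decision: float, sources: Dict[str, Sequence[float]], f: Interpreter
) -> List[Tuple[str, float]]:
    """Score every input source with f and sort from most to least likely cause."""
    scores = {name: f(decision, feats) for name, feats in sources.items()}
    for name, score in scores.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {name} is outside [0, 1]: {score}")
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical example: the decision is a braking intensity of 0.8.
sources = {
    "camera": [0.7, 0.9],
    "lidar": [0.1, 0.1],
    "gps": [0.4, 0.4],
}
ranking = rank_sources(0.8, sources, toy_f)
ranked_names = [name for name, _ in ranking]
```

Because `rank_sources` treats f as an opaque callable, the same ranking code would work whether f interprets a neural network or a non-neural component.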

| PRINCIPAL INVESTIGATORS | RESEARCHERS | THEMES |
|---|---|---|
| Dawn Song | Chang Liu | Autonomous Driving Logs, Anomaly Detection, Automatic Diagnosis |