Imitative Models: Learning Flexible Driving Models from Human Data

ABOUT THE PROJECT

At a glance

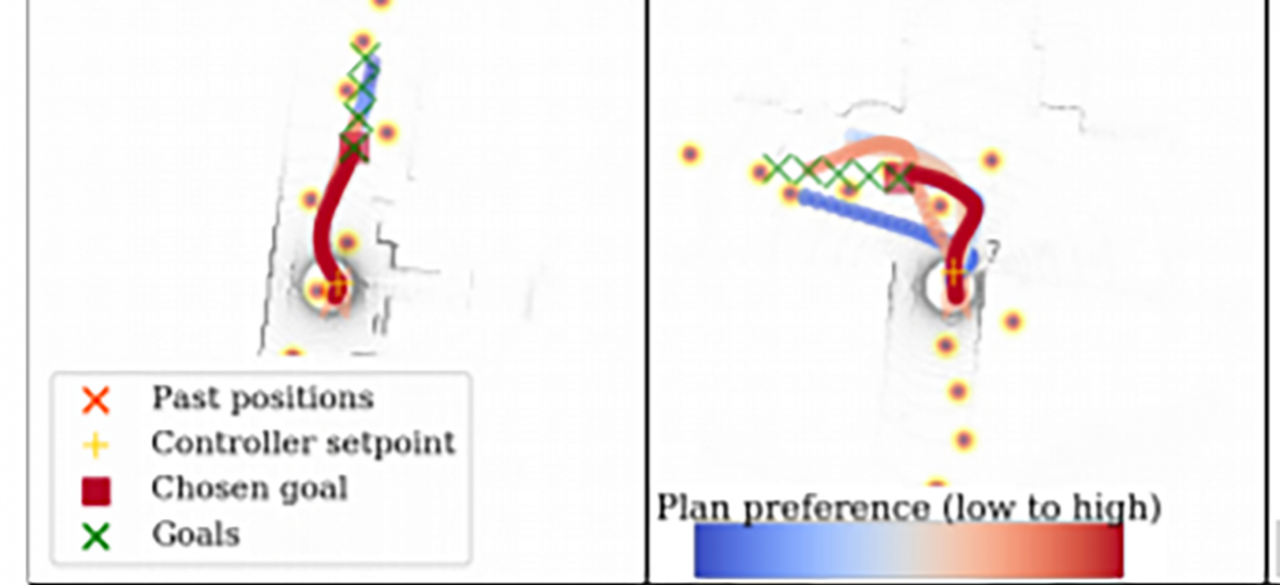

Imitation learning is a powerful tool for learning robotic control policies, and has recently been applied to autonomous driving to train end-to-end steering policies. However, pure imitation learning is very limiting in this setting. Although an end-to-end imitation policy can convert raw observations directly into actions, such a policy is inflexible: it is difficult to incorporate constraints, planning, and safety mechanisms into it, difficult to integrate it into existing control pipelines, and difficult even to compose it with other learned components and policies. Model-based reinforcement learning offers an appealing alternative: learned models can be used as part of a conventional planning algorithm, where they can be combined with hand-specified safety and comfort cost terms and integrated much more readily into existing control pipelines. However, model-based reinforcement learning methods suffer from a major shortcoming due to distributional shift: a model trained only on “good” data of safe and correct driving (as might be obtained from a human driver) will not necessarily make reasonable predictions about potential adverse events. To “understand” these adverse events, the model must be provided with ample data of such events occurring. In robotics, this typically requires on-policy data collection, which can be disastrously dangerous for autonomous vehicles. Can we devise algorithms that combine the best of both worlds: the ability of imitation learning to learn entirely from safe, off-policy data of human drivers, and the flexibility and composability of model-based reinforcement learning algorithms?

| principal investigators | researchers | themes |
|---|---|---|
| Sergey Levine | | Imitation Learning, Model-based Reinforcement Learning, Probabilistic Models, Deep Learning |