Adversarially Robust Visual Understanding

ABOUT THE PROJECT

At a glance

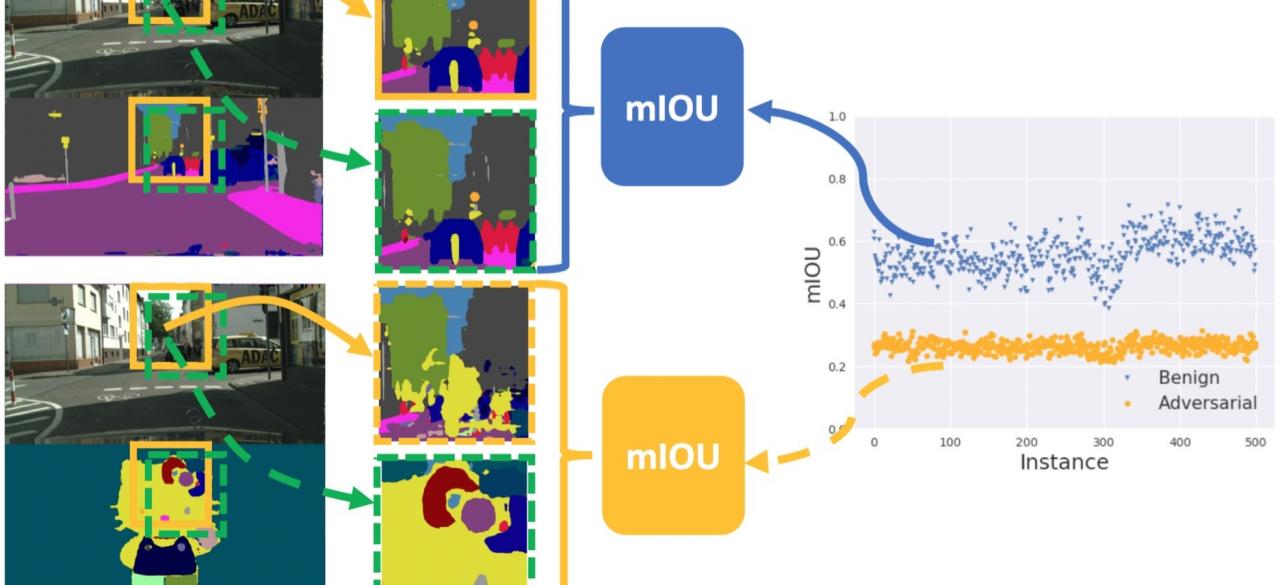

Machine learning, especially deep neural networks (DNNs), has achieved state-of-the-art performance across a wide range of application areas. For instance, the vision system of a self-driving car can use DNNs to better identify road signs, pedestrians, and vehicles, and to make informed driving decisions. However, recent research has shown that DNNs lack robustness to adversarial examples: inputs that look almost identical to legitimate data points to a human but can mislead a DNN into making incorrect predictions. For instance, the PI’s recent research has demonstrated that adversarially perturbed road signs, such as a STOP sign, can mislead the perception system of a self-driving car into misclassifying them as speed limit signs, with potentially catastrophic consequences. Such adversarial examples raise serious security and safety concerns about deploying DNNs in real-world applications. Existing defenses against adversarial examples are mostly tailored to specific attacks and fail to adapt when the attack strategy changes. Hence, it is important to understand the security of deep learning in the presence of attackers and to develop defense strategies against adaptive attacks.

In this proposal, we propose various techniques to gain a more comprehensive understanding of adversarial examples. A rigorous explanation for adversarial examples will facilitate the design of provably robust DNNs, thus ending the arms race between defenders and attackers. In addition, we propose to detect adversarial examples for different vision tasks, such as video classification, object detection, and 3D object rendering, based on spatial and temporal consistency information.

| PRINCIPAL INVESTIGATORS | RESEARCHERS | THEMES |
|---|---|---|
| Dawn Song | | adversarial examples, secure machine learning, attacks and defenses |