Pixel-Level Confidence Prediction for Interpretable Network-Based Driving (ConfPix)

ABOUT THE PROJECT

At a glance

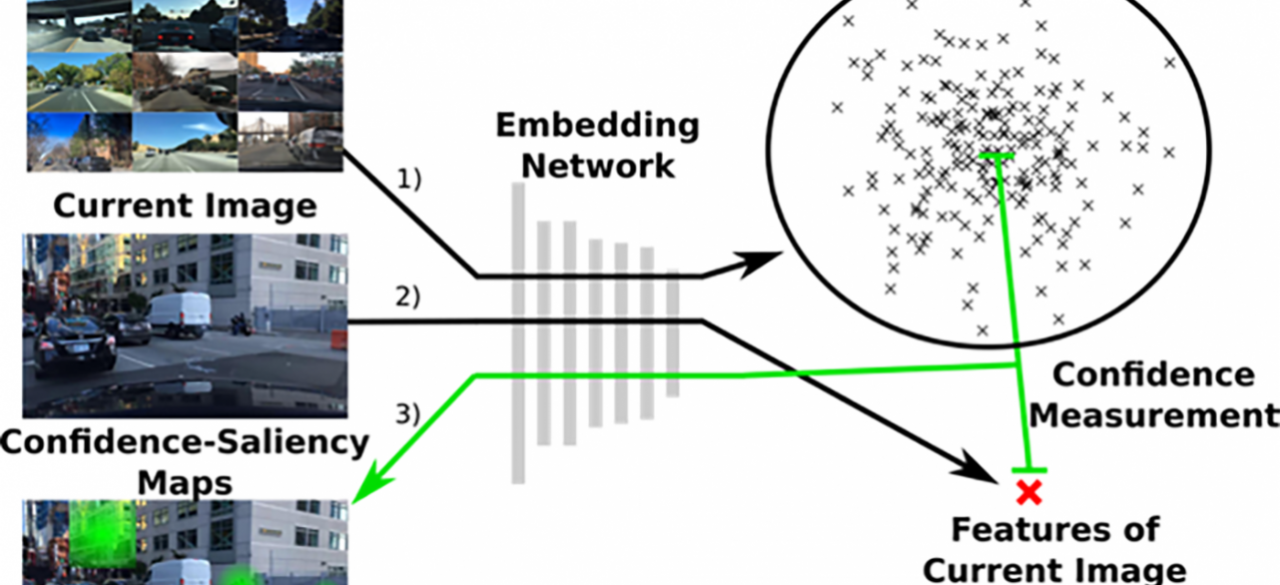

We propose a confidence estimation framework based on pixel-level confidence predictions. A network’s performance on a task is estimated by tracing structures in the camera input to a calculated measure of confidence. This extends our work on confidence estimation in three ways: 1) identifying structures responsible for a deterioration of the confidence rating, 2) discerning and measuring the similarity between simulated and real images, and 3) providing new ways of testing the robustness of network-based driving functions.

Background and Motivation: For risk and safety evaluations of neural network-based driving functions, it is highly desirable that these systems are understandable and interpretable. Existing solutions for image segmentation, object detection or control signal regression do not support these requirements. In the first phase of our BDD project, which addresses these needs, we developed a confidence measure that is agnostic to the specific network and task. By evaluating the relationship of test data to training data, we derived a measure of the visual similarity of a novel camera image to the distribution of training data. The confidence in the output of a network can now be calculated by passing the same images through a confidence-calculating network at the same time. Our assumption is that visually similar images lead to similar network performance when the two networks are trained on the same data. Building on this confidence measure, the next question is how information from the similarity measure can be exploited to improve network performance and the training process.
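
As a rough illustration of scoring an image by its similarity to the training distribution, the sketch below averages the cosine similarity between a test embedding and its most similar training embeddings. It is not the project's actual measure: the use of feature embeddings, the cosine metric, the nearest-neighbour averaging, and the names `similarity_confidence` and `k` are all assumptions made for this example.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def similarity_confidence(test_embedding, train_embeddings, k=3):
    """Assumed score: mean cosine similarity to the k most similar training embeddings."""
    if not train_embeddings:
        raise ValueError("train_embeddings must not be empty")
    sims = sorted(
        (cosine_similarity(test_embedding, t) for t in train_embeddings),
        reverse=True,
    )
    top = sims[:k]
    return sum(top) / len(top)


# Hypothetical 2-D embeddings; a real system would use learned image features.
train = [[1.0, 0.0], [0.9, 0.1], [0.8, 0.2]]
familiar = similarity_confidence([1.0, 0.05], train, k=2)  # close to the training data
novel = similarity_confidence([0.0, 1.0], train, k=2)      # far from the training data
```

Under these assumptions, `familiar` comes out higher than `novel`, mirroring the idea that images resembling the training data receive higher confidence.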

Research Goal: Pixel-Level Confidence Prediction. Our current method evaluates the confidence of one or several camera images at once. Whether a test situation is similar to training situations is answered with a single score. We propose to extend this single-image score to a pixel-wise confidence map, similar to Bojarski et al. (2017), in which each pixel is rated according to its contribution to the score. By investigating pixel-wise predictions, detailed structures in the input can be traced to their effect on the confidence. Furthermore, modifications of those structures can be used to push an image further away from or closer to the training distribution: if damaged lane markings are identified as dominant features that lower the confidence score, masking out these lane markings can create diverse data to stress-test the original network under investigation while staying close to real data.
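
One simple way to picture a per-pixel contribution map is occlusion sensitivity: replace one pixel at a time and record how the image-level score changes. This is a different, much cruder technique than the one in Bojarski et al. (2017) and is not the method proposed here. The function `toy_score` is a hypothetical stand-in for a confidence network, and `fill` is an assumed masking value.

```python
def occlusion_confidence_map(image, score_fn, fill=0.0):
    """Per-pixel contribution: base score minus the score with that pixel set to `fill`.

    A negative value means the pixel lowers the score (masking it raises the score).
    """
    base = score_fn(image)
    contributions = []
    for r, row in enumerate(image):
        out_row = []
        for c in range(len(row)):
            occluded = [list(x) for x in image]  # copy so the input stays unchanged
            occluded[r][c] = fill
            out_row.append(base - score_fn(occluded))
        contributions.append(out_row)
    return contributions


def toy_score(image):
    """Hypothetical confidence score: bright pixels in the left column lower it."""
    return 1.0 - 0.5 * sum(row[0] for row in image) / len(image)


# Tiny 2x2 grayscale "image"; the bright top-left pixel plays the role of a
# structure that reduces confidence.
image = [[1.0, 0.2], [0.0, 0.7]]
cmap = occlusion_confidence_map(image, toy_score)
```

In this toy setting only the top-left entry of `cmap` is nonzero and it is negative, which marks that pixel as the one whose masking would raise the score. A real pixel-wise confidence map would come from the network itself rather than from exhaustive occlusion, which scales poorly with image size.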

| principal investigators | researchers | themes |
|---|---|---|
| Ching-Yao Chan, Stella Yu | Sascha Hornauer | confidence estimation, driving safety, unsupervised learning, metric learning, domain gap, data perturbation |