Data Efficiency for Point Cloud Perception Models

ABOUT THIS PROJECT

At a glance

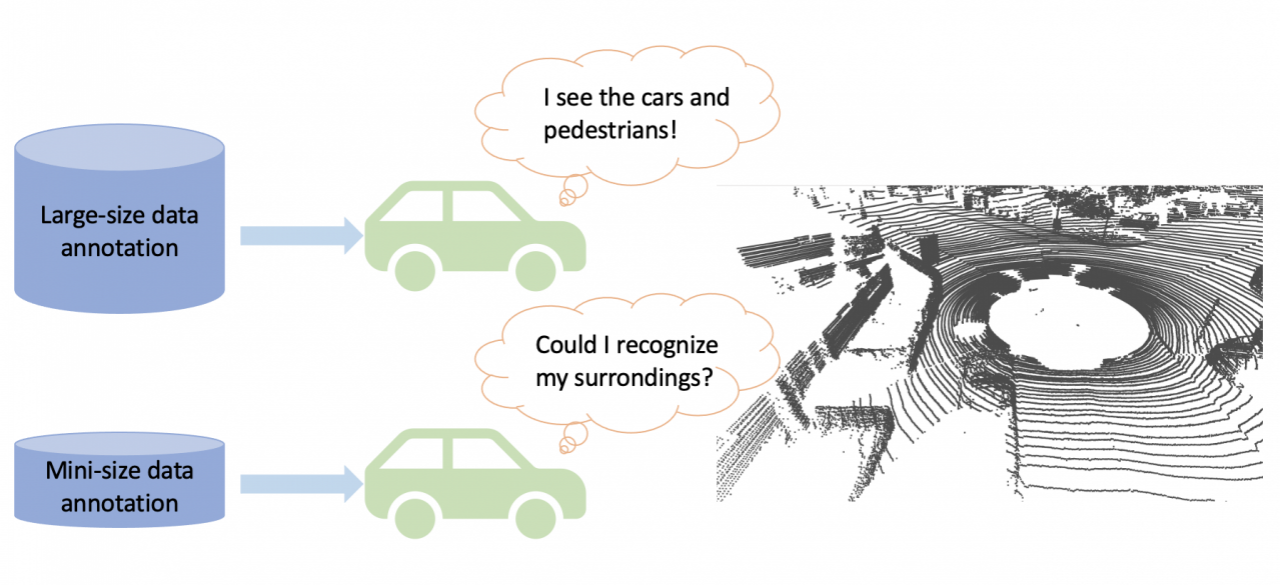

LiDAR is a standard sensor on current generations of robotaxis. It is preferred for its accurate depth estimation and its complete capture of spatial structure. With an ever-growing variety of new and less expensive LiDAR sensors, LiDAR may soon become available in passenger cars. However, annotating LiDAR point clouds is challenging, and this is a bottleneck for broad adoption. Point clouds are more expensive to label than 2D images because they are sparse and unorganized, and massive amounts of data must be annotated. This motivates us to study data-efficient deep learning algorithms for point cloud perception that reduce the annotation required to train deep learning models. Recent work in image-based computer vision (SimCLR [1]) has shown that a neural network can be trained with 10x fewer annotations and still reach the same accuracy as full supervision. We therefore propose to design training strategies tailored to point cloud processing models that achieve close to fully supervised performance with a minimal amount of annotated data.

In 2D image perception, there is a large body of research on reducing the labor of data annotation, such as domain adaptation [5,8], self-supervised learning, and semi-supervised learning. Unlike previous work that attempts to reduce annotation via unsupervised domain adaptation, we propose to achieve data efficiency via self-supervised and semi-supervised training strategies, which have been demonstrated to be reliable in the 2D image domain [1,7]. Applying these strategies to LiDAR will be novel and valuable to both industry and academia.

| Principal investigators | Researchers | Themes |
|---|---|---|
|  | Wei Zhan, Chenfeng Xu | point cloud perception, data efficiency, self-/semi-supervised learning |