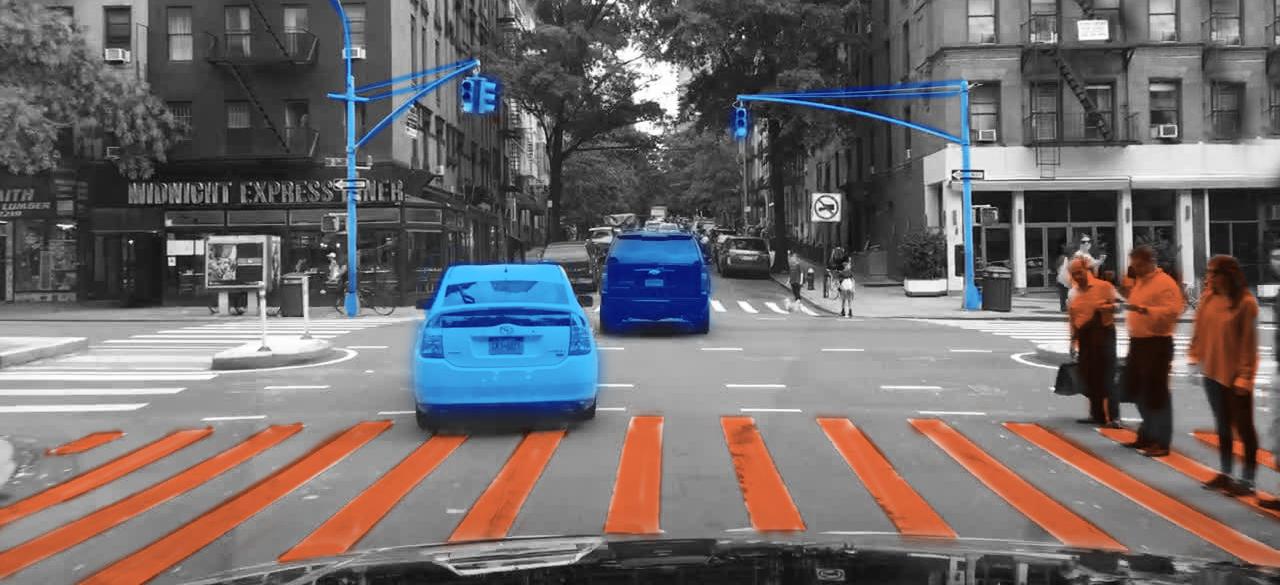

Adversarially Robust Perception for Autonomous Driving

ABOUT THIS PROJECT

At a glance

Current deep learning classifiers are not robust: they are susceptible to adversarial examples, inputs crafted to fool the classifier, which an attacker could use to manipulate autonomous vehicles. We will study how to make deep learning classifiers robust against these attacks, focusing on three specific approaches to defending machine learning: generative models, improvements to adversarial training, and systems-level consistency checks.
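To make the threat concrete, here is a minimal toy sketch, not the project's method: a perturbation in the style of the fast gradient sign method (FGSM) applied to a hypothetical linear classifier. The weights `w`, bias `b`, input `x`, budget `eps`, and helper names such as `fgsm_linear` are made-up illustrations only.

```python
# Toy sketch: an FGSM-style adversarial perturbation against a linear classifier.
# All weights, inputs, and the epsilon budget are hypothetical values chosen
# for illustration; they are not taken from this project.


def score(w, b, x):
    """Linear decision score w.x + b; a positive score means class 1."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_linear(w, x, eps):
    """Move each feature by eps against the sign of the score's gradient.

    For a linear score the gradient with respect to x is just w, so this
    lowers the score by eps * sum(|w_i|) while changing no single feature
    by more than eps (an L-infinity budget).
    """
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


n = 100
w = [0.5 if i % 2 == 0 else -0.5 for i in range(n)]
b = 0.0
x = [0.02 * sign(wi) for wi in w]  # clean input, score is about +1.0

x_adv = fgsm_linear(w, x, eps=0.05)

print(predict(w, b, x), predict(w, b, x_adv))     # 1 0
print(max(abs(a - c) for a, c in zip(x_adv, x)))  # about 0.05
```

Each feature moves by only 0.05, yet the score drops by `eps` times the sum of `|w_i|` (here about 2.5), which flips the prediction. Because that shift grows with the number of input features, this toy hints at why high-dimensional image classifiers can be fooled by small perturbations.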

| Principal Investigators | Researchers | Themes |
|---|---|---|
| David Wagner | | adversarial examples, robustness, security |