Best of Both Worlds: Combining Classical Planning with Learning for Long-Horizon Tasks

ABOUT THIS PROJECT

At a glance

Reinforcement and imitation learning have been quite successful at enabling agents to act directly from raw, high-dimensional input, such as camera images, on individual tasks [1]. However, real-world sensorimotor agents must solve an array of user-specified tasks: consider a self-driving vehicle that navigates to many destinations, or a robotic arm that assembles multiple structures. While learning-based approaches have enabled agents to be conditioned on goals and to reach nearby ones [2, 3], this success has been limited to short-horizon scenarios, and scaling these methods to distant goals remains extremely challenging. Classical planning algorithms, on the other hand, have achieved great success on long-horizon tasks by reducing them to graph search. For instance, A* was used to control Shakey the Robot for real-world navigation over five decades ago [4]. Unfortunately, these long-range planners operate on graphs that are abstracted away from the raw sensory data using domain-specific priors, and they assume perfect controllers for traversing the edges.
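The reduction to graph search that classical planners rely on can be illustrated with a textbook A* search. The sketch below is a generic implementation, not the planner used on Shakey or in this project. It assumes non-negative edge costs and an admissible heuristic, and the `GRID`, `grid_neighbors`, and `manhattan` names are hypothetical stand-ins for a hand-built map abstraction.

```python
import heapq
from typing import Callable, Dict, Hashable, List, Optional, Tuple

Node = Hashable


def a_star(
    neighbors: Callable[[Node], List[Tuple[Node, float]]],
    heuristic: Callable[[Node], float],
    start: Node,
    goal: Node,
) -> Optional[List[Node]]:
    """Return a lowest-cost path from start to goal, or None if unreachable.

    Assumes non-negative edge costs and an admissible heuristic (one that
    never overestimates the remaining cost to the goal).
    """
    # Frontier entries are (f = g + h, tie-breaker, node); the counter keeps
    # heapq from ever comparing nodes directly.
    counter = 0
    frontier = [(heuristic(start), counter, start)]
    g_cost: Dict[Node, float] = {start: 0.0}
    parent: Dict[Node, Node] = {}
    while frontier:
        _, _, node = heapq.heappop(frontier)
        if node == goal:
            # Follow parent pointers back to the start to rebuild the path.
            path = [node]
            while node in parent:
                node = parent[node]
                path.append(node)
            return path[::-1]
        for nxt, cost in neighbors(node):
            new_g = g_cost[node] + cost
            if new_g < g_cost.get(nxt, float("inf")):
                # Found a cheaper route to nxt: record it and (re)queue nxt.
                g_cost[nxt] = new_g
                parent[nxt] = node
                counter += 1
                heapq.heappush(frontier, (new_g + heuristic(nxt), counter, nxt))
    return None


# Hypothetical 4x4 occupancy grid ('#' marks an obstacle), standing in for a
# hand-built map abstraction of the environment.
GRID = [
    "....",
    ".##.",
    ".#..",
    "....",
]
GOAL = (2, 2)


def grid_neighbors(cell):
    """4-connected unit-cost moves that stay on the grid and off obstacles."""
    r, c = cell
    result = []
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(GRID) and 0 <= nc < len(GRID[0]) and GRID[nr][nc] != "#":
            result.append(((nr, nc), 1.0))
    return result


def manhattan(cell):
    """Admissible heuristic for unit-cost 4-connected moves."""
    return abs(cell[0] - GOAL[0]) + abs(cell[1] - GOAL[1])


path = a_star(grid_neighbors, manhattan, (0, 0), GOAL)
print(len(path) - 1)  # → 6 moves (obstacles block every 4-move route)
```

Everything this planner knows comes from `GRID` and the unit edge costs. That is exactly the limitation described above: a real robot observes pixels rather than such a grid, and it must still physically execute each edge the search returns.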

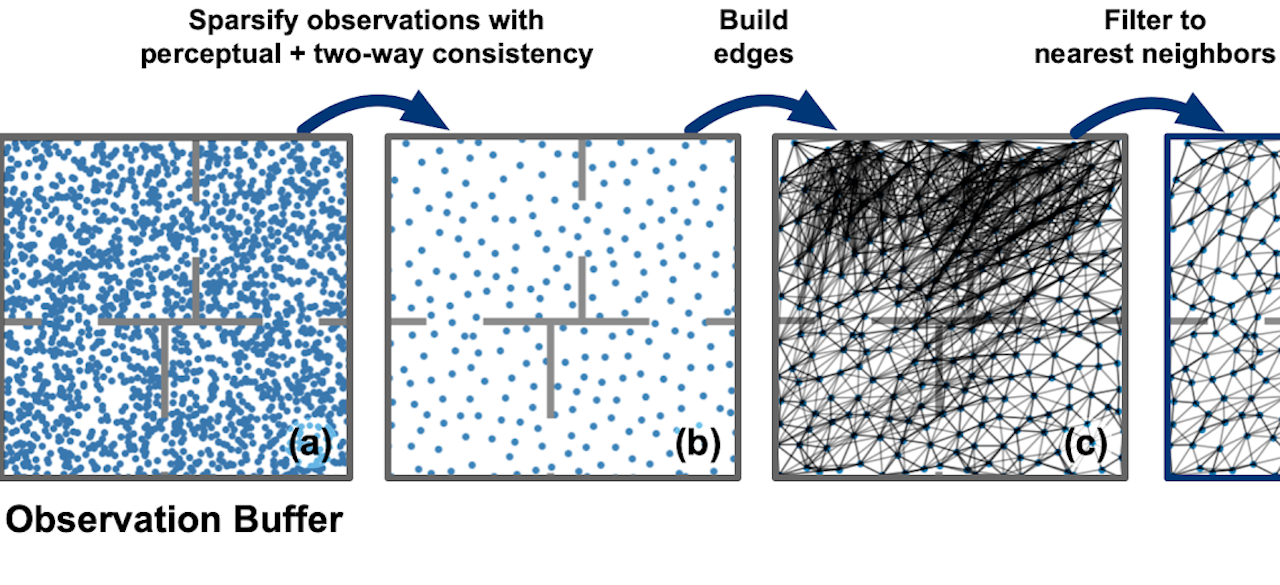

How can we get the best of both worlds? In this research, we will combine the long-horizon ability of classical graph planning with the flexibility of modern, parametric, learning-driven control.
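One way to picture this combination is to let a graph planner string together short hops that a learned controller can handle. The sketch below is an illustrative assumption, not this project's method: `toy_distance` (a Euclidean placeholder for a learned estimate of how far apart two observations are), `toy_policy` (a placeholder for a goal-conditioned controller), `memory`, and `max_edge` are all hypothetical. It uses Dijkstra's algorithm rather than A* because no heuristic is assumed to be available in observation space.

```python
import heapq
import math
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Obs = Tuple[float, float]
# Hypothetical stand-ins for learned components:
#   distance(a, b) -> estimated cost to reach b from a (e.g. a learned critic)
#   policy(state, subgoal) -> next state under a goal-conditioned controller
DistanceFn = Callable[[Obs, Obs], float]
PolicyFn = Callable[[Obs, Obs], Obs]


def plan_waypoints(memory: Sequence[Obs], distance: DistanceFn, start: Obs,
                   goal: Obs, max_edge: float) -> Optional[List[Obs]]:
    """Dijkstra over stored observations, connecting only pairs whose
    estimated distance is at most max_edge."""
    nodes = [start, *memory, goal]
    goal_idx = len(nodes) - 1
    best: Dict[int, float] = {0: 0.0}
    parent: Dict[int, int] = {}
    frontier = [(0.0, 0)]
    while frontier:
        d, u = heapq.heappop(frontier)
        if u == goal_idx:
            idx = [u]
            while u in parent:
                u = parent[u]
                idx.append(u)
            return [nodes[i] for i in reversed(idx)]
        if d > best[u]:
            continue  # stale queue entry
        for v in range(len(nodes)):
            if v == u:
                continue
            w = distance(nodes[u], nodes[v])
            # Only trust short hops; long-range estimates are the weak part.
            if w <= max_edge and d + w < best.get(v, math.inf):
                best[v] = d + w
                parent[v] = u
                heapq.heappush(frontier, (d + w, v))
    return None


def follow(waypoints: List[Obs], policy: PolicyFn,
           tol: float = 1e-6, max_steps: int = 100) -> Obs:
    """Hand each waypoint to the short-horizon controller in turn."""
    state = waypoints[0]
    for subgoal in waypoints[1:]:
        for _ in range(max_steps):
            if math.dist(state, subgoal) <= tol:
                break
            state = policy(state, subgoal)
    return state


def toy_distance(a: Obs, b: Obs) -> float:
    # Euclidean distance as a placeholder for a learned estimate.
    return math.dist(a, b)


def toy_policy(state: Obs, subgoal: Obs, step: float = 0.5) -> Obs:
    # Moves at most `step` toward the subgoal per call.
    gap = math.dist(state, subgoal)
    if gap <= step:
        return subgoal
    t = step / gap
    return (state[0] + t * (subgoal[0] - state[0]),
            state[1] + t * (subgoal[1] - state[1]))


memory = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (2.0, 3.0)]
route = plan_waypoints(memory, toy_distance, (0.0, 0.0), (4.0, 0.0), max_edge=1.5)
print(route)  # [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (4.0, 0.0)]
print(follow(route, toy_policy))  # (4.0, 0.0)
```

The `max_edge` threshold encodes the division of labor in this sketch: graph search covers the long horizon, while the controller is only ever asked to reach nearby waypoints, the short-horizon regime in which learning-based goal reaching has already succeeded.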

| Principal investigators | Researchers | Themes |
|---|---|---|
| Pieter Abbeel | | |