Clockwork FCNs for Fast Video Processing

ABOUT THE PROJECT

At a glance

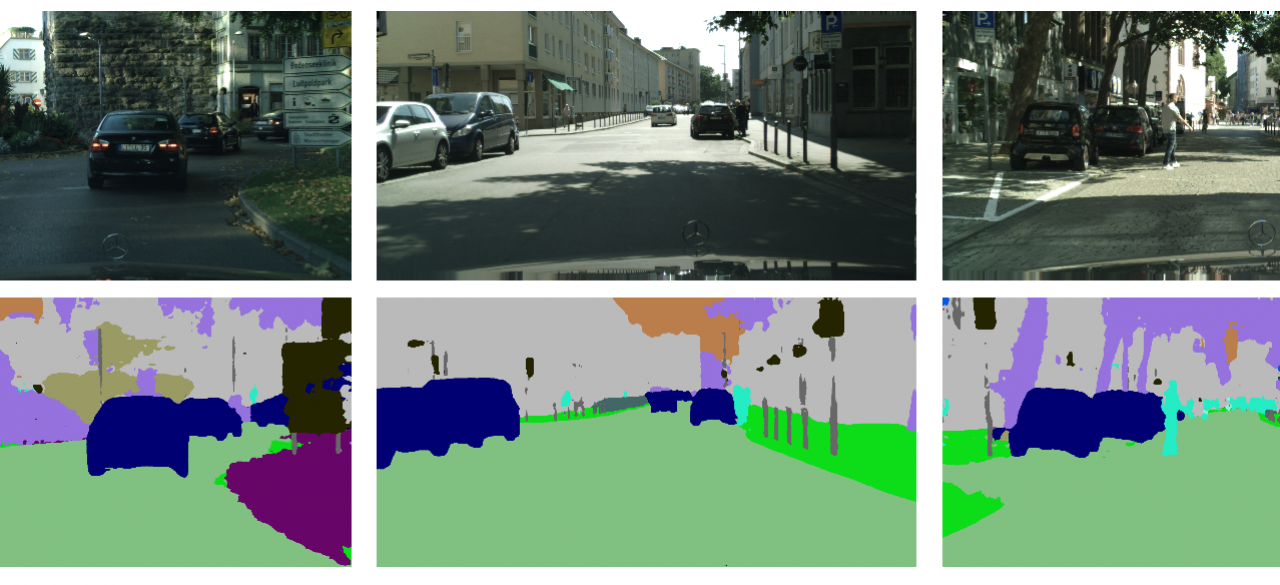

Video-rate semantic segmentation with contemporary convolutional networks requires considerable computational resources. Its accuracy also degrades under the image blur caused by rapidly moving cameras or objects. This project will investigate complementary innovations for fast and accurate labeling of blur-free video of dynamic scenes.

While pixels may change rapidly from frame to frame, the high-level semantic content of a scene is more stationary. The team will use a novel, frame-asynchronous “clockwork” convnet that pipelines processing over time, updating different levels of the semantic processing hierarchy at different rates. This significantly reduces frame processing time with little or no degradation in performance. The team’s proposed approach will also use learned spatial semantic transitions to reduce redundant processing and improve overall recognition performance.

Further, the team will address motion blur by leveraging an overlooked feature of laser-based projector-camera systems: each pixel of the scene is laser-illuminated so briefly that blur is nearly non-existent. It has been observed that an unmodified low-power pico-projector suffices as an indoor illumination source to yield blur-free video with a consumer-grade CMOS camera. The clockwork FCN provides fast and accurate semantic segmentation of video, while the combination of network and projector-camera hardware opens up the possibility of processing video with extreme motion, such as footage captured on assembly lines or by flying drones.
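To make the multi-rate idea concrete, here is a minimal Python sketch of a fixed-rate clockwork schedule. It is a simplification under stated assumptions, not the team's implementation: the network is modeled as a plain chain of stages (ignoring skip connections), each stage gets a hypothetical integer clock period, and a stage that does not fire on a given frame simply reuses its cached output. The names `ClockworkPipeline`, `periods`, and `count_stage_evaluations` are illustrative only.

```python
from typing import Any, Callable, List, Tuple

Stage = Callable[[Any], Any]
_UNSET = object()  # marks a stage that has not produced an output yet


class ClockworkPipeline:
    """Hypothetical fixed-rate clockwork schedule over a chain of stages.

    Stage i is recomputed only on frames where frame_index % periods[i] == 0
    (or when it has no cached output yet); otherwise its last output is
    reused, so deeper, slower-changing stages can be given longer periods.
    """

    def __init__(self, stages: List[Stage], periods: List[int]) -> None:
        if len(stages) != len(periods):
            raise ValueError("need one clock period per stage")
        if any(p < 1 for p in periods):
            raise ValueError("clock periods must be positive integers")
        self.stages = stages
        self.periods = periods
        self.cache: List[Any] = [_UNSET] * len(stages)
        self.frame_index = 0

    def step(self, frame: Any) -> Tuple[Any, List[int]]:
        """Process one frame; return the final output and the indices of stages that fired."""
        x = frame
        fired: List[int] = []
        for i, (stage, period) in enumerate(zip(self.stages, self.periods)):
            if self.frame_index % period == 0 or self.cache[i] is _UNSET:
                x = stage(x)       # recompute from the fresh or cached output below
                self.cache[i] = x
                fired.append(i)
            else:
                x = self.cache[i]  # reuse this stage's last output
        self.frame_index += 1
        return x, fired


def count_stage_evaluations(periods: List[int], num_frames: int) -> List[int]:
    """Count how many times each stage runs over num_frames, using identity stages."""
    counts = [0] * len(periods)

    def make_stage(i: int) -> Stage:
        def stage(x: Any) -> Any:
            counts[i] += 1
            return x
        return stage

    pipe = ClockworkPipeline([make_stage(i) for i in range(len(periods))], periods)
    for t in range(num_frames):
        pipe.step(t)
    return counts
```

With periods `[1, 2, 4]` over 8 frames, the three stages run 8, 4, and 2 times: 14 stage evaluations instead of 24. Deeper stages get the longer periods here because high-level semantics change more slowly than pixels.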

The team’s new approach is the first reported design for blur-free imaging under extreme motion using a laser-based projector-camera system.
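A back-of-the-envelope calculation shows why brief per-pixel illumination suppresses blur. The sketch below assumes an idealized raster-scanning laser projector that lights every pixel exactly once per frame, so each pixel's dwell time is roughly the frame period divided by the pixel count. It treats blur length as image-plane speed times exposure time. The 60 Hz rate, 1280 x 720 resolution, and 1000 px/s speed are hypothetical values chosen for illustration, not figures from the project. Real scanners, and the geometric skew that sequential scanning introduces, will differ.

```python
def pixel_dwell_time_s(frame_rate_hz: float, width_px: int, height_px: int) -> float:
    """Approximate time one projector pixel is lit per frame, assuming an
    idealized raster scan that visits every pixel exactly once per frame."""
    return 1.0 / (frame_rate_hz * width_px * height_px)


def blur_extent_px(speed_px_per_s: float, exposure_s: float) -> float:
    """Motion-blur length: how far an image feature moves while it is being exposed."""
    return speed_px_per_s * exposure_s


if __name__ == "__main__":
    dwell = pixel_dwell_time_s(60.0, 1280, 720)   # roughly 1.8e-8 s (about 18 ns)
    ambient = blur_extent_px(1000.0, 1.0 / 60.0)  # full-frame exposure under ambient light
    laser = blur_extent_px(1000.0, dwell)         # exposure limited to the laser dwell time
    print(f"dwell={dwell:.2e} s, ambient blur={ambient:.1f} px, laser blur={laser:.1e} px")
```

Under these assumptions, a feature moving at 1000 px/s smears across roughly 17 pixels during a 1/60 s ambient exposure, but moves only on the order of 10^-5 pixels while a single projector pixel is lit.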

| principal investigators | researchers | themes |
|---|---|---|
| Trevor Darrell | J. Hoffman, Kate Rakelly, E. Shelhamer | Semantic Segmentation, Convolutional Networks |