Computationally Efficient Option-based Monte Carlo Planning for POMDP

ABOUT THIS PROJECT

At a glance

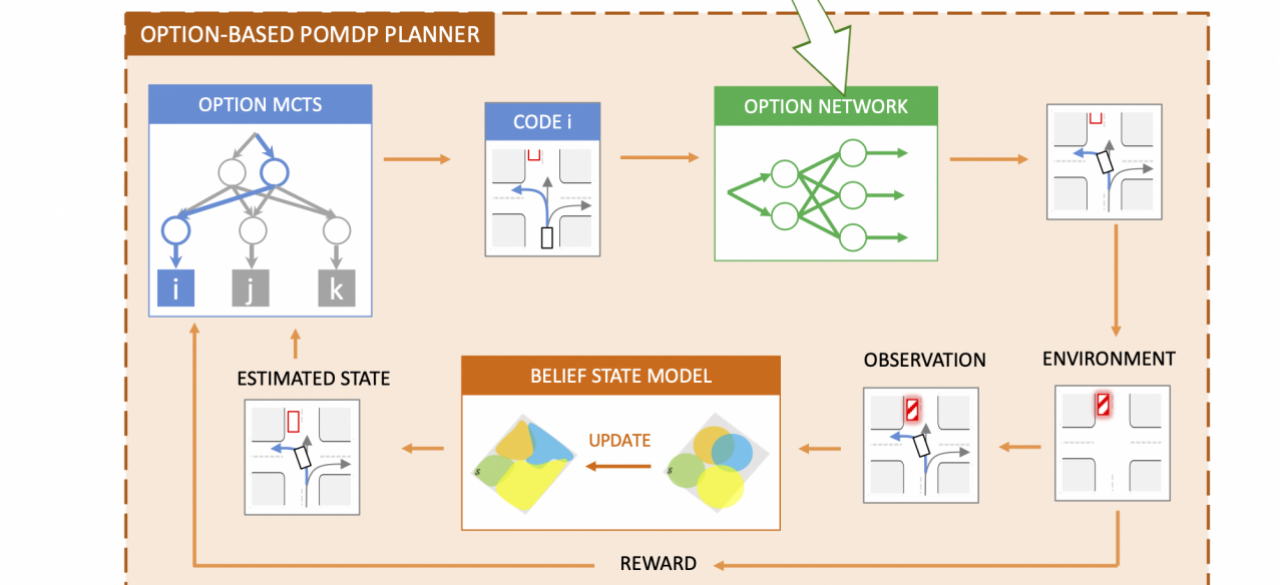

Monte Carlo Tree Search (MCTS)-based planning approaches have achieved great success in solving complex Markov Decision Process (MDP) problems and games [1-2]. However, it is limited to problems with discrete action space. While discretization can be applied to convert a continuous action space into a discrete one, the search space could be significantly complex for problems with high-dimensional continuous action space. It prevents the applications of MCTS-based planning methods in challenging robotics and autonomous driving tasks which require real-time computation. In this project, we aim to develop a computationally efficient MCTS-based planning algorithm for practical autonomous driving motion planning problems in challenging traffic scenarios, where the problems are abstracted as partially observable MDPs (POMDPs) with continuous and high-dimensional state and action spaces. The core idea is to transform the original POMDP problem into a hierarchical one via the introduction of options [3]. Options are high-level discrete actions representing different skills (e.g., turning, overtaking, etc.), which can take the forms of temporally extended action sequences or prefixed control policies. Instead of searching over the original action space, the MCTS-based planner is used to search options, which significantly reduce the search space and computational complexity. Furthermore, we want to combine MCTS-based planning with option-critic reinforcement learning (RL) [3]. Specifically, we will introduce a high-level termination policy determining whether the current option should be terminated. MCTS will only be used to search new options when the current option is terminated. By doing so, we can further prune the search tree and significantly accelerate the planning procedure. We want to demonstrate that the proposed method is capable of efficiently solving challenging driving tasks which are impossible to be solved by conventional MCTS-based planning approaches.

| Principal investigators | researchers | themes |

|---|---|---|

| Chen Tang | Monte Carlo tree search, POMDP, Efficient computing, Interactive planning |