Data Augmentation via Synthetic Point Cloud for 3D Detection Refinement and Domain Adaptation with Different LiDAR Configurations

ABOUT THE PROJECT

At a glance

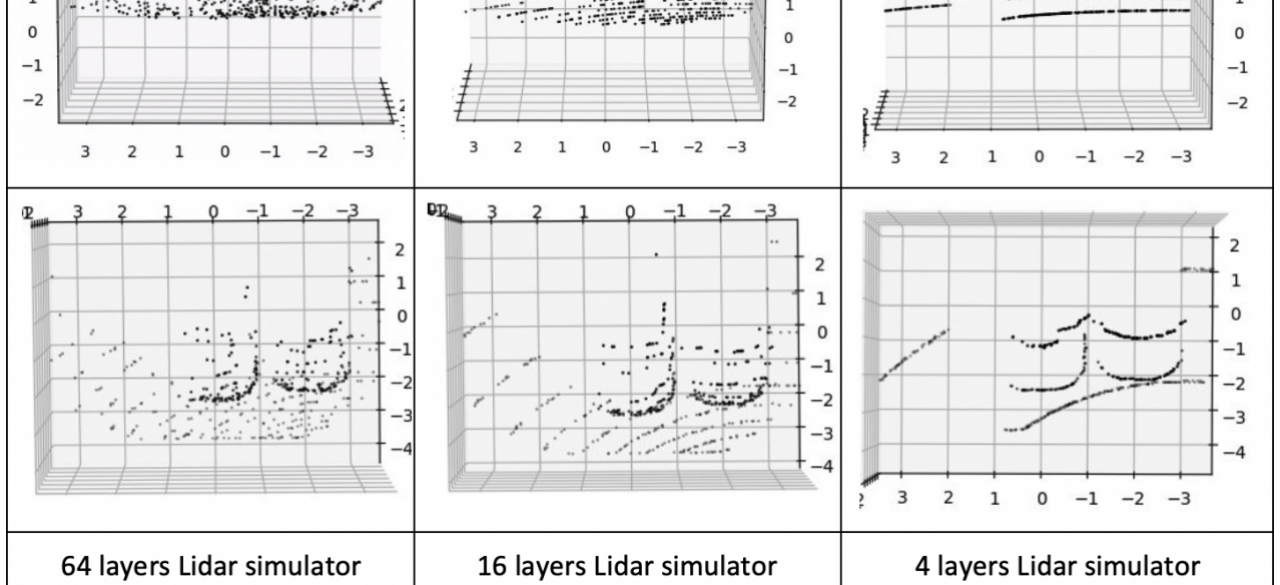

Although many state-of-the-art 3D detection networks have been proposed and tested on the KITTI benchmark, performance saturates at around 76% mAP, regardless of sensor usage (with or without a camera), network structure, or training technique. Moreover, when pretrained 3D detection networks that perform well on KITTI are applied to point cloud data collected from a 64-layer LiDAR (the same as KITTI) with subtle differences in configuration (the vertical angle of each beam), performance degrades, sometimes significantly. With entirely different configurations (64, 32, or 16 laser beams allocated at different positions), adapting 3D detectors becomes much harder.

Data augmentation via synthetic point clouds is a promising solution to these problems. In another proposal to BDD this year, we propose to conduct a comprehensive failure analysis of 3D detectors. With the failure cases obtained for a specific detector, augmented data can be generated accordingly to enhance its performance so that the bottleneck can be broken through. In addition, data augmentation for LiDAR sensors with different configurations can significantly improve the detectors' capability for domain adaptation. In this project, we propose to construct a systematic data augmentation framework based on the failure analysis. We will also design novel methods to generate synthetic point clouds for arbitrary LiDAR configurations, using 3D CAD models of the objects and dense point cloud maps of static scenes. Finally, we will propose new 3D detector structures that use the augmented data to achieve enhanced performance in both single-frame and video-frame 3D detection, as well as desirable domain adaptation capability.
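To make the idea of generating point clouds for an arbitrary LiDAR configuration more concrete, the following is a minimal sketch and not the project's actual method. It assumes a dense point cloud already expressed in the sensor frame and a spinning LiDAR described only by its per-beam elevation angles and a fixed azimuth resolution. The function name `resample_to_lidar_config` and the parameters `azimuth_res_deg` and `elev_tol_deg` are hypothetical, introduced here for illustration. Occlusion is approximated by keeping only the nearest point in each (beam, azimuth) cell.

```python
import numpy as np


def resample_to_lidar_config(points, beam_elevations_deg,
                             azimuth_res_deg=0.2, elev_tol_deg=0.2):
    """Resample a dense (N, 3) point cloud, given in the sensor frame,
    into the sparse scan pattern of a hypothetical spinning LiDAR.

    Returns the kept points and the beam index of each kept point.
    """
    points = np.asarray(points, dtype=float)
    beams = np.asarray(beam_elevations_deg, dtype=float)

    # Spherical coordinates of every point as seen from the sensor origin.
    rng = np.linalg.norm(points, axis=1)
    valid = rng > 1e-6  # drop points located at the sensor origin
    points, rng = points[valid], rng[valid]
    elev = np.degrees(np.arcsin(points[:, 2] / rng))
    azim = np.degrees(np.arctan2(points[:, 1], points[:, 0])) % 360.0

    # Assign each point to its closest beam; discard points that fall
    # between beams (farther than the tolerance from every beam).
    beam_idx = np.abs(elev[:, None] - beams[None, :]).argmin(axis=1)
    close = np.abs(elev - beams[beam_idx]) <= elev_tol_deg
    points, rng = points[close], rng[close]
    azim, beam_idx = azim[close], beam_idx[close]

    # Discretize azimuth so that each (beam, azimuth) cell is one laser firing.
    n_az = int(round(360.0 / azimuth_res_deg))
    az_idx = np.minimum((azim / azimuth_res_deg).astype(int), n_az - 1)
    cell = beam_idx * n_az + az_idx

    # Keep the nearest return per cell: sort by range, then take the
    # first occurrence of every cell in that sorted order.
    order = np.argsort(rng, kind="stable")
    _, first = np.unique(cell[order], return_index=True)
    keep = order[first]
    return points[keep], beam_idx[keep]
```

Under these assumptions, the same dense scene could be resampled with, for example, 64, 32, or 16 elevation angles to produce training data for each sensor layout. A realistic simulator would also need to model beam divergence, intensity, range noise, and dropped returns, none of which this sketch attempts.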

| principal investigators | researchers | themes |
|---|---|---|
| Masayoshi Tomizuka | Wei Zhan | domain adaptation, 3D detection, synthetic point cloud |