Deep Learning-Based Vehicle Control Strategist for Autonomous Vehicles

ABOUT THE PROJECT

At a glance

Background and Motivation

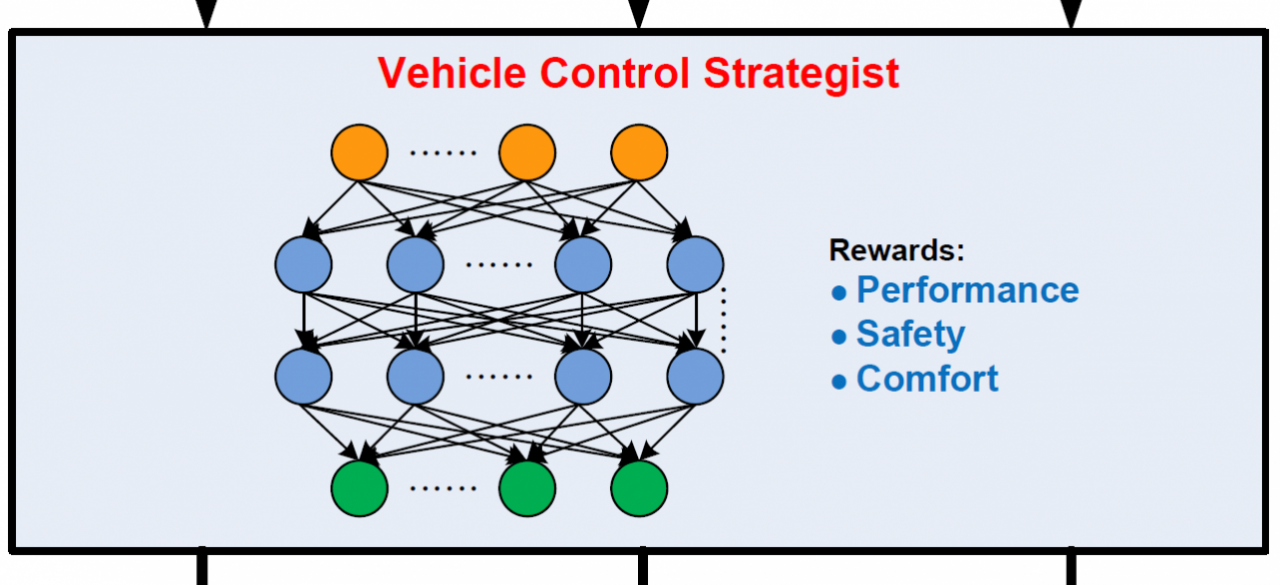

Inspired by recent progress in deep reinforcement learning, we intend to build a mid-level control algorithm called the “Vehicle Control Strategist” (VCS) using deep reinforcement learning. The structure of the VCS is shown in the figure above. The VCS receives inputs from motion planning, vehicle states, and environment perception, and uses a neural network (NN) to map these inputs to steering, acceleration/braking, and traction-distribution commands for the low-level controllers. The NN is designed and trained to achieve the desired performance based on a reward function that seeks to maximize driving performance, safety, and ride comfort. The NN model implicitly handles the coupling between the vehicle's longitudinal and lateral dynamics to derive an integrated control policy that optimizes overall performance.
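A reward that balances performance, safety, and comfort is often written as a weighted sum of penalty terms. The sketch below is a minimal illustration under that assumption only: the quantities in `StepInfo`, the weights, and the collision penalty are hypothetical placeholders, not the project's actual reward design.

```python
from dataclasses import dataclass


@dataclass
class StepInfo:
    """Hypothetical per-step quantities a driving simulator might report."""
    lateral_error: float      # m, deviation from the planned path
    speed_error: float        # m/s, deviation from the planned speed
    longitudinal_jerk: float  # m/s^3
    lateral_accel: float      # m/s^2
    collision: bool


# Hypothetical weights (assumed values); in practice these would be tuned.
W_TRACK = 1.0
W_SPEED = 0.5
W_COMFORT = 0.1
COLLISION_PENALTY = 100.0


def reward(info: StepInfo) -> float:
    """Weighted-sum reward: penalize tracking error, discomfort, and collisions."""
    # Driving performance: squared path- and speed-tracking errors.
    performance = -(W_TRACK * info.lateral_error ** 2 + W_SPEED * info.speed_error ** 2)
    # Ride comfort: squared jerk and lateral acceleration.
    comfort = -W_COMFORT * (info.longitudinal_jerk ** 2 + info.lateral_accel ** 2)
    # Safety: a large fixed penalty on collision.
    safety = -COLLISION_PENALTY if info.collision else 0.0
    return performance + comfort + safety
```

With these weights, `reward(StepInfo(0.2, 1.0, 0.5, 2.0, False))` evaluates to about -0.965. The relative weights determine how much tracking accuracy the learned policy will trade for comfort, so they would be among the parameters to tune.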

Research Goal and Approach

We propose to develop a mid-level control strategist for autonomous vehicles (AVs) using deep reinforcement learning algorithms. The strategist will be able to adapt to a variety of driving environments and vehicle dynamics, and it will update its control strategy based on its previous driving experience to improve driving performance.

In this project, we will use a Deep Q-Network (DQN) as the basis of a multi-layer neural network tailored to the VCS. The input states of the DQN will include motion planning, vehicle state, and driving environment information. The output actions will be control commands sent to lower-level control units, such as the steering wheel, throttle, brake, and torque vectoring of the motors at individual wheels. The VCS will use driving performance metrics, including safety and comfort, as its reward and will learn to coordinate longitudinal and lateral control in an integrated manner.
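A standard DQN outputs one Q-value per action from a finite set, so continuous steering, acceleration/braking, and torque-vectoring commands would have to be discretized. The sketch below assumes such a discretization and shows the parts of DQN that do not depend on the network architecture: a discrete action set, epsilon-greedy selection, an experience-replay buffer (one standard way to learn from previous driving experience), and the target y = r + γ · max over a' of Q_target(s', a'). The command grids and parameter values are hypothetical, the Q-network is abstracted as any callable returning a list of Q-values, and the gradient step that fits the network to the targets is omitted.

```python
import itertools
import random
from collections import deque

# Hypothetical command grids (assumed values); DQN needs a finite action set.
STEERING = (-0.1, 0.0, 0.1)          # rad, steering command
ACCEL = (-2.0, 0.0, 1.0)             # m/s^2: brake / coast / throttle
LEFT_RIGHT_BIAS = (-0.2, 0.0, 0.2)   # fraction of drive torque shifted between sides
ACTIONS = list(itertools.product(STEERING, ACCEL, LEFT_RIGHT_BIAS))  # 27 actions


def select_action(q_values, epsilon, rng=random):
    """Epsilon-greedy: random action with probability epsilon, else argmax Q."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def td_targets(batch, target_q, gamma=0.99):
    """DQN targets: r + gamma * max_a' Q_target(s', a'), or just r at terminal states.

    batch holds (state, action, reward, next_state, done) tuples;
    target_q is any callable mapping a state to a list of Q-values.
    """
    targets = []
    for _state, _action, r, next_state, done in batch:
        bootstrap = 0.0 if done else gamma * max(target_q(next_state))
        targets.append(r + bootstrap)
    return targets


class ReplayBuffer:
    """Fixed-capacity store of past transitions, sampled uniformly for training."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)  # oldest transitions drop out first

    def push(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size, rng=random):
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)
```

Sampling mini-batches from the buffer, rather than training on consecutive steps, breaks the strong correlation between successive driving states. Computing targets with a separate, periodically updated `target_q` keeps the regression target from shifting at every update.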

The proposed VCS algorithm will be developed in a simulation environment, and experiments will be conducted to verify the feasibility of the developed algorithms. The research plan includes the following tasks:

- Establish a simulation environment
  - Establish a driving environment model and a vehicle model
  - Consider various driving conditions, such as slippery roads and emergency maneuvers, as test cases
  - Generate the various trajectories required for the training process
- Create the VCS model using a Deep Q-Network (DQN)
  - Design the NN structure and the reward function
  - Train the VCS model with various driving scenarios and tune its hyperparameters
- Comparative analysis of VCS performance:
  - Compare the performance of the VCS with that of Model Predictive Control (MPC)-based or other conventional controllers
  - Evaluate performance metrics of the VCS and the conventional controllers
- Implementation on an experimental vehicle platform (optional second phase with a participating OEM)
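For the vehicle model in the first task, a kinematic bicycle model is one simple starting point. The sketch below is an illustration under that assumption, with hypothetical parameter values (wheelbase, distance from the center of gravity to the rear axle, time step); it is not the model the project specifies. Because a kinematic model assumes no tire slip, the slippery-road and emergency-maneuver test cases would need a dynamic model with a tire model instead.

```python
import math
from dataclasses import dataclass


@dataclass
class VehicleState:
    x: float    # m, global position
    y: float    # m, global position
    yaw: float  # rad, heading
    v: float    # m/s, speed


def bicycle_step(s, steer, accel, dt=0.05, wheelbase=2.7, lr=1.35):
    """Advance a kinematic bicycle model by one forward-Euler step.

    steer: front-wheel steering angle (rad); accel: longitudinal acceleration (m/s^2).
    wheelbase and lr (CG-to-rear-axle distance, m) are hypothetical values.
    """
    # Slip angle of the velocity vector at the center of gravity.
    beta = math.atan(lr / wheelbase * math.tan(steer))
    x = s.x + s.v * math.cos(s.yaw + beta) * dt
    y = s.y + s.v * math.sin(s.yaw + beta) * dt
    yaw = s.yaw + s.v / lr * math.sin(beta) * dt
    v = max(0.0, s.v + accel * dt)  # no reversing: braking stops at zero speed
    return VehicleState(x, y, yaw, v)


# Usage: roll out one second of a gentle left turn while accelerating.
state = VehicleState(0.0, 0.0, 0.0, 10.0)
for _ in range(20):
    state = bicycle_step(state, steer=0.05, accel=0.5)
```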

Research Team

The team members include the Co-Principal Investigators, Dr. Ching-Yao Chan of California PATH and Prof. Masayoshi Tomizuka.

| Principal Investigators | Researchers | Themes |
|---|---|---|
| Ching-Yao Chan, Masayoshi Tomizuka | | Deep Learning, Vehicle Control, Automated Vehicle |