Deep Reinforcement Learning

At a glance

Deep Reinforcement Learning for Tracking

In preliminary work, the team studied whether a neural network can be trained to track generic objects by watching videos of objects moving in the world. To that end, the team introduced GOTURN (Generic Object Tracking Using Regression Networks). By watching many videos of moving objects, the tracker learns the relationship between appearance and motion, which allows it to track new objects at test time. GOTURN thus establishes a new framework for tracking in which the relationship between appearance and motion is learned offline in a generic manner.
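To make the regression-based framing concrete, here is a minimal sketch of a frame-to-frame tracking loop. It is an illustration under stated assumptions, not the GOTURN implementation: the `regress` callable stands in for a trained network, its signature is hypothetical, and the search-region scale `context=2.0` is an assumed value. The sketch only shows how a regressed box, expressed relative to a search region around the previous location, could be mapped back to image coordinates.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in image pixels


def search_region(prev_box: Box, context: float = 2.0) -> Box:
    """Region centered on prev_box, enlarged by `context` (assumed value)."""
    x1, y1, x2, y2 = prev_box
    cx, cy = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    w, h = (x2 - x1) * context, (y2 - y1) * context
    return (cx - w / 2.0, cy - h / 2.0, cx + w / 2.0, cy + h / 2.0)


def to_image_coords(region: Box, rel_box: Box) -> Box:
    """Map a box in [0, 1] region-relative units back to image pixels."""
    rx1, ry1, rx2, ry2 = region
    rw, rh = rx2 - rx1, ry2 - ry1
    bx1, by1, bx2, by2 = rel_box
    return (rx1 + bx1 * rw, ry1 + by1 * rh, rx1 + bx2 * rw, ry1 + by2 * rh)


def track(
    frames: Sequence[object],
    init_box: Box,
    regress: Callable[[object, object, Box, Box], Box],
) -> List[Box]:
    """Track one object through `frames`, starting from `init_box`.

    `regress` is a hypothetical stand-in for the trained network: given the
    previous frame, the current frame, the previous box, and the search
    region, it returns the new box in region-relative [0, 1] coordinates.
    """
    boxes = [init_box]
    for prev_frame, cur_frame in zip(frames, frames[1:]):
        region = search_region(boxes[-1])
        rel_box = regress(prev_frame, cur_frame, boxes[-1], region)
        boxes.append(to_image_coords(region, rel_box))
    return boxes
```

Because the loop only ever searches near the previous location, an object that disappears and reappears elsewhere in the image falls outside the search region, which is the occlusion limitation discussed next.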

While the preliminary work resulted in state-of-the-art generic object tracking, several limitations remain and are being addressed in this project. For example, the tracker is currently unable to track objects through large occlusions. The goal of this project is to extend the previous work so that the tracker can recover objects that have been occluded. Using reinforcement learning, the tracker will learn a search strategy for finding a previously occluded object once it reappears somewhere in the image. This will address a fundamental limitation of the previous approach and should lead to a large improvement in performance. Handling occlusions is also critical for tracking in most real-world applications.

Other extensions that the team hopes to explore include:

- Unsupervised learning to track

- Multistage learning for tracking

- Multi-hypothesis prediction

- Combining low-level with high-level visual information


Figure 1. Using a collection of videos and images with bounding box labels (but no class information), we train a neural network to track generic objects. Our network observes how objects move and outputs the resulting object motion, which is used for tracking.

| Principal investigators | Researchers | Themes |
|---|---|---|
| Pieter Abbeel | David Held | Deep Learning Autonomous Vehicles |

Benchmarking Deep Reinforcement Learning

Although deep reinforcement learning (RL) has started to have its share of success stories, it has proven difficult to quantify progress within the field itself, especially in the domain of continuous control tasks, which are typical in robotics. To bring transparency to what constitutes the state of the art in deep RL, the team is working to establish a benchmark for deep reinforcement learning.

This benchmark will consist of two components:

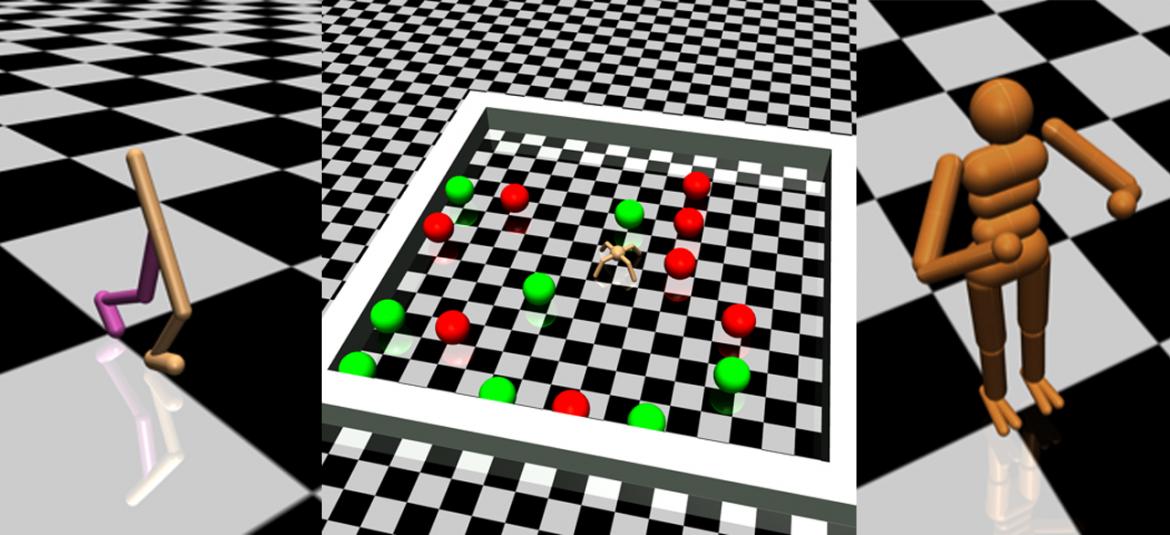

1. A rich set of simulated robotic control tasks (including driving tasks) in an easy-to-deploy form. Four categories of tasks will be included in this benchmark: (a) Basic tasks; (b) Locomotion / Driving tasks; (c) Tasks that require perception and/or memory; and (d) Tasks that require hierarchical decision-making.

2. Reference implementations of all prominent existing reinforcement learning algorithms, and an extensive evaluation of these algorithms on the benchmark. This will include an architecture for running distributed experiments (on Amazon EC2 and/or Google’s Computer Engine) that will allow for extensive, automatic hyper-parameter tuning, which is important for thorough and fair evaluations. Algorithms that will be included are: REINFORCE, Natural Policy Gradient (NPG), Trust Region Policy Optimization (TRPO), Proximal Policy Optimization (PPO), Deterministic Policy Gradient (DPG), Reward-Weighted Regression (RWR), and Relative Entropy Policy Search (REPS).

Figure 2. Illustrations of tasks implemented in the benchmark. Left: Walker. Middle: AntGather. Right: Humanoid.
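As an illustration of the simplest algorithm in the list above, the following is a minimal sketch of REINFORCE with a running-average baseline on a toy two-armed bandit. It is not the benchmark's reference implementation: the bandit task, the function names, and the settings (`steps`, `lr`, `noise_std`, `seed`) are all assumptions chosen to keep the example self-contained. The policy is a softmax over per-arm preferences, and each update moves the preferences along the gradient of the log-probability of the sampled action, scaled by the reward minus the baseline.

```python
import math
import random
from typing import List, Sequence


def softmax(prefs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over action preferences."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(
    arm_means: Sequence[float],
    steps: int = 3000,
    lr: float = 0.1,
    noise_std: float = 0.5,
    seed: int = 0,
) -> List[float]:
    """Run REINFORCE on a Gaussian bandit and return the final action probabilities."""
    rng = random.Random(seed)
    prefs = [0.0] * len(arm_means)
    baseline = 0.0  # running average of past rewards, used to reduce variance
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = rng.gauss(arm_means[action], noise_std)
        advantage = reward - baseline
        # For a softmax policy, d log pi(action) / d prefs[k]
        # equals 1[k == action] - probs[k].
        for k in range(len(prefs)):
            grad_log_pi = (1.0 if k == action else 0.0) - probs[k]
            prefs[k] += lr * advantage * grad_log_pi
        # Update the baseline after it has been used for this step.
        baseline += (reward - baseline) / t
    return softmax(prefs)


if __name__ == "__main__":
    # The second arm has the higher mean reward, so its probability should grow.
    print(reinforce_bandit([0.0, 1.0]))
```

A fair comparison across algorithms would repeat such runs over many seeds and hyperparameter settings, which is the role of the distributed tuning architecture described above.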

| Principal investigators | Researchers | Themes |
|---|---|---|
| Pieter Abbeel | Rocky Duan | Deep Learning Benchmarks |