Deep RL with Dexterous Hands and Tactile Sensing

ABOUT THE PROJECT

At a glance

To perform a range of complex and delicate manipulation tasks, humans rely on an intricate, interconnected system of sensing and actuation in their hands. Although no single component of this system is particularly precise, the redundancy and intelligent control of the human hand allow us to perform manipulation behaviors that remain far outside the capabilities of even our most advanced robots.

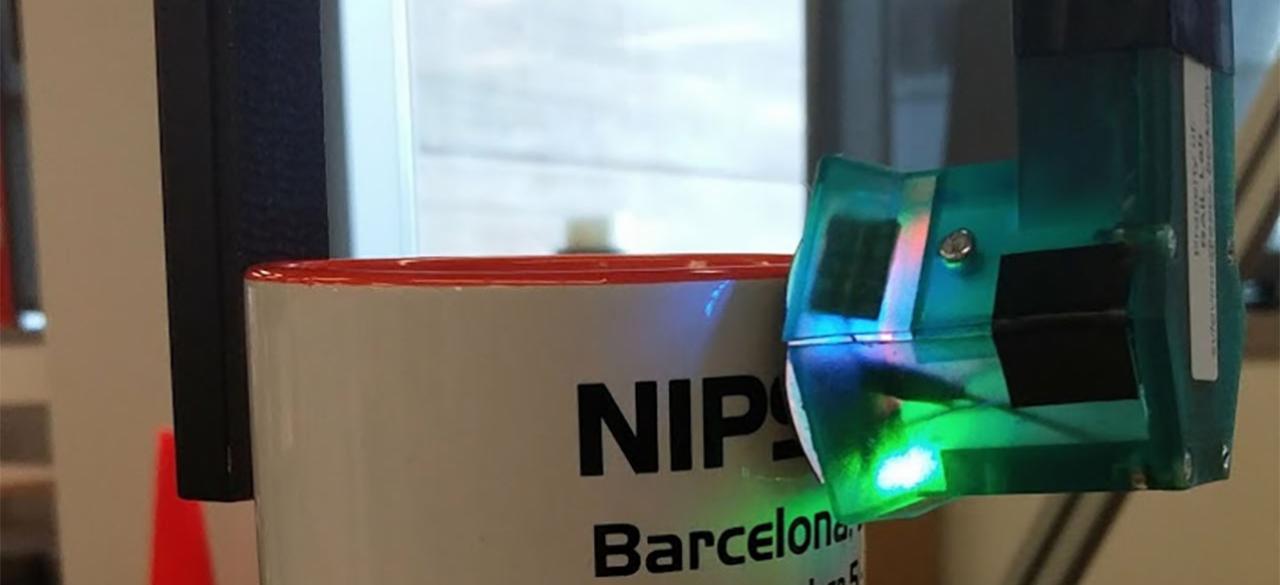

The focus of this project will be on developing deep reinforcement learning (deep RL) algorithms for controlling dexterous hands and for processing rich input from tactile sensors. To that end, we plan to equip our Sawyer robotic platform with a tactile sensor, and eventually a more dexterous multi-fingered hand, and to devise hierarchical deep RL methods that can learn to perform complex and delicate manipulation skills through a combination of Bayesian optimization and model-based learning.

During our research, we will use one or more Sawyer robot arms as the basis of our experimental validation. We will equip the Sawyer robot with tactile sensing and, once the tactile sensing experiments are successful, we will explore more dexterous hands. For tactile sensing, we are considering a number of sensors, including the BioTac sensor that has previously been used at UC Berkeley. We will develop novel convolutional neural network architectures for processing rich tactile signals from sensors such as the GelSight sensor. Convolutional networks are well suited to data structured as multidimensional arrays, such as images. Readings from tactile arrays such as the GelSight sensor are arranged in a 2D structure, making convolutional networks a natural starting point.

| principal investigators | researchers | themes |
|---|---|---|
| Sergey Levine | | Reinforcement Learning Algorithms |
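
To make the point about convolutional networks and 2D tactile readings concrete, here is a minimal, dependency-free sketch of a single convolutional filter sliding over a synthetic tactile frame. It is not the project's architecture and does not use real GelSight or BioTac data: the 6x6 frame size, the square contact patch, the averaging kernel, and the helper names `conv2d_valid`, `make_frame`, and `argmax2d` are all illustrative assumptions. The sketch shows one property that makes convolution a reasonable starting point for such data: when the same contact moves across the sensor, the filter's peak response moves with it.

```python
# Hypothetical illustration only: frame size, contact patch, and kernel
# are assumptions, not real tactile sensor data or a proposed architecture.


def conv2d_valid(frame, kernel):
    """Slide `kernel` over `frame` (no padding, stride 1).

    Like most deep learning libraries, this computes cross-correlation,
    i.e. the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(frame) - kh + 1
    out_w = len(frame[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += frame[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def make_frame(height, width, top, left, size=2, pressure=1.0):
    """Build a synthetic tactile frame containing one square contact patch."""
    frame = [[0.0] * width for _ in range(height)]
    for i in range(top, top + size):
        for j in range(left, left + size):
            frame[i][j] = pressure
    return frame


def argmax2d(grid):
    """Return (row, col) of the largest value in a 2D list."""
    best = max(
        (value, i, j)
        for i, row in enumerate(grid)
        for j, value in enumerate(row)
    )
    return best[1], best[2]


# A 2x2 averaging kernel: its response peaks where it fully covers the patch.
KERNEL = [[0.25, 0.25], [0.25, 0.25]]

frame_a = make_frame(6, 6, top=1, left=1)
frame_b = make_frame(6, 6, top=3, left=2)  # same contact, shifted on the sensor

response_a = conv2d_valid(frame_a, KERNEL)
response_b = conv2d_valid(frame_b, KERNEL)

peak_a = argmax2d(response_a)
peak_b = argmax2d(response_b)
print(peak_a, peak_b)
```

Running the sketch prints `(1, 1) (3, 2)`: the peak of the response map follows the contact patch from one location to the other, even though the filter weights are shared across the whole frame. A learned network would stack many such filters with nonlinearities, but the weight sharing shown here is the part that matches the grid layout of tactile arrays.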