Design Space Exploration for Deep Neural Nets for Advanced Driver Assistance Systems

ABOUT THE PROJECT

At a glance

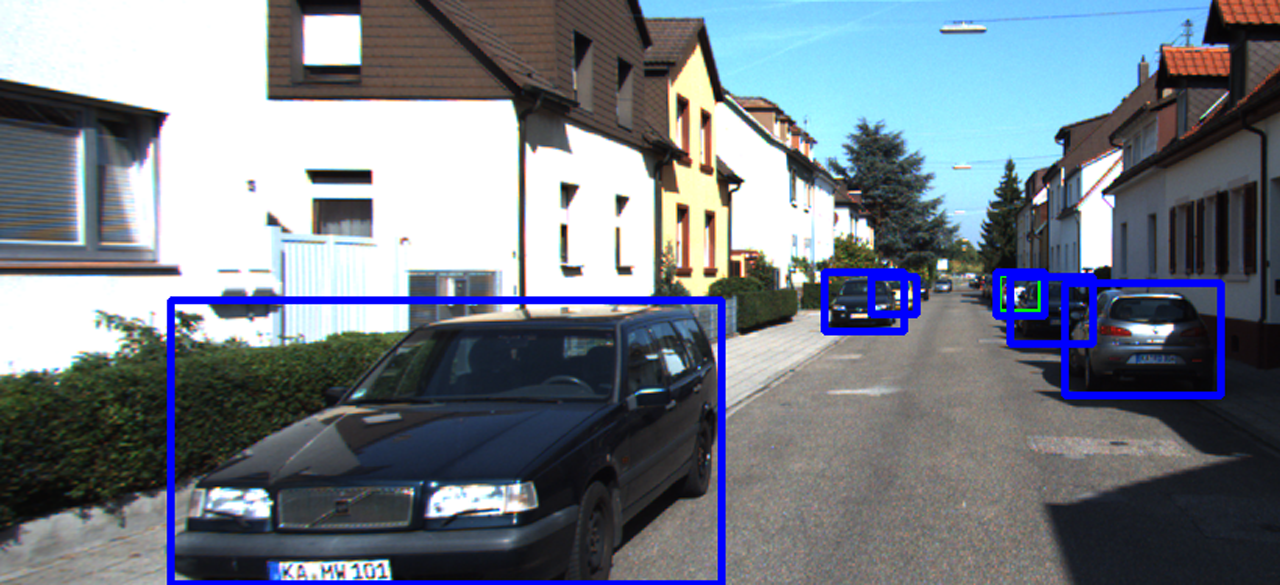

Deep Neural Nets (DNNs) have recently achieved the highest accuracy in a number of machine learning and computer vision challenges. Specifically relevant to Advanced Driver Assistance Systems (ADAS) applications is the dramatic increase in the accuracy of image object detection and localization. At all levels of automation (i.e., NHTSA Levels 1-4), ADAS applications require multi-task DNNs that are able to detect lanes, cars, pedestrians, road signs, etc. Given a training data set, natural questions arise: What DNN architecture (convolutional, fully connected, LSTM, etc.) is best for a given task? What network topology, optimization method, and hyperparameters are best suited to the problem? Answering these questions will first require system development effort in multiple directions.

Moreover, ADAS applications bring new opportunities as well as challenges to the DNN-train/DNN-deploy cycle that are not typically addressed in academic research. First, autonomous vehicles with six or more cameras and other sensors have the potential to create an unprecedented amount of training data. Second, this training data must be gathered wirelessly from diverse autonomous vehicles. Third, despite the high volumes of data, the DNN training must be done very quickly. For example, training data associated with obstacle avoidance (i.e., accident prevention) should be integrated and broadcast to autonomous vehicles as quickly as possible. From both formal and informal discussions with major companies and agencies involved with autonomous vehicles, the team understands that opinions differ widely on the need for continuous training updates. Nevertheless, the team believes that by addressing the most challenging scenario, continuous training, the requirements of other, less demanding training scenarios can also be met.

With this background, the team will:

1. Perform Design-Space Exploration for DNN architectures/models and hyperparameters that are best suited for real-world ADAS applications;

2. Perform Design-Space Exploration for DNN architectures that have a reduced number of weights. Fewer weights will result in DNN architectures that are faster to train (B2 above) and that minimize communication requirements from client to cloud (B3 above);

3. Investigate weight/data compression techniques that further reduce the data required to represent weights; and

4. Investigate client/cloud computational trade-offs.
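As a rough illustration of how weight count and weight precision interact in such an exploration, the sketch below enumerates a tiny design space and reports the number of weights and the bytes needed to transmit them. Everything in it is an assumption made for illustration, not the team's method: the design space (`KERNELS`, `WIDTHS`, `BITS`), the plain stack of convolution layers, and the helper names are all hypothetical, and the sketch deliberately ignores accuracy, which a real exploration would have to measure by training each candidate.

```python
from itertools import product


def conv_weights(k, c_in, c_out):
    """Weights in a k x k convolution layer, with one bias per output channel."""
    return k * k * c_in * c_out + c_out


def model_weights(kernel, widths, in_channels=3):
    """Total weights of a plain stack of conv layers with the given output widths."""
    total, c_in = 0, in_channels
    for c_out in widths:
        total += conv_weights(kernel, c_in, c_out)
        c_in = c_out
    return total


def transfer_bytes(n_weights, bits_per_weight):
    """Bytes needed to send the weights at a given precision (no entropy coding)."""
    return (n_weights * bits_per_weight + 7) // 8


# Hypothetical design space: kernel size x channel widths x weight precision.
KERNELS = (1, 3)
WIDTHS = ((16, 32), (64, 128))
BITS = (32, 8)


def explore():
    """Enumerate every design point and sort by bytes to transmit, smallest first."""
    points = []
    for kernel, widths, bits in product(KERNELS, WIDTHS, BITS):
        n = model_weights(kernel, widths)
        points.append({
            "kernel": kernel,
            "widths": widths,
            "bits": bits,
            "weights": n,
            "bytes": transfer_bytes(n, bits),
        })
    return sorted(points, key=lambda p: p["bytes"])


if __name__ == "__main__":
    for point in explore():
        print(point)
```

Sorting by transfer size only ranks candidates by communication cost. In practice each point would also carry a measured accuracy and training time, and the exploration would look for the trade-off frontier rather than the single smallest model.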

| Principal investigator | Researchers | Themes |
|---|---|---|
| Kurt Keutzer | Khalid Ashraf, Forrest Iandola, Matt Moskewicz | Deep Neural Nets, Autonomous Vehicles |