Embedded In-Car AI Assistant: Efficient End-to-End Speech Recognition and Natural Language Understanding for Command Recognition at the Edge

ABOUT THIS PROJECT

At a glance

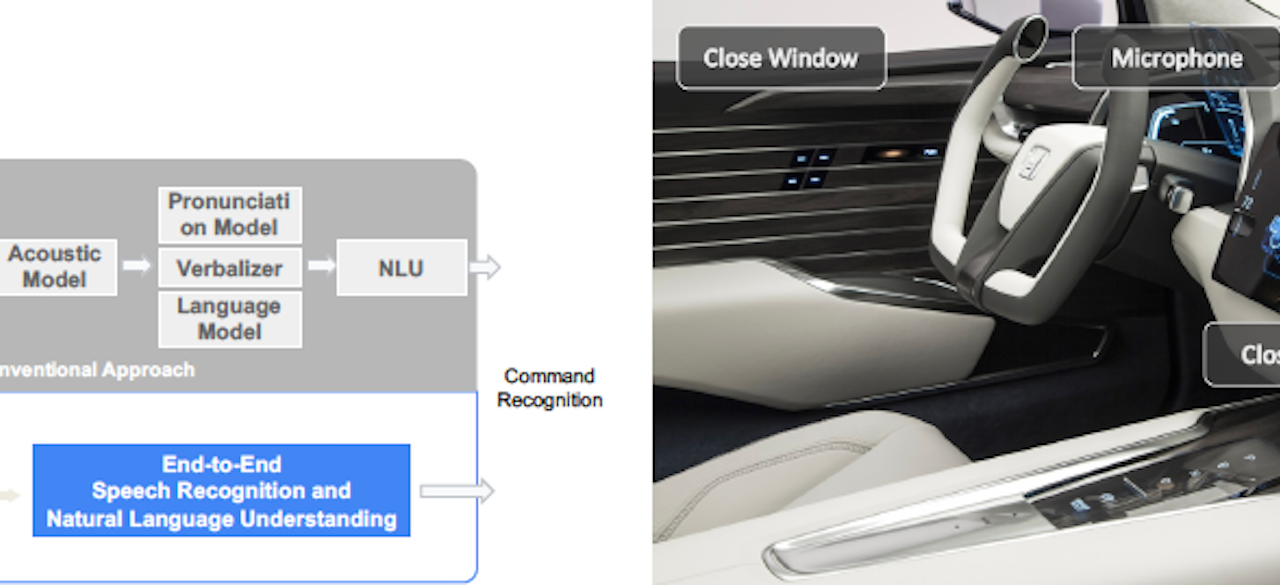

Recent advances in Deep Neural Network (DNN) based Natural Language Understanding (NLU) have significantly improved smart assistants. These systems provide a seamless user experience and are now widely adopted in mobile phones and smart speakers. These successes motivate applying NLU to more safety-critical settings such as cars, where it is crucial that the driver stay focused on the road rather than deal with complex infotainment interfaces. However, there are many barriers to deploying an accurate AI assistant in cars. First, the technology currently deployed in smart speakers requires a constant cloud connection, because of the high computational cost of processing the user's audio signal and then running NLU to determine the user's intent or command. Second, the current state-of-the-art NLU models are transformers with large model sizes, and their memory requirements are prohibitive for the memory budget of edge devices.

To address these challenges, we plan to pursue a multi-faceted approach to design an end-to-end system that can be deployed efficiently at the edge, within the hardware budget of typical autonomous driving cars. In particular, we will pursue the following directions. First, we will design and train an end-to-end model that takes the user's acoustic data as input and performs integrated automatic speech recognition (ASR) and NLU in a single pass. Compared with conventional two-pass solutions (ASR followed by a separate NLU model), this can reduce the model footprint by orders of magnitude and improve latency, because the domain-specific commands used in a car span a much smaller range than the complex intents that can appear in general spoken language. Second, we will further optimize this prototype through differentiable Neural Architecture Search (NAS), followed by compression (quantization, hierarchical pruning, and distillation).
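The memory argument above can be made concrete with a small sketch. Assuming a generic per-tensor symmetric uniform quantizer (not the specific schemes used in Q-BERT or I-BERT), the code below maps floating-point weights to signed 8-bit integers and estimates weight-storage footprint. The helper names and the 110M-parameter figure (roughly the size of BERT-base) are illustrative assumptions, not numbers from this project.

```python
from typing import List, Tuple


def quantize_symmetric(weights: List[float], num_bits: int = 8) -> Tuple[List[int], float]:
    """Hypothetical helper: per-tensor symmetric uniform quantization.

    Maps each weight w to an integer q in [-qmax, qmax] with w ~= q * scale.
    """
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8-bit signed integers
    max_abs = max(abs(w) for w in weights)
    # A single scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level, then clamp to the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate floating-point weights from integers and the scale."""
    return [v * scale for v in q]


def footprint_mb(num_params: int, bits: int) -> float:
    """Weight-storage size in megabytes (1 MB = 1e6 bytes), ignoring overheads."""
    return num_params * bits / 8 / 1e6


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0, 0.9]
    q, scale = quantize_symmetric(weights)
    print(q, scale)
    # Assumed model size for illustration only (roughly BERT-base).
    print(footprint_mb(110_000_000, 32), footprint_mb(110_000_000, 8))
```

Under these assumptions, 8-bit weights shrink a 110M-parameter model's weight storage from about 440 MB (FP32) to about 110 MB, a 4× reduction; activations, embeddings, and runtime buffers are not counted, and pruning or distillation would reduce the footprint further.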
We will also apply domain-specific biasing that takes the user's context into account (for example, names in the contact list) to increase detection accuracy. Furthermore, we will co-design the solution for a target edge hardware platform used in autonomous driving. We will also deploy the model directly on that hardware and provide an end-to-end solution that meets the constraints of the edge (i.e., latency, power, and memory footprint). This project builds upon our recent work on efficient NLU models, in particular Q-BERT~\cite{shen2020q}, I-BERT~\cite{kim2021bert,ibertcode}, and SqueezeBERT~\cite{iandola2020squeezebert}, as well as our work on NAS, including Differentiable NAS~\cite{wu2018mixed,wu2019fbnet,fbnetcode}.
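One simple way to picture context-aware biasing is n-best rescoring: hypotheses that mention a phrase from the user's context (here, contact names) receive a score bonus before the best one is chosen. This is a hypothetical sketch; the function name, the fixed `bonus` value, and the n-best interface are assumptions, and the project's actual biasing mechanism may instead operate inside the model's decoder.

```python
from typing import List, Tuple


def rescore_with_context(
    nbest: List[Tuple[str, float]],
    context_phrases: List[str],
    bonus: float = 2.0,
) -> List[Tuple[str, float]]:
    """Hypothetical helper: boost hypotheses that contain context phrases.

    nbest: (hypothesis text, log-score) pairs from a recognizer.
    context_phrases: user-specific phrases, e.g. contact-list names.
    Returns the hypotheses re-sorted by adjusted score, best first.
    """
    phrases = [p.lower() for p in context_phrases]
    rescored = []
    for text, score in nbest:
        # Pad with spaces so matches only occur on whole-word boundaries
        # (punctuation is not handled in this simplified version).
        padded = f" {text.lower()} "
        hits = sum(1 for p in phrases if f" {p} " in padded)
        rescored.append((text, score + bonus * hits))
    return sorted(rescored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    nbest = [("call and rea", -3.1), ("call andrea", -3.4)]
    contacts = ["Andrea", "Bob"]
    best = rescore_with_context(nbest, contacts)
    print(best[0][0])  # prints "call andrea"
```

Without the context list, the acoustically preferred but wrong "call and rea" would win; the bonus lets the contact name overturn a small score gap, while a large gap still favors the recognizer's original ranking.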

| principal investigators | researchers | themes |
|---|---|---|
| | Sehoon Kim, Zhen Dong, Sheng Shen | Natural Language Understanding, Automatic Speech Recognition, Neural Architecture Search, Edge, Embedded |

This project laid the groundwork for: In-Car AI Assistant: Efficient End-to-End Conversational AI System.