Explainable Deep Vehicle Control

ABOUT THE PROJECT

At a glance

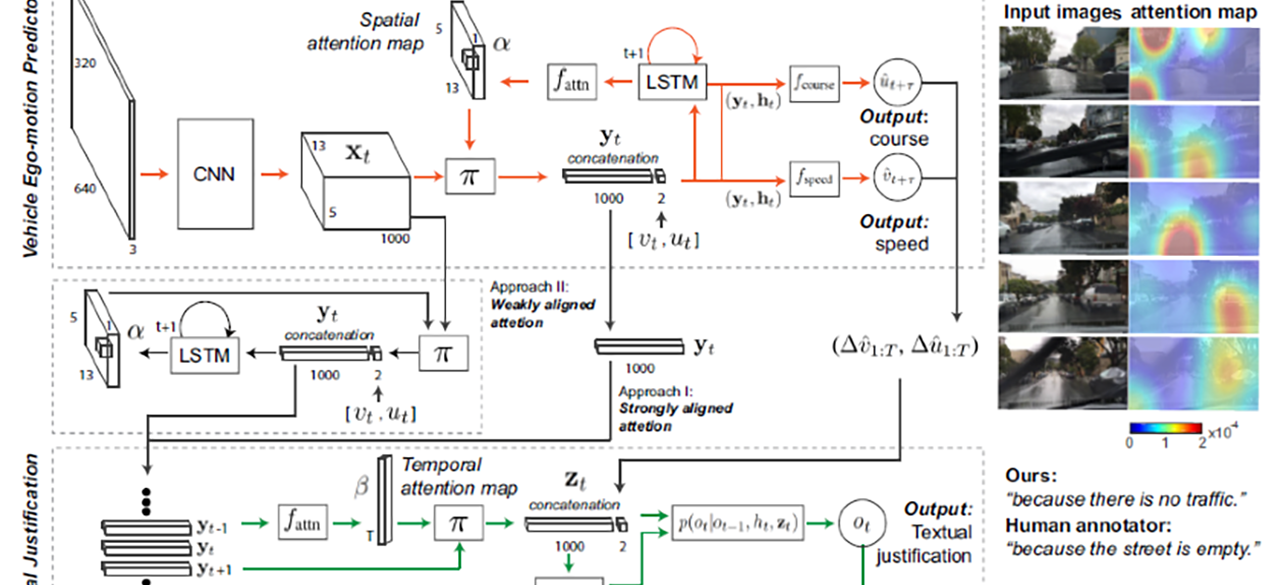

This project focuses on generating human-interpretable explanations for self-driving vehicles. It builds on our work that appeared at ICCV 2017, NIPS 2017 and ECCV 2018. Deep networks have shown promise for end-to-end control of self-driving vehicles, but such networks are opaque: they contain no interpretable states or labels, and their representations are fully distributed as sets of activations. Explanations can be either rationalizations, which are not grounded in the system’s behavior, or causal explanations, which are based on the system’s internal state and ideally represent causal relationships between the system’s input and its behavior. Our work focuses on causal explanations, to help users better understand and anticipate the system’s behavior. In this project, we will develop attention models based on semantic segmentation. These should be easier for users to interpret visually and should yield more accurate textual explanations.

| principal investigators | researchers | themes |
|---|---|---|
| John Canny | | Deep learning, driving models, explainable models, interpretable models |