Hierarchical Reinforcement Learning & Abstraction Discovery

ABOUT THE PROJECT

At a glance

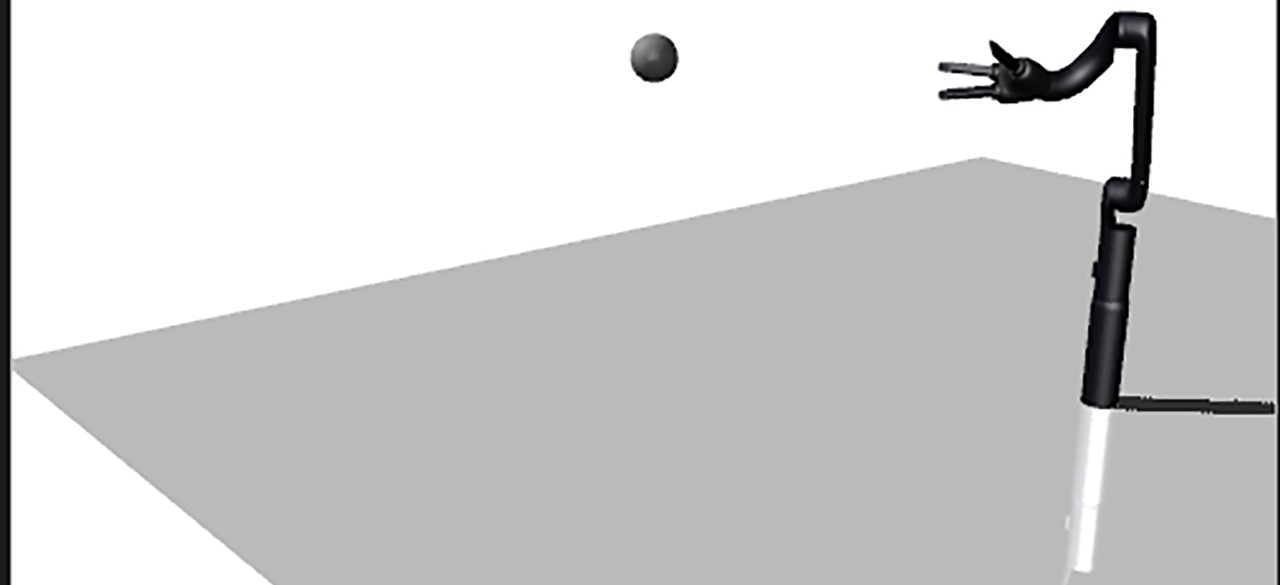

Humans perceive and organize the world into hierarchical and compositional structures, leading naturally to abstractions and high-level reasoning. For example, when we consider a complex manipulation task, such as cooking a meal, we understand that individual constituent steps of this task (e.g. cutting vegetables, stirring a pot) are abstract steps that can themselves be executed in a number of different ways, and we can seamlessly substitute a different low-level primitive (e.g. cutting with scissors instead of a knife) without disrupting the high-level plan. Similarly, when driving, we can decouple the physical act of performing a maneuver from the higher-level act of navigation, so that we can reuse our navigation skills even when piloting a very different vehicle. Furthermore, when we seek to learn new behaviors, we can acquire a skill more quickly by attempting to reuse previously learned low-level skills, instead of directly attempting random low-level motions. Traditionally, such hierarchical structure has been designed manually, by attaching labels to skills and objects in the world.

The aim of this project is to develop hierarchical reinforcement learning methods that can learn complex multi-stage skills without manual specification of hierarchies or intermediate steps. The goal is to create reusable components and modules that can be used to acquire new tasks more quickly, learn complex multi-stage behaviors, and achieve improved generalization in complex unstructured environments. We will focus on three core approaches: extracting hierarchies through architecture design and regularization, extracting hierarchies through affordances, and extracting hierarchies from natural language descriptions. By tackling the problem of hierarchies from several directions, we will mitigate the risks associated with such an ambitious project and maximize the chances of discovering an effective hierarchical RL technique.

| principal investigators | researchers | themes |
|---|---|---|
| Sergey Levine | | hierarchical reinforcement learning |