Improving the Scaling of Deep Learning Networks by Characterizing and Exploiting Soft Convexity

ABOUT THE PROJECT

At a glance

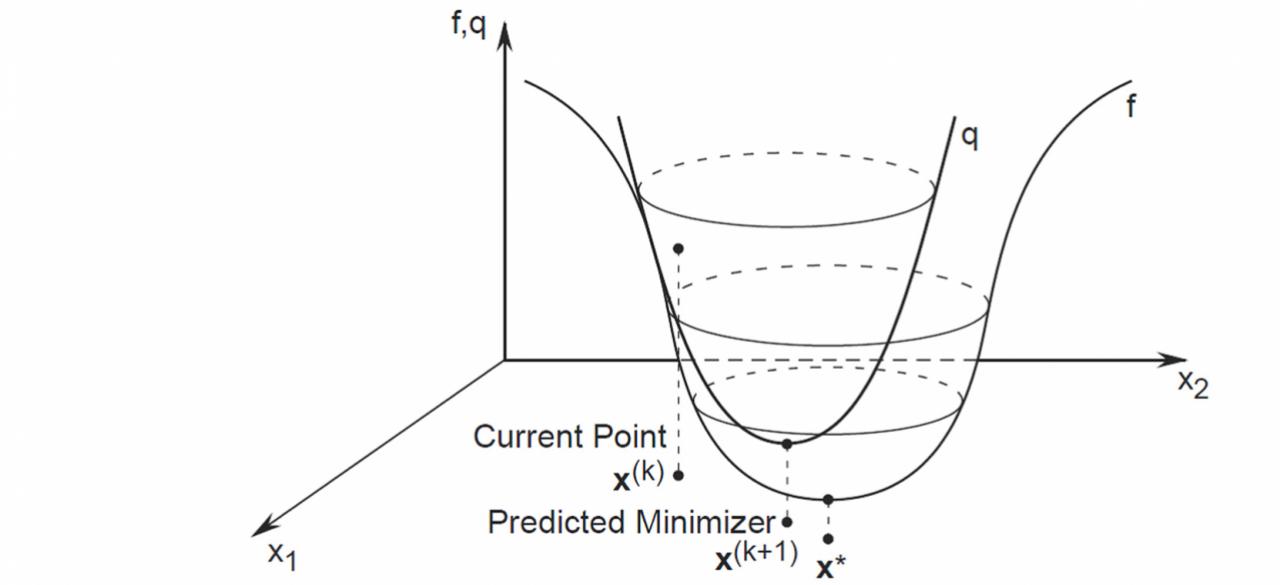

Although deep neural networks (DNNs) have achieved impressive performance on several non-trivial machine learning (ML) tasks, many challenges remain. One class of challenges concerns understanding how and why DNNs obtain their improved performance, given that they do not exhibit properties, such as convexity, that are common among ML methods. A second class concerns using this understanding to develop DNN methods that have better statistical/inferential properties and/or better algorithmic/running-time properties.

In this work, the team will pursue two related directions. The first is to develop a model that makes theoretically precise certain intuitions that might explain the performance of DNNs. The basic approach will be to use ideas from disordered systems theory and statistical mechanics to make precise the intuition that DNNs, because of all their "knobs" and how those knobs interact through multiple network layers, are "softly convex" in such a way that the "soft curvature" can be controlled by varying the knobs, e.g., the number of layers, filter dimensions, or dropout rate. The second is to use this soft convexity model to develop implementations with better scaling properties on modern computer architectures. The basic idea is that, because the knobs interact in a way that leads to soft convexity of the penalty function landscape, if one adjusts some knobs to explore tradeoffs such as communication-computation tradeoffs, one can adjust other knobs to compensate. For example, one could do so in such a way that over-fitting does not occur and prediction quality is not materially sacrificed, as it would be in typical non-DNN-based algorithms.

The main goal of this project is to improve the scaling properties of DNNs through a non-traditional approach. In particular, the team will use ideas from statistical mechanics to define a notion of soft convexity, the soft curvature of which can be controlled by adjusting various knobs that define the DNN. The team intends to make this precise and to develop proof-of-principle implementations on image, video, and other related data to illustrate the benefits of this approach.

| Principal investigators | Researchers | Themes |
|---|---|---|
| Michael Mahoney | Peng Xu, Zhewei Yao, Shusen Wang | Deep Neural Networks |