Interpretable and Data-Efficient Driving Behavior Generation via Deep Generative Probabilistic and Logic Models

ABOUT THE PROJECT

At a glance

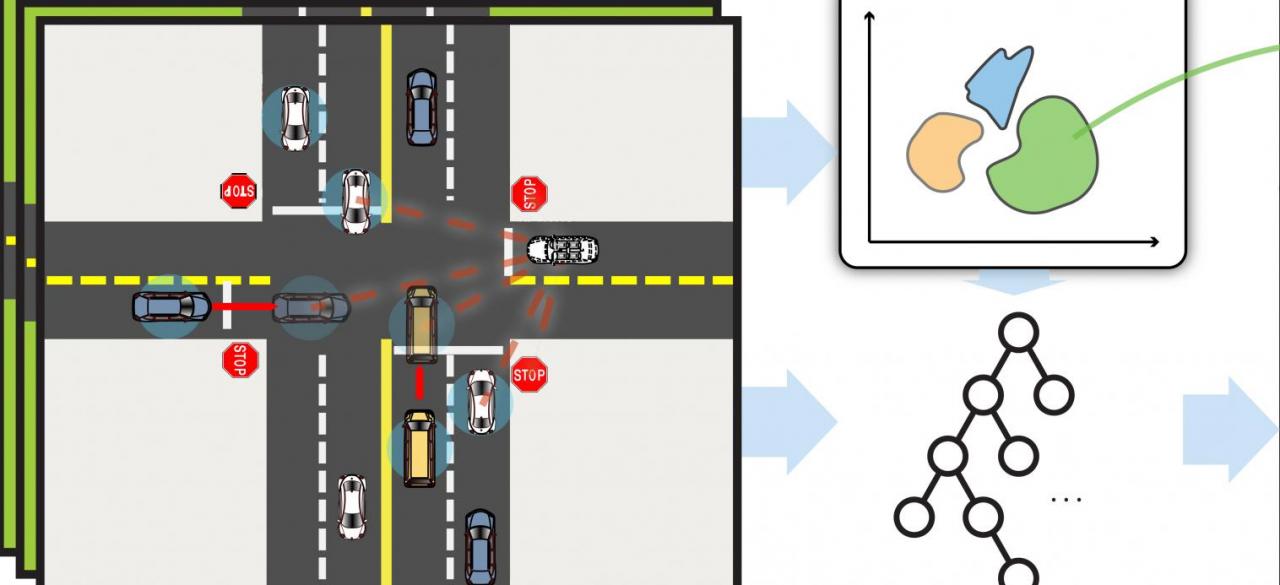

Deep neural networks (DNNs) have yielded remarkable results in autonomous driving. However, when applied to safety-critical modules such as behavior generation for prediction and decision-making, DNNs lack interpretability and causal structure, and it is hard to incorporate domain knowledge into them. DNNs also require huge amounts of data and generalize poorly to unfamiliar scenarios, especially corner cases. By contrast, generative probabilistic models and logic models are inherently interpretable and can represent causality, domain knowledge, and uncertainty. However, most of them do not scale well, and their representation capacity is relatively low. In this project, our goal is to design a hybrid framework that exploits the advantages of DNNs, generative probabilistic models, and logic models to enable interpretable and data-efficient behavior generation. Within this framework, we propose a deep logic modelling method that generates driving behaviors for prediction and decision-making using a low-dimensional representation of the dynamic world obtained from generative probabilistic models and observations.

| Principal investigators | Researchers | Themes |
|---|---|---|
| Masayoshi Tomizuka | Hengbo Ma, Jiachen Li and Yeping Hu | driving behavior, deep generative model, logic model |