Learning to Compile Mobile and Embedded Vision Code with Halide

ABOUT THE PROJECT

At a glance

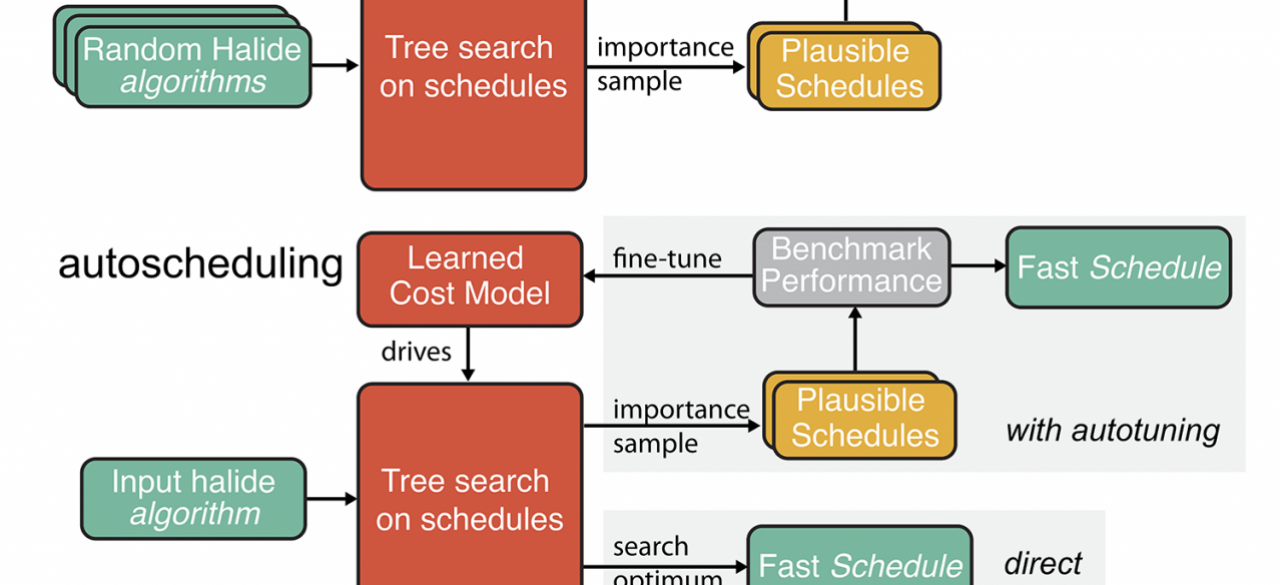

We propose a compiler, built on the Halide compiler stack, that automatically optimizes mobile and embedded vision models. The need for efficiency is driving mobile vision architectures to increasingly resemble the workload characteristics of traditional image processing pipelines (streaming execution of large pipelines of relatively inexpensive individual operations) rather than the extreme per-layer arithmetic intensity of traditional convolutional neural networks. Traditional approaches to optimizing ML implementations, which rely on libraries of individually tuned layers, cannot achieve peak performance on these new workloads, because doing so requires global fusion and locality optimization across layers. We are instead building an automatic optimizer for these computations in Halide. It combines tree search over a large space of optimization choices with a learned cost model trained to predict the performance of diverse vision and inference computations on real hardware targets (ARM, x86, GPUs, DSPs, programmable image processors, ML accelerators).
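To make the idea of search guided by a cost model concrete, here is a minimal sketch in Python. It is not the project's optimizer and does not use Halide's API. It uses beam search as one simple form of tree search, and the choice names (`inline`, `tile`, `root`), the function `toy_cost`, and all of its numbers are hypothetical stand-ins for a real scheduling space and a trained model.

```python
# Toy illustration only: beam search over per-stage scheduling choices,
# ranked by a stand-in cost function. All names and numbers are assumptions.

CHOICES = ("inline", "tile", "root")  # hypothetical per-stage options


def toy_cost(schedule):
    """Stand-in for a learned cost model: predicts a cost (lower is better).

    A real model would be trained on measured run times; these values are
    invented purely so the search has something to rank.
    """
    cost = 0.0
    for stage_index, choice in enumerate(schedule):
        if choice == "inline":
            # Pretend recomputation gets more expensive deeper in the pipeline.
            cost += 1.0 + 0.75 * stage_index
        elif choice == "tile":
            # Pretend tiling has a fixed, moderate cost.
            cost += 1.5
        else:  # "root"
            # Pretend materializing a full intermediate costs memory traffic.
            cost += 3.0
    return cost


def beam_search(num_stages, cost_fn, beam_width=2):
    """Extend partial schedules one stage at a time, keeping the best few."""
    beam = [()]  # start from the empty schedule
    for _ in range(num_stages):
        candidates = [partial + (choice,) for partial in beam for choice in CHOICES]
        candidates.sort(key=cost_fn)      # rank by predicted cost
        beam = candidates[:beam_width]    # prune to the beam width
    return beam[0]


best = beam_search(num_stages=4, cost_fn=toy_cost)
print(best, toy_cost(best))
```

The point of the sketch is the division of labor: the search enumerates and prunes candidate schedules, while the cost function stands in for running each candidate on hardware. In a real system the quality of the result depends on how well the learned model predicts actual performance.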

| Principal investigators | Researchers | Themes |
|---|---|---|
| Jonathan Ragan-Kelley | | Compiling neural networks, Efficient embedded inference, Halide |