Learning Urban Driving Policies from Online Traffic Webcams

ABOUT THE PROJECT

At a glance

Generating complex multi-agent driving behavior in urban intersections is challenging as driving can be difficult to model and program explicitly. We are interested in Deep Learning from Demonstrations from fallible human supervisors and the associated sample complexity for Deep learning models. As a source for realistic data, we propose to use online traffic camera streams to generate large training sets of driver trajectories to facilitate rapid prototyping of model-based planners and Deep Learning for driving policies.

O1: Extracting Trajectory Data from Traffic Camera Streams

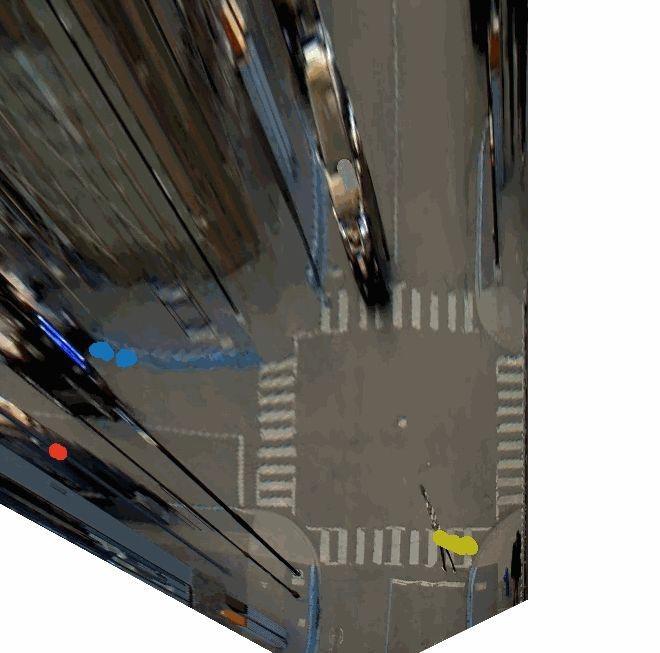

There are hundreds of online publicly-available traffic cam video streams such as the one above from Canmore, Alberta, which offers 24 live stream video of an intersection on YouTube. We will download the streaming data and use real-time computer vision tools to extract automobile and pedestrian trajectories. We have developed a prototype pipeline to extract such data using SSD [2] to extract the 2D position of the pedestrians and cars in the scene. We propose to leverage homography techniques and knowledge of the road dimensions to extract a top-down 2D perspective of the intersection as illustrated below.

Fig 2. Video of Extracted Trajectories

O2: Learn Reward Functions for Driving Behaviors

O1 will provide thousands of hours of trajectory data of multi-driver behavior at intersections. We will then use our first-order simulator with RRT (rapidly-exploring random trees) in a motion planning library such as OMPL, and apply techniques from Inverse Reinforcement Learning [3] to learn a sequence of reward functions such that the associated motion planner generates trajectories similar to those in the training set. Key challenges in this objective will be dealing with the noise associated with the extracted trajectories and registration of the simulator to the physical world.

O3: Compiling Driving Agents to Neural Networks with DART.

We expect that O2 will produce realistic driving behaviors. However because the results use an explicit multi-agent planning algorithm (RRT) algorithm they will be computationally slow. In O3 we will train CNN networks and deep policies leveraging our recent results with DART[4]: Disturbances to Augment Robot Trajectories. The potential advantage of training neural network policies is that they can be executed in near constant time to enable fast simulation of multi-driver behavior at traffic intersections.

| PRINCIPAL INVESTIGATORS | RESEARCHERS | THEMES |

|---|---|---|

| Ken Goldberg | Michael Laskey | webcams, urban driving dataset |