Meta Neural Architecture Search For Computer Vision

ABOUT THIS PROJECT

At a glance

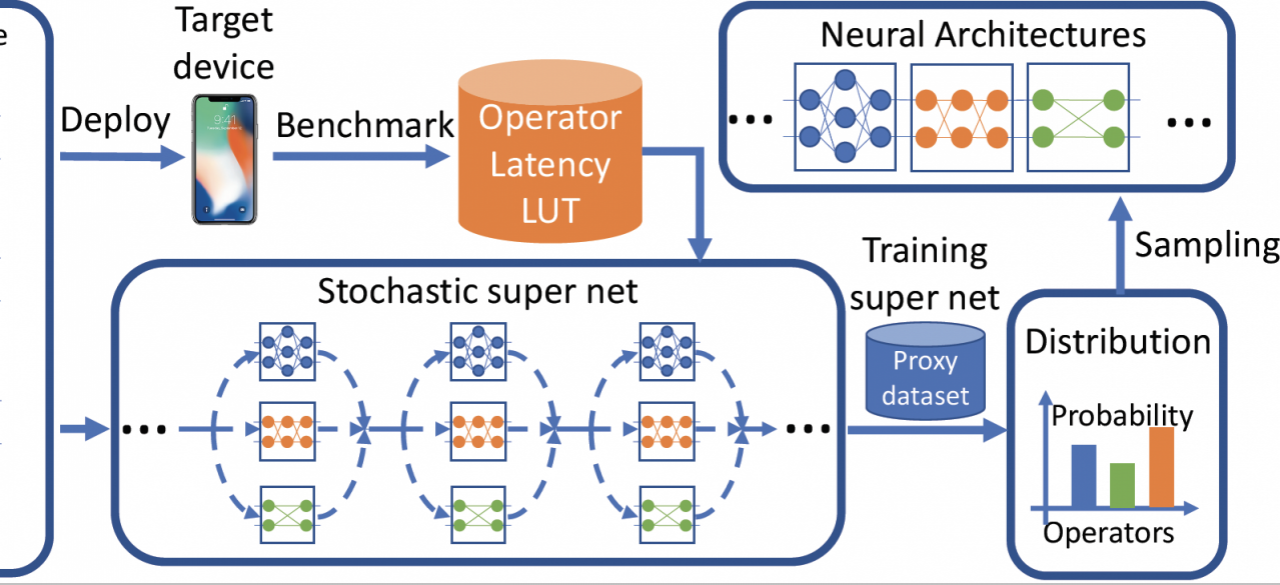

Many state-of-the-art algorithms for computer vision tasks such as classification, object detection, and semantic segmentation are based on deep neural networks (DNNs). These tasks are critical in autonomous driving, where deployment requires highly efficient DNN architectures. Neural architecture search (NAS) algorithms aim to automatically find DNN architectures suited to these tasks and deployment environments, for example by taking target hardware constraints into account. In this work, we propose to address two fundamental problems in applying NAS to autonomous driving systems: (i) transferring existing NAS to new domains and tasks with less labeled data, and (ii) applying NAS simultaneously to multi-task and multi-domain settings. To address these problems, we propose to research and develop Meta Neural Architecture Search (M-NAS), which aims to learn a task-agnostic representation that improves architecture search efficiency across a large number of tasks. The key idea of M-NAS is to treat a "super-network" as a meta architecture, in which each sub-network is viewed as a component of a task- or data-specific architecture, and then to design a meta-search strategy that finds the optimal architecture for each new task and data domain. In this formulation, the meta-search strategy can be used to simultaneously train and search a common architecture for multi-task and multi-domain environments, and it also provides a search strategy for additional training on new tasks and data domains.
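To make the super-network and meta-search idea more concrete, here is a minimal toy sketch in plain Python. It is not the project's method. It assumes a hypothetical search space (`CANDIDATE_OPS`, `NUM_LAYERS`), a stand-in reward (`toy_reward`) in place of real validation accuracy or latency, a REINFORCE-style update for per-task search, and a Reptile-style interpolation for the shared, task-agnostic architecture parameters. All names are illustrative.

```python
import math
import random

# Hypothetical search space: every layer of the super-network offers these ops.
CANDIDATE_OPS = ["conv3x3", "conv5x5", "skip"]
NUM_LAYERS = 4


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_subnetwork(arch_logits, rng):
    """Pick one op index per layer; the result is one sub-network."""
    ops = range(len(CANDIDATE_OPS))
    return [rng.choices(ops, weights=softmax(layer))[0] for layer in arch_logits]


def toy_reward(subnet, task_preference):
    """Stand-in for task performance: fraction of layers using the preferred op."""
    hits = sum(1 for op, pref in zip(subnet, task_preference) if op == pref)
    return hits / len(subnet)


def adapt(arch_logits, task_preference, rng, steps=20, lr=0.5):
    """Per-task search: REINFORCE-style update of a copy of the logits."""
    logits = [list(layer) for layer in arch_logits]
    baseline = 0.0
    for _ in range(steps):
        subnet = sample_subnetwork(logits, rng)
        reward = toy_reward(subnet, task_preference)
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        for layer, chosen in zip(logits, subnet):
            probs = softmax(layer)
            for k in range(len(layer)):
                # Gradient of log p(chosen) with respect to logit k.
                grad = (1.0 if k == chosen else 0.0) - probs[k]
                layer[k] += lr * advantage * grad
    return logits


def meta_search(tasks, rng, meta_rounds=20, meta_lr=0.5):
    """Outer loop: move shared logits toward each task's adapted logits."""
    meta = [[0.0] * len(CANDIDATE_OPS) for _ in range(NUM_LAYERS)]
    for _ in range(meta_rounds):
        for pref in tasks:
            adapted = adapt(meta, pref, rng)
            for l in range(NUM_LAYERS):
                for k in range(len(CANDIDATE_OPS)):
                    meta[l][k] += meta_lr * (adapted[l][k] - meta[l][k])
    return meta


def best_subnetwork(arch_logits):
    """Most likely op index per layer under the given logits."""
    return [max(range(len(layer)), key=layer.__getitem__) for layer in arch_logits]


if __name__ == "__main__":
    rng = random.Random(0)
    # Two hypothetical training tasks that share preferences on the first layers.
    train_tasks = [[0, 0, 1, 2], [0, 0, 2, 1]]
    meta_logits = meta_search(train_tasks, rng)
    # A new task starts its search from the meta logits instead of from scratch.
    new_task = [0, 0, 1, 1]
    adapted = adapt(meta_logits, new_task, rng, steps=50)
    print([CANDIDATE_OPS[i] for i in best_subnetwork(adapted)])
```

In this sketch the shared logits play the role of the task-agnostic representation: layers on which the training tasks agree end up with a strong prior, so a new task only needs to search over the remaining choices. A real system would replace `toy_reward` with trained sub-network evaluation under hardware constraints.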

| principal investigators | researchers | themes |
|---|---|---|
| Kurt Keutzer | | neural architecture search (NAS), meta learning, multi-task NAS, efficient deep neural networks, expanded deep neural network search spaces |

This project builds on the work of the project "Unified Neural Architecture Search Framework for Computer Vision".