Model-Based Reinforcement Learning

ABOUT THE PROJECT

At a glance

Motivation: In the past decade, there has been rapid progress in reinforcement learning (RL) on many difficult decision-making problems, including learning to play Atari games from pixels [1, 2], mastering the ancient board game of Go [3], and beating world-class professional players at one of the most popular online games, Dota 2 (1v1) [4]. Part of this success is due to the computational ability to generate trillions of training samples in simulation. However, the data needs of model-free RL methods are well beyond what is practical in physical real-world applications such as robotics. One way to extract more information from the data is to follow a model-based RL approach instead. While model-based RL has yet to be shown to be as capable as model-free RL, it holds the promise of being far more data-efficient than its model-free counterparts [5, 6, 7, 8].

Goal: Enable model-based RL to learn policies that are as good as the ones learned with model-free RL, while requiring less real-world interaction.

Background, Preliminary Work and Plans:

Model-based RL iterates three main steps: collecting data, fitting a model, and optimizing a policy. This process is repeated until the task is solved. When applying this framework, however, the model tends to be inaccurate (or even blatantly wrong) where data is sparse. Naively optimizing the policy under the fitted model lets the policy exploit mismatches between the fitted model and the real world, which in turn results in policies that perform poorly in the real world. This problem is called model bias [6], and it is a fundamental reason why current model-based RL methods end up finding worse policies than model-free methods.
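The three-step loop can be sketched on a toy problem. This is a minimal illustration, not the project's method: it assumes a hypothetical 1-D linear "real world" `s' = 0.9*s + 0.5*u + noise`, a linear dynamics model fitted by least squares in place of a neural network, and a linear feedback policy `u = -k*s` optimized by grid search under the fitted model. All function names and constants are illustrative assumptions.

```python
import random

# Hypothetical 1-D "real world": s' = A*s + B*u + small noise.
# The learner never reads A_TRUE or B_TRUE directly; it only sees transitions.
A_TRUE, B_TRUE = 0.9, 0.5


def real_step(s, u, rng):
    return A_TRUE * s + B_TRUE * u + rng.gauss(0.0, 0.01)


def collect_data(k, rng, episodes=5, horizon=20, explore=0.5):
    """Step 1: run the policy u = -k*s (plus exploration noise) in the real world."""
    data = []
    for _ in range(episodes):
        s = rng.uniform(-1.0, 1.0)
        for _ in range(horizon):
            u = -k * s + rng.gauss(0.0, explore)
            s_next = real_step(s, u, rng)
            data.append((s, u, s_next))
            s = s_next
    return data


def fit_model(data):
    """Step 2: least-squares fit of s' ~ a*s + b*u via the 2x2 normal equations."""
    sss = sum(s * s for s, _, _ in data)
    suu = sum(u * u for _, u, _ in data)
    ssu = sum(s * u for s, u, _ in data)
    ssy = sum(s * y for s, _, y in data)
    suy = sum(u * y for _, u, y in data)
    det = sss * suu - ssu * ssu
    a = (ssy * suu - suy * ssu) / det
    b = (suy * sss - ssy * ssu) / det
    return a, b


def model_cost(k, a, b, horizon=20, starts=(-1.0, -0.5, 0.5, 1.0)):
    """Quadratic cost of u = -k*s, simulated entirely inside the model (a, b)."""
    total = 0.0
    for s in starts:
        for _ in range(horizon):
            u = -k * s
            total += s * s + 0.1 * u * u
            s = a * s + b * u
    return total


def optimize_policy(a, b):
    """Step 3: pick the gain k in [0, 4] that minimizes the model-predicted cost."""
    gains = [0.05 * i for i in range(81)]
    return min(gains, key=lambda k: model_cost(k, a, b))


def model_based_rl(iterations=3, seed=0):
    rng = random.Random(seed)
    k, data = 0.0, []
    for _ in range(iterations):
        data += collect_data(k, rng)  # 1. collect data (aggregated over iterations)
        a, b = fit_model(data)        # 2. fit a model
        k = optimize_policy(a, b)     # 3. optimize the policy under the model
    return k, a, b


if __name__ == "__main__":
    k, a, b = model_based_rl()
    print(f"fitted a={a:.3f}, b={b:.3f}, gain k={k:.2f}")
```

Because the policy is only ever scored inside `model_cost`, any error in the fitted `(a, b)` passes straight through to the chosen gain; in this toy the model class matches the true system, so the effect is small, but it is the route by which model bias enters.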

We will be investigating two orthogonal (and complementary) approaches to the model-bias problem that troubles current model-based RL methods: (i) Model-ensembles; (ii) Meta Reinforcement Learning.

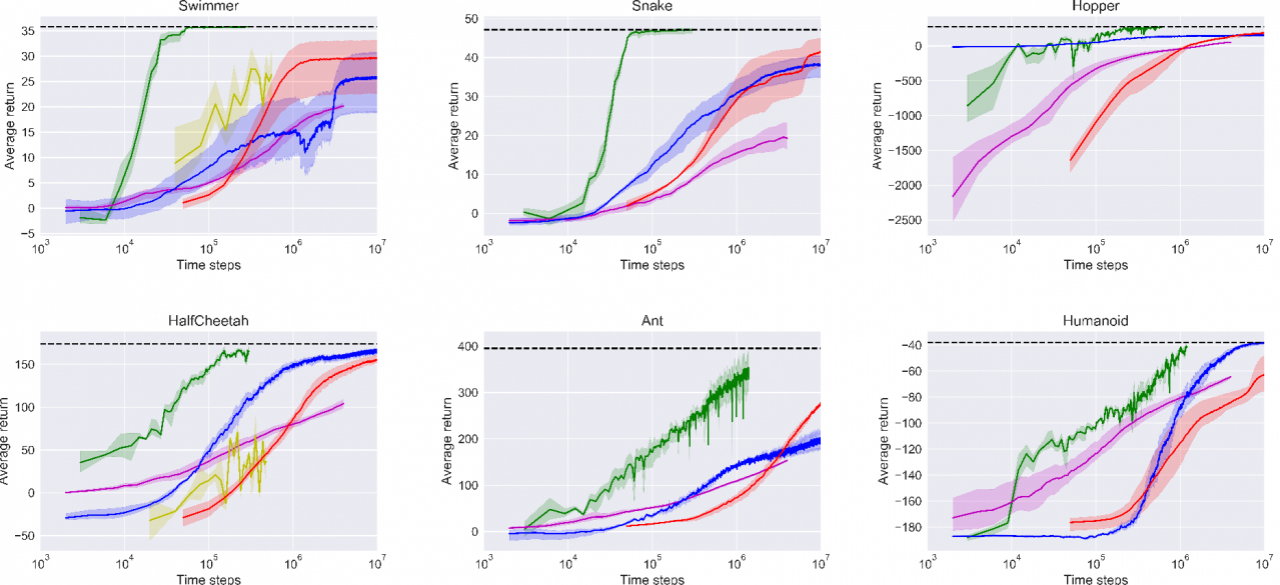

Model-ensembles: We will investigate a new solution to the model-bias problem: bootstrapping many deterministic neural network models, i.e., training each one on a resample of the collected data. Together they can represent a stochastic model that captures uncertainty. We will investigate model-based RL approaches that take advantage of this ensemble to keep the current policy from overfitting to any particular model (hence avoiding the model-bias problem). We will also investigate different policy optimization methods to be used in the learned simulator. Initial results indicate that model-free optimization approaches such as TRPO, A3C, DQN, and PPO are more robust optimizers than standard model-based policy optimization with backpropagation through time. We will study these differences.
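The ensemble idea can be illustrated with a toy sketch. It assumes, purely for illustration, a 1-D linear model class `s' ~ a*s + b*u` fitted by least squares in place of neural networks, synthetic transition data, and a linear policy `u = -k*s`; all helper names are hypothetical. Each member is fitted on a bootstrap resample of the same data. Sampling a member at every step turns the set of deterministic models into a stochastic one, and scoring a policy by its average cost across all members keeps it from exploiting any single member's errors.

```python
import random
import statistics


def fit_linear(data):
    """One deterministic member: least-squares fit of s' ~ a*s + b*u."""
    sss = sum(s * s for s, _, _ in data)
    suu = sum(u * u for _, u, _ in data)
    ssu = sum(s * u for s, u, _ in data)
    ssy = sum(s * y for s, _, y in data)
    suy = sum(u * y for _, u, y in data)
    det = sss * suu - ssu * ssu
    return ((ssy * suu - suy * ssu) / det, (suy * sss - ssy * ssu) / det)


def bootstrap_ensemble(data, n_models, rng):
    """Fit each member on a resample (with replacement) of the same dataset."""
    return [fit_linear([rng.choice(data) for _ in data]) for _ in range(n_models)]


def sample_next_state(models, s, u, rng):
    """Stochastic model: a randomly chosen member predicts this transition."""
    a, b = rng.choice(models)
    return a * s + b * u


def disagreement(models, s, u):
    """Spread of the members' predictions; grows as (s, u) moves away from the data."""
    return statistics.pstdev([a * s + b * u for a, b in models])


def rollout_cost(k, a, b, horizon=20, starts=(-1.0, -0.5, 0.5, 1.0)):
    """Quadratic cost of u = -k*s under one member."""
    total = 0.0
    for s in starts:
        for _ in range(horizon):
            u = -k * s
            total += s * s + 0.1 * u * u
            s = a * s + b * u
    return total


def robust_gain(models):
    """Choose k by the mean cost over all members, not by any single member."""
    gains = [0.05 * i for i in range(81)]
    return min(gains, key=lambda k: statistics.fmean([rollout_cost(k, a, b) for a, b in models]))


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic transitions from a hypothetical system s' = 0.9*s + 0.5*u + noise,
    # collected only for |s|, |u| <= 1.
    data = []
    for _ in range(200):
        s, u = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append((s, u, 0.9 * s + 0.5 * u + rng.gauss(0.0, 0.05)))
    models = bootstrap_ensemble(data, n_models=5, rng=rng)
    print("spread near the data:", disagreement(models, 0.5, 0.5))
    print("spread far from it:  ", disagreement(models, 10.0, 10.0))
    print("robust gain:", robust_gain(models))
```

Averaging over members is only one way to use the ensemble; a model-free optimizer could instead train on rollouts generated with `sample_next_state`, which matches the text's interest in TRPO- or PPO-style optimizers inside the learned simulator.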

Meta Reinforcement Learning: Model bias is the inevitable discrepancy between a learned dynamics model and the real world. Traditional model-based RL uses this imperfect model to train policies, so as long as there is a mismatch, the policy will have difficulty carrying over to the real world. To overcome this, we propose to instead use the learned dynamics models as environments in which to train a meta reinforcement learning algorithm, which learns to adapt quickly when the environment changes from one learned simulation environment to another. After meta-learning in simulation, we can then expect to adapt more quickly to the real world. Meta reinforcement learning has seen success across a range of environment distributions, e.g. multi-armed bandits, simple MuJoCo environments (cheetah, ant), and first-person vision-based maze navigation [10, 11, 12]. In this prior work, transfer was from one randomly sampled simulated environment to another. Here we will be working towards transfer from a large ensemble of simulated environments to the real world. Transfer of this kind has already shown various promising results in settings without meta-learning [13, 14, 15, 16].
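The meta-learning idea can be sketched in a deliberately tiny setting. This is not the proposed algorithm: it is a MAML-style [11] sketch that assumes each learned model is a hypothetical 1-D linear model `(a, b)`, that the "policy" is a single feedback gain `theta` scored by the one-step cost `(a - b*theta)**2` from `s = 1`, and that exact analytic gradients stand in for the policy-gradient estimates a meta-RL method would actually use. The meta-trained `theta` is an initialization from which one gradient step on a new model (here a stand-in for the real world) reduces the cost.

```python
import random


def task_loss(theta, model):
    """One-step cost of gain theta under linear model (a, b): squared next state from s = 1."""
    a, b = model
    return (a - b * theta) ** 2


def task_grad(theta, model):
    a, b = model
    return -2.0 * b * (a - b * theta)


def adapt(theta, model, alpha):
    """Inner loop: one gradient step on a single environment (fast adaptation)."""
    return theta - alpha * task_grad(theta, model)


def meta_grad(theta, model, alpha):
    """Exact MAML gradient d/dtheta of task_loss(adapt(theta)), including the second-order term."""
    _, b = model
    phi = adapt(theta, model, alpha)
    dphi_dtheta = 1.0 - 2.0 * alpha * b * b  # derivative of the inner update w.r.t. theta
    return task_grad(phi, model) * dphi_dtheta


def meta_train(models, alpha=0.5, beta=0.1, steps=500, batch=4, seed=0):
    """Outer loop: update the shared initialization over random batches of learned models."""
    rng = random.Random(seed)
    theta = 0.0
    for _ in range(steps):
        tasks = [rng.choice(models) for _ in range(batch)]
        theta -= beta * sum(meta_grad(theta, m, alpha) for m in tasks) / batch
    return theta


if __name__ == "__main__":
    # Hypothetical ensemble of learned dynamics models and a held-out "real world".
    learned_models = [(0.9, 0.5), (1.0, 0.4), (0.8, 0.6), (0.95, 0.45)]
    real_world = (0.85, 0.55)
    theta = meta_train(learned_models)
    adapted = adapt(theta, real_world, alpha=0.5)
    print(f"before adaptation: {task_loss(theta, real_world):.4f}")
    print(f"after one step:    {task_loss(adapted, real_world):.4f}")
```

In a real system the inner step on the real world would use a gradient estimated from a small number of real rollouts rather than the known `real_world` parameters assumed here.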

References

[1] Mnih, Volodymyr, et al. "Playing Atari with deep reinforcement learning." arXiv preprint arXiv:1312.5602 (2013).

[2] Mnih, Volodymyr, et al. "Human-level control through deep reinforcement learning." Nature 518.7540 (2015): 529-533.

[3] Silver, David, et al. "Mastering the game of Go with deep neural networks and tree search." Nature 529.7587 (2016): 484-489.

[4] OpenAI. "Dota 2." OpenAI Blog, 11 Aug. 2017, blog.openai.com/dota-2/.

[5] Atkeson, Christopher G., and Juan Carlos Santamaria. "A comparison of direct and model-based reinforcement learning." Robotics and Automation, 1997. Proceedings., 1997 IEEE International Conference on. Vol. 4. IEEE, 1997.

[6] Deisenroth, Marc, and Carl E. Rasmussen. "PILCO: A model-based and data-efficient approach to policy search." Proceedings of the 28th International Conference on machine learning (ICML-11). 2011.

[7] Deisenroth, Marc Peter, Dieter Fox, and Carl Edward Rasmussen. "Gaussian processes for data-efficient learning in robotics and control." IEEE Transactions on Pattern Analysis and Machine Intelligence 37.2 (2015): 408-423.

[8] Nagabandi, Anusha, et al. "Neural Network Dynamics for Model-Based Deep Reinforcement Learning with Model-Free Fine-Tuning." arXiv preprint arXiv:1708.02596 (2017).

[9] Brockman, Greg, et al. "OpenAI Gym." arXiv preprint arXiv:1606.01540 (2016).

[10] Duan, Yan, et al. "Fast Reinforcement Learning via Slow Reinforcement Learning." arXiv preprint arXiv:1611.02779 (2016).

[11] Finn, Chelsea, et al. "Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks." ICML 2017. arXiv:1703.03400.

[12] Mishra, Nikhil, et al. "Meta-Learning with Temporal Convolutions." arXiv preprint arXiv:1707.03141 (2017).

[13] Tzeng, Eric, et al. "Deep Domain Confusion: Maximizing for Domain Invariance." CVPR 2015. arXiv:1412.3474.

[14] Sadeghi, Fereshteh, et al. "CAD2RL: Real Single-Image Flight without a Single Real Image." RSS 2017. arXiv:1611.04201.

[15] Tobin, Josh, et al. "Domain Randomization for Transferring Deep Neural Networks from Simulation to the Real World." IROS 2017. arXiv:1703.06907.

[16] Peng, Xue Bin, et al. "Sim-to-Real Transfer of Robotic Control with Dynamics Randomization." arXiv preprint arXiv:1710.06537 (2017).

| Principal investigators | Researchers | Themes |
|---|---|---|
| Pieter Abbeel | Thanard Kurutach, Carlos Florensa, and Ignasi Clavera | Model-based Reinforcement Learning, Policy Optimization, Meta-learning |