Offline Reinforcement Learning for Data-Driven Autonomous Driving

ABOUT THIS PROJECT

At a glance

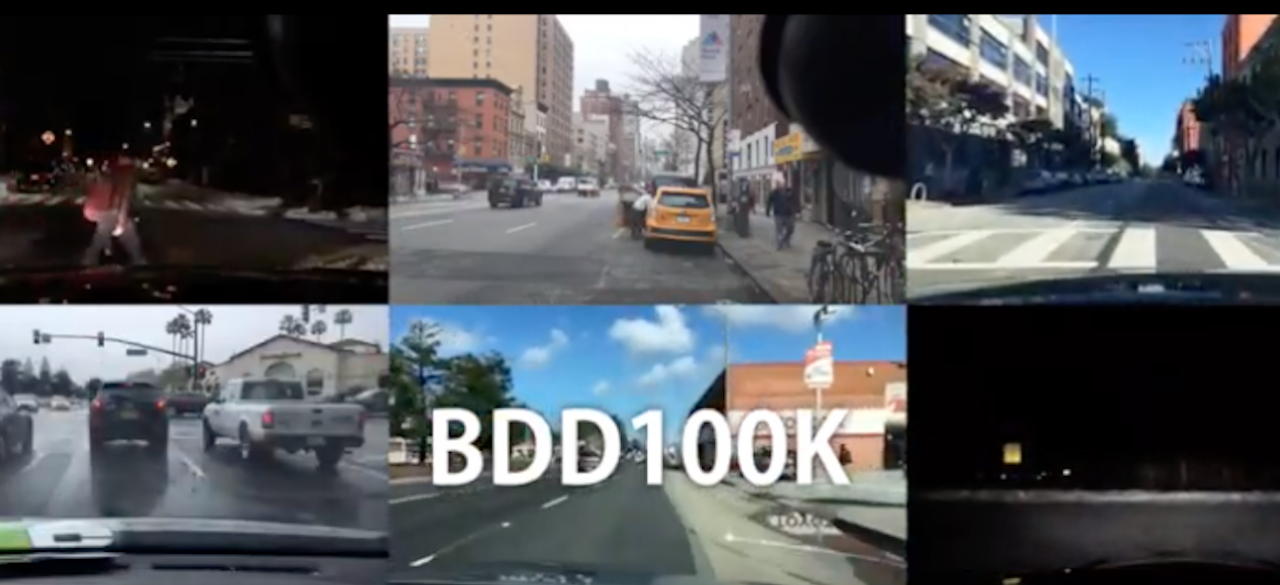

Reinforcement learning has emerged as a highly promising technology for automating the design of control policies. Deep reinforcement learning in particular holds the promise of removing the need for manual design of perception and control pipelines, learning the entire policy -- from raw observations to controls -- end to end.

However, reinforcement learning is conventionally regarded as an online, active learning paradigm: an agent interacts with the world to collect data, uses this data to update its behavior, and then collects more data. While this is feasible in simulation, real-world autonomous driving is not conducive to this type of learning process: a partially trained policy cannot simply be deployed on a real vehicle to collect more data, as this would likely result in catastrophic failure. This leaves us with two unenviable options: rely entirely on simulated training, or manually design perception, control, and safety mechanisms.

In this project, we will investigate a third option: fully off-policy reinforcement learning, also referred to as offline reinforcement learning, in which the policy is learned entirely from previously logged data without further interaction with the environment. Our recent work has seen the development of radical new algorithms for offline RL that substantially improve on the state of the art in reinforcement learning from logged data [1, 2], which I discuss in this recent article. The aim of this project is to extend and apply these techniques to learning driving policies, both in a simulated evaluation using CARLA and using real data from the BDD100K dataset. To our knowledge, this would be the first attempt at large-scale offline reinforcement learning for a realistic autonomous driving application.

If successful, this research would yield an entirely new way to acquire autonomous driving policies that are trained end to end, without the dangers of real-world RL, the engineering cost of sim-to-real transfer, or the data collection burden of imitation learning (since offline RL can learn from suboptimal data).

| principal investigators | researchers | themes |
|---|---|---|
| Sergey Levine | | autonomous driving, reinforcement learning, deep learning |