Principled Defenses Against Adversarial Attacks on Deep Learning

ABOUT THIS PROJECT

At a glance

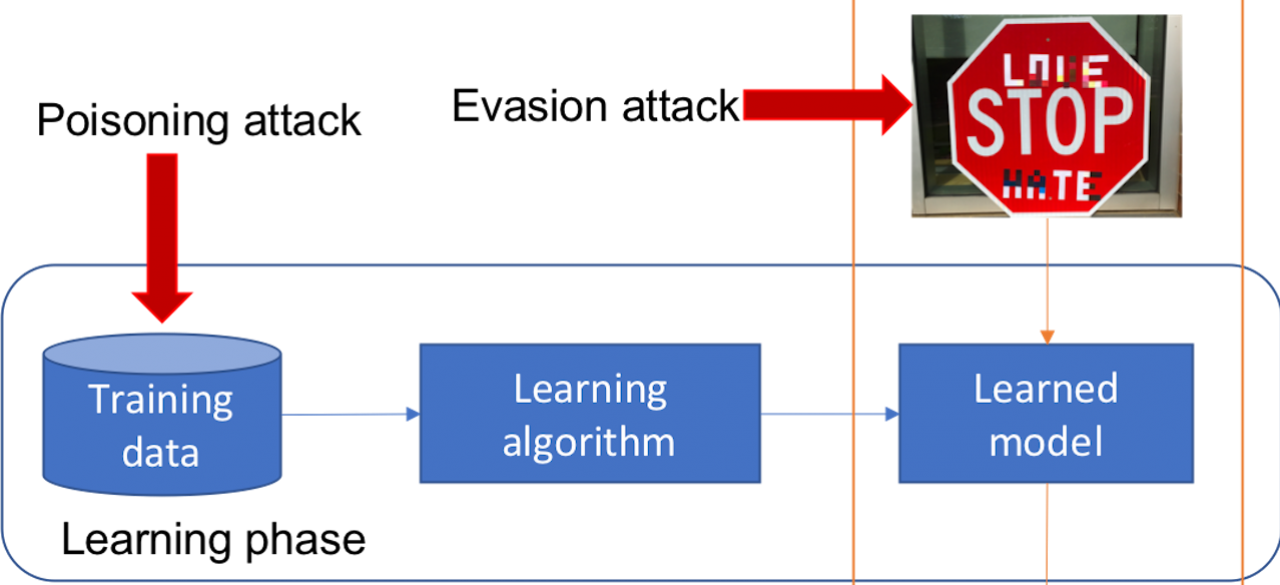

In this project, we propose to develop theoretical foundations for secure learning systems and to investigate principled methods for improving model robustness in diverse real-world applications. The scope of the project covers both data poisoning and evasion attacks. We will contribute several defense strategies that improve system robustness to the BDD Code Repository, along with benchmark datasets for robustness evaluation.

| Principal Investigators | Researchers | Themes |
|---|---|---|
| Dawn Song | Ruoxi Jia, Xinyun Chen | adversarial machine learning, evasion attacks, data poisoning attacks, defense |