Real-time and Accurate Object Detection through Systematic Quantization of Transformer and MLP-based Computer Vision Models

ABOUT THE PROJECT

At a glance

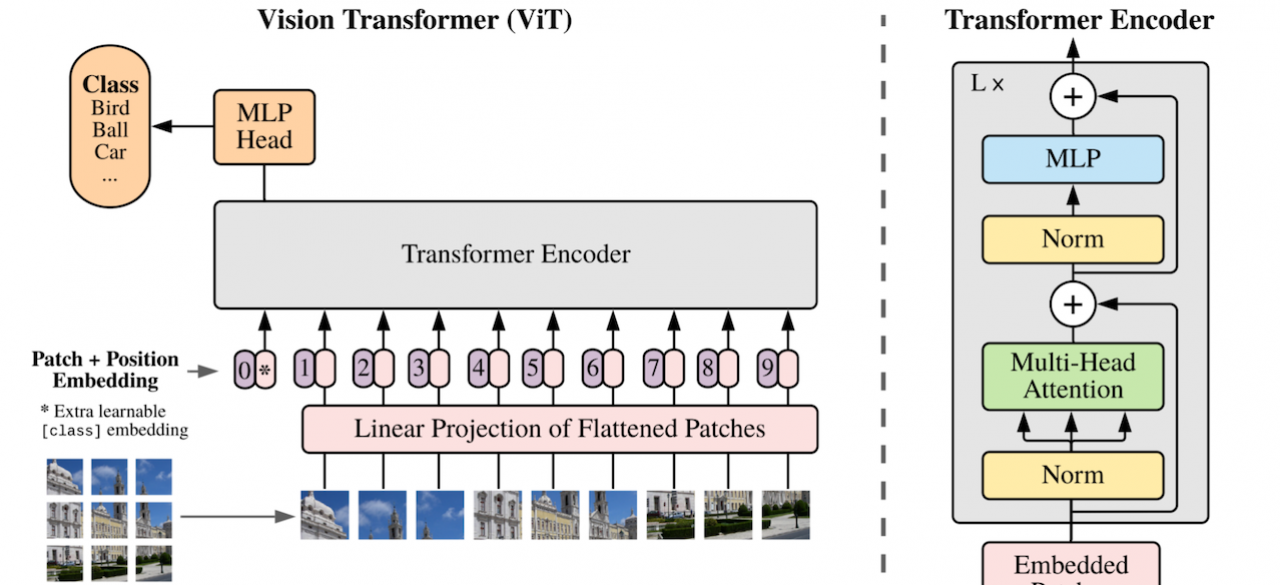

Mainstream deep learning research focuses on improving the accuracy of neural networks (NNs); however, most real-world applications have real-time requirements that impose latency constraints on NN inference. In autonomous driving applications in particular, it is essential that NNs meet their latency constraints. To compound this challenge, many automotive applications must run on edge processors with limited computational power. For this reason, practitioners have been forced to use shallow networks with suboptimal accuracy to stay within the latency budget. One promising solution is to quantize the weights and activations of an NN model, so that it can fit into embedded hardware and expose additional opportunities for parallel execution. However, most current quantization methods rely on ad hoc heuristics and incur a high computational cost from brute-force search. In addition, these methods typically target compact CNN-based models, while recent state-of-the-art NNs for vision tasks have more parameters, and many are based on transformer [1,2] or MLP [3,4] architectures.

Our goal is to use second-order (Hessian) information to quantize NN models systematically. So far we have developed two Hessian-based quantization frameworks, HAWQ [5,6] and ZeroQ [7,8]. In this proposal we plan to extend these frameworks by: (i) developing a better quantization strategy for large neural networks with state-of-the-art performance on object detection, (ii) developing quantization-friendly operations for transformer-based and MLP-based models, (iii) extending ZeroQ/HAWQ to automatically decide the neural architecture jointly with the quantization bitwidth, and (iv) extending the ZeroQ/HAWQ frameworks to ultra-low precisions such as binary or ternary quantization.
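To make the idea of Hessian-guided quantization concrete, here is a minimal Python sketch under stated assumptions. It (a) fake-quantizes weights with a uniform symmetric quantizer, (b) estimates a layer's Hessian trace with Hutchinson's method using only Hessian-vector products, (c) scores each layer by its average Hessian trace times its squared quantization error, a sensitivity measure in the spirit of the HAWQ line of work, and (d) assigns per-layer bitwidths under a storage budget with a simple greedy search. The greedy search, the toy diagonal Hessians, and all names (`quantize_symmetric`, `hutchinson_trace`, `perturbation`, `allocate_bits`, and the layer names) are hypothetical illustrations, not the HAWQ or ZeroQ implementation.

```python
import random


def quantize_symmetric(weights, bits):
    """Uniform symmetric fake-quantization: snap each weight to an integer grid
    in [-qmax, qmax] scaled by max|w|, then map it back to a float."""
    if bits < 2:
        raise ValueError("this sketch needs bits >= 2 (bits=2 gives ternary levels)")
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0.0:
        return list(weights)
    scale = max_abs / qmax
    return [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]


def hutchinson_trace(hvp, dim, num_samples=64, seed=0):
    """Estimate Tr(H) as the mean of v^T H v over random Rademacher vectors v,
    using only Hessian-vector products hvp(v); H is never formed explicitly."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        v = [rng.choice((-1.0, 1.0)) for _ in range(dim)]
        total += sum(vi * hvi for vi, hvi in zip(v, hvp(v)))
    return total / num_samples


def perturbation(avg_trace, weights, bits):
    """Sensitivity score: average Hessian trace times squared quantization error."""
    q = quantize_symmetric(weights, bits)
    return avg_trace * sum((qi - wi) ** 2 for qi, wi in zip(q, weights))


def allocate_bits(layers, candidates, budget_bits):
    """Hypothetical greedy mixed-precision search: start every layer at the highest
    bitwidth, then repeatedly lower the layer whose next drop adds the least
    perturbation, until total weight storage fits within budget_bits."""
    candidates = sorted(candidates, reverse=True)
    level = {name: 0 for name in layers}  # index into candidates per layer

    def total_bits():
        return sum(len(layers[n]["weights"]) * candidates[level[n]] for n in layers)

    while total_bits() > budget_bits:
        best_name, best_cost = None, None
        for name, layer in layers.items():
            i = level[name]
            if i + 1 == len(candidates):
                continue  # already at the lowest candidate bitwidth
            cost = (perturbation(layer["avg_trace"], layer["weights"], candidates[i + 1])
                    - perturbation(layer["avg_trace"], layer["weights"], candidates[i]))
            if best_cost is None or cost < best_cost:
                best_name, best_cost = name, cost
        if best_name is None:
            raise ValueError("budget is infeasible even at the lowest bitwidth")
        level[best_name] += 1
    return {name: candidates[level[name]] for name in layers}


# Toy model: three hypothetical layers whose diagonal Hessians differ in curvature.
rng = random.Random(42)
layers = {}
for name, curvature in [("backbone.conv1", 10.0), ("neck.attn", 1.0), ("head.mlp", 0.01)]:
    n = 16
    diag = [curvature] * n
    hvp = lambda v, d=diag: [di * vi for di, vi in zip(d, v)]  # H v for diagonal H
    weights = [rng.gauss(0.0, 1.0) for _ in range(n)]
    layers[name] = {"weights": weights, "avg_trace": hutchinson_trace(hvp, n) / n}

bits = allocate_bits(layers, candidates=[8, 4, 2], budget_bits=16 * (8 + 4 + 2))
print(bits)
```

With a diagonal Hessian, Hutchinson's estimate is exact because every Rademacher entry squares to 1; in a real network the Hessian-vector product would come from automatic differentiation and the estimate would be stochastic. The greedy loop tends to keep more bits in the high-curvature layer than in the low-curvature one, which is the qualitative behavior Hessian-aware quantization aims for.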

| principal investigators | researchers | themes |
|---|---|---|
| Kurt Keutzer | | Real-Time Object Detection, Transformers, MLP-Based Models, Quantization, Compression, TinyML |

This is a continuation of the completed project: "Efficient Deep Learning for ADAS/AV Through Systematic Pruning and Quantization".