Real-time, Energy Efficient Video Object Detection and Tracking

ABOUT THE PROJECT

At a glance

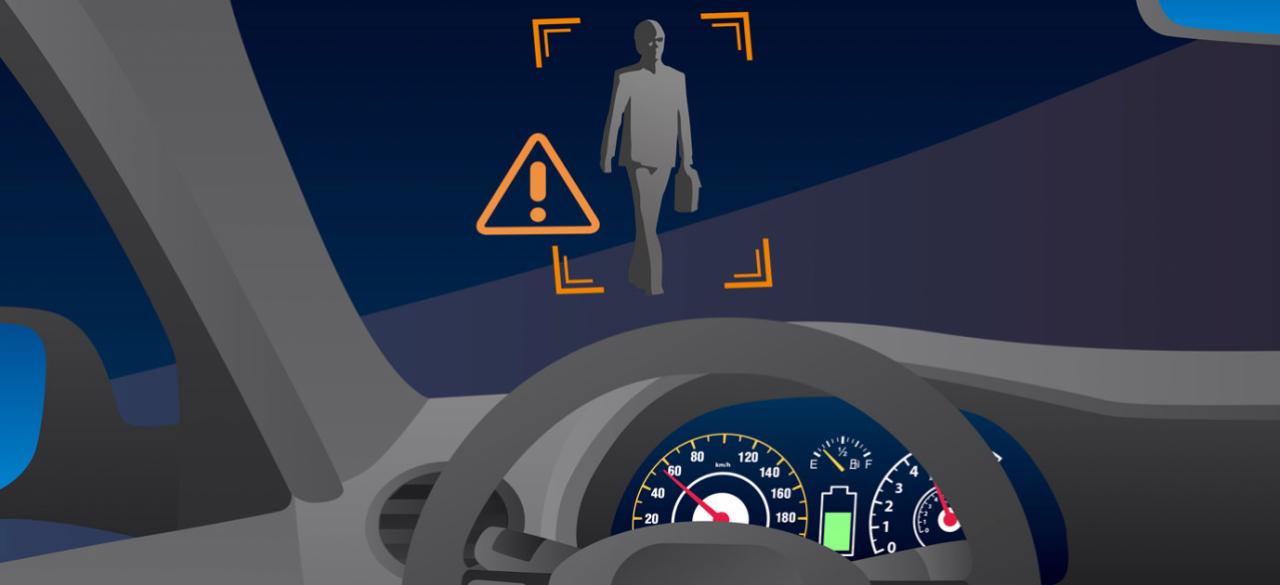

Object detection and tracking are fundamental problems in computer vision and are foundations of perception for autonomous driving. In recent years, Deep Neural Networks have brought remarkable gains in object detection accuracy. However, previous efforts have focused primarily on: a) improving accuracy as measured by bounding-box overlap (intersection-over-union), and b) detection on static images. In contrast, autonomous driving applications need models that can perform accurate detection and tracking on video sequences in real time, and that can do so in an energy-efficient manner. We plan to explore the following three aspects:

a) From static images to videos: video object detection can exploit the spatial-temporal information in videos to produce more accurate, consistent, and robust detections on each frame, and potentially to avoid redundant computation.

b) From detection to tracking. Tracking can provide the historical trajectories of other traffic objects, which are important for predicting the behavior of those objects and, ultimately, for planning the trajectory of the ego vehicle.

c) Efficient neural network architectures for spatial-temporal modeling. Autonomous driving requires the perception model to achieve real-time inference speed (>25 frames per second) and low power consumption (~20 W). Therefore, we would like to explore efficient architectures for modeling the spatial-temporal evolution of videos.
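To make the targets in c) concrete, the short Python sketch below converts a frame rate and a power draw into a per-frame latency and energy budget. The function name `frame_budget` is hypothetical, and the calculation assumes a constant power draw at exactly the stated figures, which is a simplification rather than a measured result from the project.

```python
def frame_budget(fps: float, power_watts: float) -> tuple[float, float]:
    """Return (latency budget in ms, energy budget in joules) per frame.

    Assumes constant power draw and a fixed frame rate (illustrative only).
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    latency_ms = 1000.0 / fps        # time available to process one frame
    energy_j = power_watts / fps     # watts = joules/second, so J per frame
    return latency_ms, energy_j


# Targets quoted in the text: at least 25 frames per second, roughly 20 W.
latency_ms, energy_j = frame_budget(fps=25.0, power_watts=20.0)
print(f"{latency_ms:.1f} ms per frame, {energy_j:.2f} J per frame")
# prints "40.0 ms per frame, 0.80 J per frame"
```

Under these assumptions, the whole perception pipeline has at most about 40 ms and about 0.8 J to spend on each frame.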

Research agenda and challenges

In our previous project funded by BDD, we proposed SqueezeDet [Wu2016], a convolutional neural network (CNN) for image-based object detection that achieved substantial reductions in latency and energy compared with previous work. In this project, we propose to extend our work on designing fast, energy-efficient Neural Nets (NN) from images to videos. We will focus on the following three aspects:

Efficient neural networks for spatial-temporal modeling: Video-based detection and tracking tasks require us to exploit both spatial and temporal characteristics. The common approach to modeling temporal characteristics is to use recurrent neural networks (RNN). However, most state-of-the-art RNN models (LSTM, GRU) require much more computing resources than CNN models. Therefore, our goal is to design efficient NN architectures that model spatial-temporal evolution with reduced computational cost, smaller model size, and reduced power consumption.

Video object detection: Given the energy, speed, and model size advantages of SqueezeDet, it is natural for us to choose SqueezeDet as the foundation and extend it to video object detection. Our effort in video object detection can be summarized in three aspects: 1) We will integrate the proposed spatial-temporal architecture into SqueezeDet to enable it to capture temporal evolution. 2) We will design loss functions that induce the network to generate accurate and stable detection results. 3) We will explore intuitive and reasonable metrics to evaluate both the accuracy and the consistency of video object detection.

Tracking: To best leverage SqueezeDet’s faster-than-real-time inference speed, we will adopt the tracking-by-detection approach [Breitenstein2009], where the goal is to associate detections across frames with the same entities. In previous works [cvlibs2014], entity association is performed by rule-based algorithms as a separate post-processing step after detection. In this research, we plan to integrate association and detection into a unified learning problem that can be trained end-to-end. Furthermore, we plan to formulate entity association, a core problem in tracking, using conventional NN operations in order to best leverage existing hardware optimization efforts.
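For context, the kind of rule-based, post-processing association that this project aims to replace can be sketched in a few lines of Python. The example below is a generic greedy intersection-over-union (IoU) matcher, not the method of the cited works or of this project; the names `iou` and `associate`, the box format, and the `min_iou` threshold of 0.3 are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks: Dict[int, Box], detections: List[Box],
              min_iou: float = 0.3) -> Dict[int, int]:
    """Greedily match existing tracks to new detections by descending IoU.

    Returns {track_id: detection_index}. Unmatched detections would
    typically start new tracks; that step is omitted here.
    """
    # Score every (track, detection) pair, best overlap first.
    pairs = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        reverse=True,
    )
    matches: Dict[int, int] = {}
    used = set()
    for score, tid, j in pairs:
        if score < min_iou:
            break  # all remaining pairs overlap too little
        if tid in matches or j in used:
            continue  # each track and each detection is matched at most once
        matches[tid] = j
        used.add(j)
    return matches


# Boxes from the previous frame (by track id) and the current frame.
tracks = {1: (0.0, 0.0, 10.0, 10.0), 2: (20.0, 20.0, 30.0, 30.0)}
detections = [(21.0, 21.0, 31.0, 31.0), (1.0, 0.0, 11.0, 10.0),
              (50.0, 50.0, 60.0, 60.0)]
print(associate(tracks, detections))
```

Here track 1 is matched to detection 1 and track 2 to detection 0, while detection 2 overlaps no existing track. The sorting and thresholding are hand-written rules applied after detection, which is the separation that an end-to-end formulation would remove.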

| principal investigators | researchers | themes |
|---|---|---|
| Kurt Keutzer | | Efficient neural nets, tracking, video object detection |