Sequence Modeling as Reinforcement Learning

ABOUT THE PROJECT

At a glance

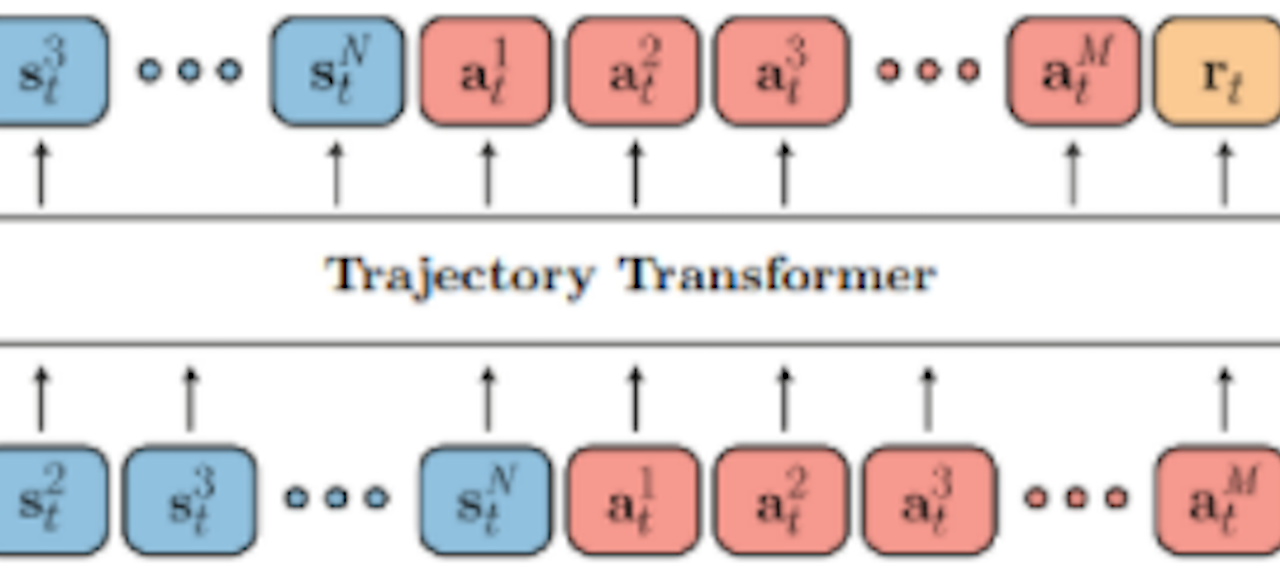

Reinforcement learning is typically approached through value estimation or policy gradients. At its core, however, it can also be regarded as a sequence modeling problem: the goal is to determine, from sequentially observed states, a sequence of actions that leads to a desired outcome. This perspective is interesting because recent years have seen tremendous advances in sequence modeling (e.g., language modeling, Transformers, GPT-3), and the prospect of leveraging these advances to enable simpler and more scalable RL techniques is highly appealing.

Such methods could provide a set of capabilities that make reinforcement learning significantly more practical for autonomous driving. Effective sequence models applied to driving data would provide not only a way for autonomous vehicles to decide how to attain navigational goals, but also learned “agent simulators” that can forecast the behavior of other vehicles on the road and even model other agents such as pedestrians. That all of this could be done with essentially the same set of algorithmic and modeling tools is also highly desirable.

The goal of this research project is therefore to develop a framework for reinforcement learning for autonomous driving centered on sequence modeling, and to evaluate this framework for both model-based RL and multi-agent forecasting, including forecasting of other vehicles and pedestrians.

| Principal Investigators | Researchers | Themes |
|---|---|---|
| Sergey Levine | | Transformers, sequence modeling, reinforcement learning, forecasting, prediction, pedestrian prediction, vehicle prediction |