Test-time Training for Adaptive and Continuous Learning

ABOUT THIS PROJECT

At a glance

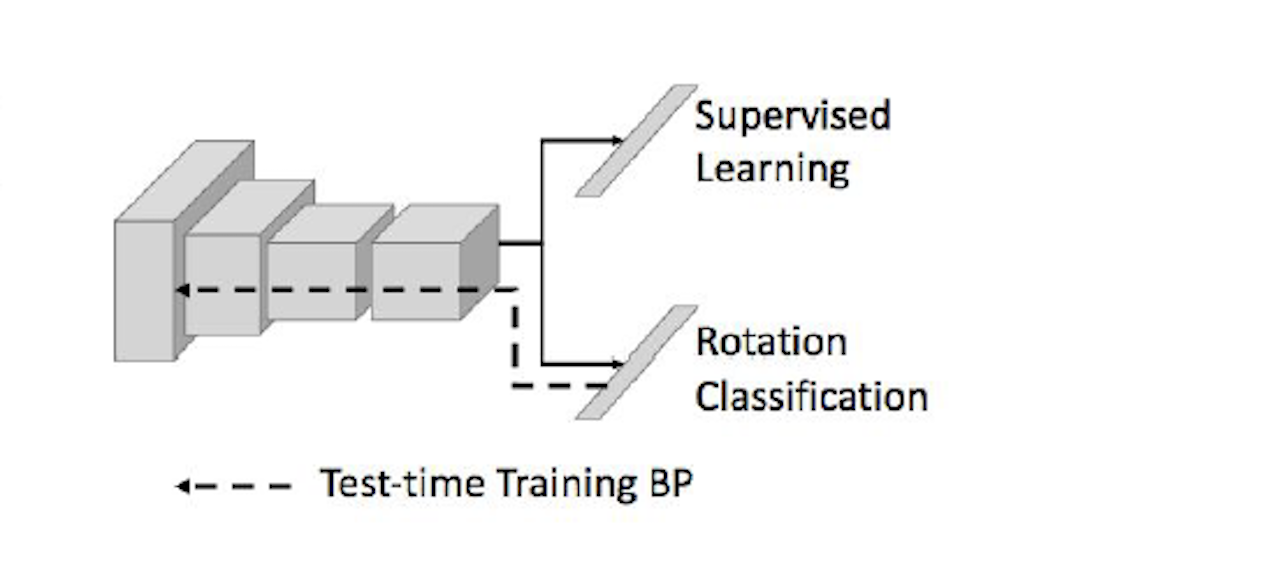

This proposal argues that using a fixed training set is fundamentally flawed for building robust and adaptable models. Instead, our artificial agents should follow the example of real agents and train on a continuous, never-repeating, and never-ending stream of input data. Each data sample is first used as test data, then as training data, and is then permanently discarded. This “use each training sample only once” philosophy not only prevents overfitting, but also allows for continuous adaptation to the ever-changing environment of the real world. We believe that this kind of continuous test-time training (related to Michal Irani’s idea of “internal learning”) can be critically important for a number of practical automotive applications. In this proposal, we will focus on three: 1) continuous domain adaptation, 2) test-time adaptive image alignment, and 3) test-time disentanglement.

| principal investigators | researchers | themes |
|---|---|---|
| Alexei Alyosha Efros | | continuous learning, domain adaptation, test-time training, disentanglement |