Testing and Verification of Safe Network-Based Driving Algorithms

ABOUT THE PROJECT

At a glance

1. Background and Motivation

In risk assessment of automotive systems, safety is the foremost consideration. While deep neural networks have great potential for autonomous driving, the challenge remains to ensure the safety of the intended functions, even when the network may not be fully understood. We propose to structure such a network-based driving system hierarchically so that a supervisory validation layer can provide oversight and govern the overall safety integrity. Functional blocks can be further modularized to offer parallel pathways for dealing with various situations. For example, a deep-learning module suited to uncharted conditions, such as unmarked and uneven areas, can co-exist with a classical module that performs with high reliability in more structured environments such as highway driving.

2. Research Goal

We will explore how to integrate different approaches so as to benefit from their complementary features and from the redundancy that the proposed framework makes possible. At the same time, our study will perform comparative evaluations to investigate how a level of trust and confidence can be defined for each subsystem and traced for the system as a whole, and how a self-diagnostic capability can be established.

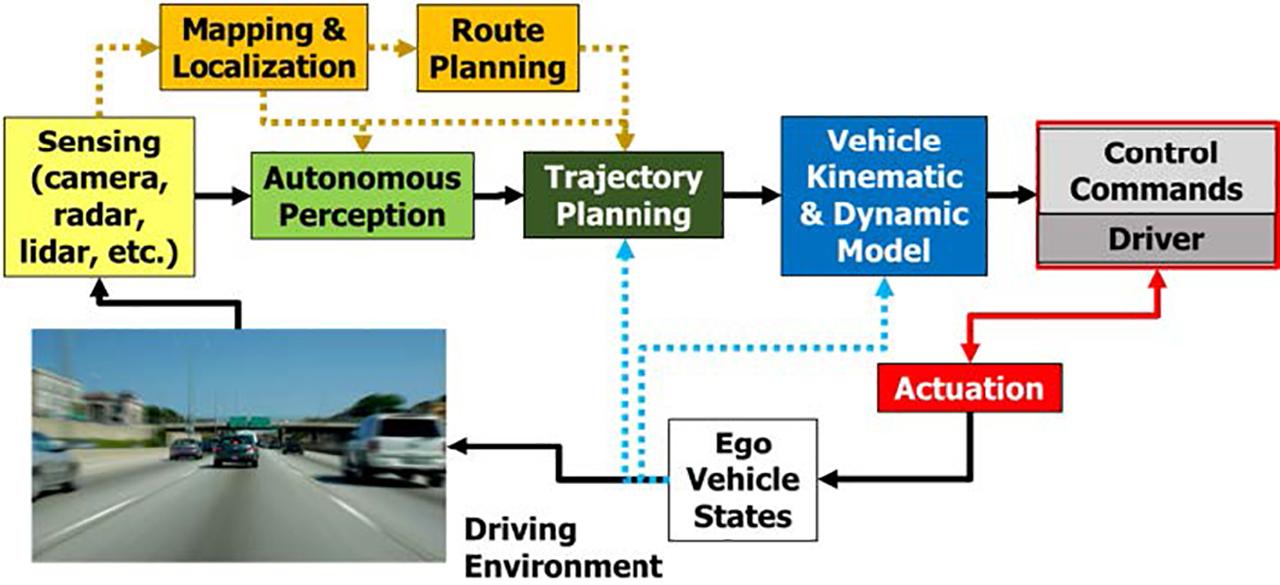

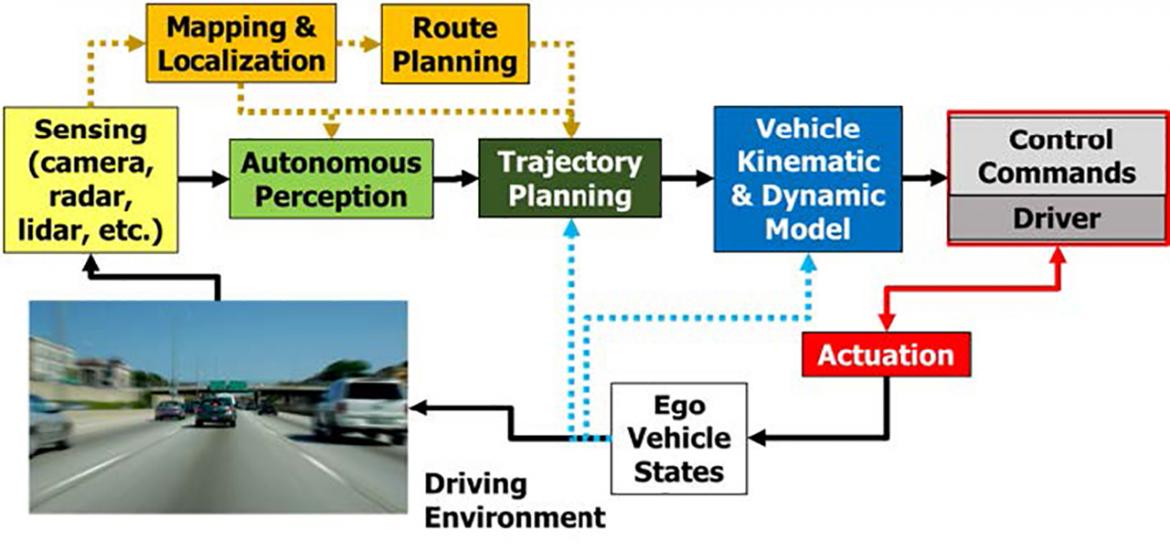

Figure: Functional Block Diagram of an Automated Driving System

3. Framework for Comparative Evaluation of Functional Modules

In a modular system, the hierarchical and parallel co-existence of modules allows a comparative evaluation of a network-based approach and a classical planning-based approach. Every separable component of the network-based driving approach will be designed to output a measure of its confidence. For scene parsing, this measure can be obtained by comparing the current scene's resemblance to scenes in the training set [1]; in the classical planning case, a failure to detect lane markings, for example, would lower the confidence level. Measures of safety performance also need to be investigated for other relevant automated driving functions. The main components of our proposed framework include the following.

- One classical system that includes the functional modules for detection, trajectory planning, vehicle model, and operational control, capable of executing designated scenarios.

  - This classical system essentially contains all functional blocks in the diagram above. We plan to leverage open-source resources, such as those provided by AUTOWARE [2] and existing BDD work.

  - In the classical approach, the sensors may include those available on the BDD experimental vehicles, such as lidar, radar, cameras, IMU, and DGPS. Each sensor modality has an associated quality measure, and a common confidence function has to be designed based on the individual sensor readings.

  - A BDD Sandbox project is expected to commence in 2018 and will be leveraged if it becomes available.

- One machine learning (ML) network-based module capable of deciding on and executing driving maneuvers in unstructured environments. An investigation will determine how confidence and reliability can be reported in each of its building blocks:

  - Scene understanding: regardless of the ML technique used, scene understanding has to be made transparent so that it can be compared through the validation layer. Some elements of the scene-parsing module may build on existing work such as a rule-based method [3].

  - Policy-based driving: approaches such as end-to-end trajectory planning and reinforcement learning, as in previous BDD work [4, 5], will be evaluated for mapping sensory input to a desired trajectory by network inference.

- One validation layer that monitors the scene understanding, confidence level, and output of the underlying modules. It will create an independent best-estimate environment model to provide a redundant check on the scene comprehension of the other modules. Furthermore, it will monitor the internal confidence level of individual modules and rate the safety of their outputs, which will take the form of complete suggested future trajectories. Its functionality will combine scene-comprehension validation with fail-state checks based on module-specific performance functions.
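The arbitration role of the validation layer can be sketched as a small function. This is a minimal illustration under stated assumptions, not the project's design. It assumes each module reports a scalar confidence in [0, 1] together with a complete suggested trajectory given as a list of (x, y) points. The validation layer's independent environment model is reduced to a list of obstacle positions, and a trajectory is treated as a fail state if any point comes within a fixed safety margin of an obstacle. The names `ModuleOutput`, `EnvironmentModel` and `validate`, the 0.6 confidence threshold and the 1.5 m margin are all hypothetical. A return value of `None` stands for handing control to a minimum-risk fallback.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in metres; frame convention is an assumption


@dataclass
class ModuleOutput:
    """Hypothetical report from one driving module (classical or network-based)."""
    name: str
    confidence: float        # self-reported confidence in [0, 1]
    trajectory: List[Point]  # complete suggested future trajectory


@dataclass
class EnvironmentModel:
    """Hypothetical stand-in for the validation layer's own best-estimate scene model."""
    obstacles: List[Point] = field(default_factory=list)
    safety_margin: float = 1.5  # metres; assumed value

    def is_clear(self, trajectory: Sequence[Point]) -> bool:
        # Fail-state check: every trajectory point must keep the safety margin
        # to every obstacle that the validation layer itself believes is present.
        return all(
            math.dist(p, o) >= self.safety_margin
            for p in trajectory
            for o in self.obstacles
        )


Check = Callable[[ModuleOutput], bool]  # hypothetical module-specific performance function


def validate(outputs: Sequence[ModuleOutput],
             env: EnvironmentModel,
             min_confidence: float = 0.6,
             extra_checks: Sequence[Check] = ()) -> Optional[ModuleOutput]:
    """Return the highest-confidence proposal that passes every check.

    None means no proposal is acceptable and a minimum-risk fallback
    should take over."""
    accepted = []
    for out in outputs:
        if out.confidence < min_confidence:
            continue  # the module does not trust its own output enough
        if not out.trajectory:
            continue  # no plan produced: treat as a fail state
        if not env.is_clear(out.trajectory):
            continue  # conflicts with the independent environment model
        if not all(check(out) for check in extra_checks):
            continue  # fails a module-specific performance function
        accepted.append(out)
    return max(accepted, key=lambda o: o.confidence, default=None)


if __name__ == "__main__":
    env = EnvironmentModel(obstacles=[(10.0, 0.0)])
    classical = ModuleOutput("classical", 0.9, [(0.0, 0.0), (5.0, 0.0), (10.0, 0.5)])
    network = ModuleOutput("network", 0.7, [(0.0, 0.0), (5.0, 2.0), (10.0, 3.0)])
    chosen = validate([classical, network], env)
    # The classical plan passes 0.5 m from the obstacle and is rejected.
    print(chosen.name if chosen else "minimum-risk fallback")  # prints: network
```

Picking the highest-confidence surviving proposal is only one possible selection policy. An arbiter could, for instance, prefer the classical module whenever it passes all checks in structured environments.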

4. Research Methodologies and Scope

We will use the facilities of the Richmond Field Station (RFS), including its test track and selected drivable areas, for a progressive plan of virtual, synthesized, and actual testing. The research plan includes the following tasks:

- Define driving scenarios at RFS, ranging from selected well-marked streets to a less structured path.

- Implement two different autonomous driving modules, classical and network-based, as described in the previous section. Existing work from BDD and elsewhere on detection, trajectory planning, scene parsing, end-to-end learning, and RL control will be leveraged.

- Design the validation layer to integrate the individual confidence levels of the driving modules and determine the overall system reliability.

- Design the individual confidence functions for all parts of the driving modules, and introduce additional measures into the module design where necessary. Existing approaches, e.g., novelty detection as in [1], will be leveraged.

- Build a simulation model that represents the driving path in a virtual environment, built either with CARLA [6] or as a 3-D model based on an open-source mapping tool.

- Use the BDD Lincoln MKZ or the Hyundai Genesis as the representative vehicle model.

- Perform simulations to test the driving models with the designated driving scenarios and expand to various virtual testing scenarios.

In a future extension, actual testing with experimental vehicles will be conducted after the minimum-risk controller has been validated in simulation and controlled testing.
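The tasks above call for confidence functions for each component and for integrating them into an overall reliability figure. Below is one minimal way to sketch this, under explicit assumptions. First, scene novelty is measured as the distance from the current scene's feature vector to the nearest training-set feature vector and mapped to a confidence with a decaying exponential. This nearest-neighbour rule is a simple stand-in and does not reproduce the novelty detector of [1]. Second, the classical module fuses per-sensor quality scores by a weighted average and scales its confidence down when no lane markings are detected. Third, the system confidence is taken as the minimum over components. All function names, weights, the `scale` parameter and the 0.5 lane penalty are hypothetical.

```python
import math
from typing import Dict, Sequence


def novelty_confidence(features: Sequence[float],
                       training_features: Sequence[Sequence[float]],
                       scale: float = 1.0) -> float:
    """Confidence from resemblance to the training set.

    Nearest-neighbour distance in a feature space is used here as a simple
    stand-in for the novelty detector of [1]; `scale` is a hypothetical
    tuning parameter."""
    if not training_features:
        return 0.0  # nothing to compare against: no trust
    d = min(math.dist(features, t) for t in training_features)
    return math.exp(-d / scale)  # 1.0 for a seen scene, decays towards 0 as novelty grows


def classical_confidence(sensor_quality: Dict[str, float],
                         weights: Dict[str, float],
                         lane_markings_detected: bool,
                         lane_penalty: float = 0.5) -> float:
    """Weighted average of per-sensor quality scores in [0, 1], scaled down
    when lane markings are not detected (weights and penalty are assumptions)."""
    total = sum(weights[s] for s in sensor_quality)
    if total <= 0:
        return 0.0
    fused = sum(weights[s] * q for s, q in sensor_quality.items()) / total
    return fused if lane_markings_detected else fused * lane_penalty


def system_confidence(component_confidences: Dict[str, float]) -> float:
    """Weakest-link aggregation: the system is trusted no more than its
    least confident component."""
    return min(component_confidences.values())


if __name__ == "__main__":
    train = [(0.0, 0.0), (1.0, 0.0)]
    scene = novelty_confidence((3.0, 0.0), train)  # nearest distance 2.0 -> exp(-2)
    lanes = classical_confidence({"lidar": 0.9, "camera": 0.6},
                                 {"lidar": 2.0, "camera": 1.0},
                                 lane_markings_detected=False)
    print(round(scene, 3), round(lanes, 3))  # prints: 0.135 0.4
    print(round(system_confidence({"scene": scene, "planner": lanes}), 3))  # prints: 0.135
```

The minimum is used because it requires no assumption about how component failures are correlated. Multiplying the confidences would instead treat them as independent probabilities.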

5. Anticipated Contributions to Berkeley Deep Drive Code Repository, Data and Software

The team will use existent high-‐‑performance workstations and leverage BDD resources, such as the BDD Sandbox project and the experimental vehicles. The project is expected to generate the following outcome to add to the BDD repository: A description of techniques and code structure that is required to implement the conceptual framework and software for the project tasks.

6. References

- [1] C. Richter, N. Roy. Safe Visual Navigation via Deep Learning and Novelty Detection, Robotics: Science and Systems, 2017.

- [2] AUTOWARE, https://github.com/CPFL/Autoware

- [3] C. Chen, et al. DeepDriving: Learning affordance for direct perception in autonomous driving, IEEE ICCV, 2015.

- [4] H. Xu, et al. End-to-end Learning of Driving Models from Large-scale Video Datasets, IEEE CVPR, 2017.

- [5] P. Wang, C.-Y. Chan. Formulation of Deep Reinforcement Learning Architecture Toward Autonomous Driving for On-Ramp Merge, IEEE ITSC, 2017.

- [6] CARLA, https://github.com/carla-simulator/carla

| Principal Investigators | Researchers | Themes |
|---|---|---|
| Ching-Yao Chan, Stella Yu | Sascha Hornauer | Autonomous Driving, Machine Learning, System Identification, Adaptive Control |