Trust-Region Based Robustness of Neural Networks in the Face of Adversarial Attacks

ABOUT THE PROJECT

At a glance

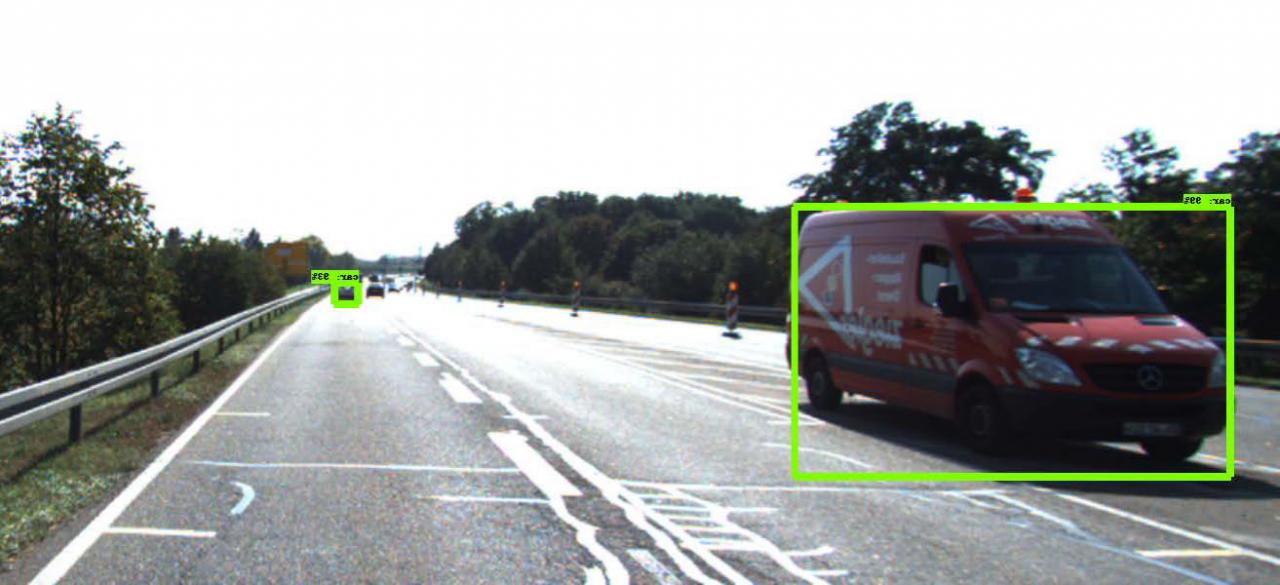

Deep neural networks are highly vulnerable to adversarial perturbations. Despite significant effort on defense mechanisms, this remains a major problem in applications of neural networks. The issue is critical for autonomous driving, where, for example, an adversary can alter a traffic sign so that an autonomous agent misinterprets it. A promising line of research is to incorporate robustness during the training phase. However, this can add significant overhead to the computational cost of training. A naive solution is to reduce this cost by using crude approximations to measure the robustness of the network, but this approach has proved ineffective. In this study we aim to design a fast and scalable framework for robust training of neural networks. A very promising recent work is an in-house trust-region-based optimization method, which is up to $37\times$ faster than existing methods and yet more effective in terms of adversarial perturbation norm. It has shown promising results on various image classification problems. Our first goal is to extend this framework to object detection and segmentation problems, for which there is very little work on training-based defense methods. Our second goal is to provide a systematic analysis of the trade-offs between model accuracy and robustness for these applications using the trust-region-based approach.

| Principal investigators | Researchers | Themes |
|---|---|---|
| Michael Mahoney | Amir Gholami | adversarial attack, adversarial training, trust region |