Uncertainty-Aware Meta-Learning and Safe Adaptation

ABOUT THE PROJECT

At a Glance

Autonomous systems that operate reliably in open-world settings, such as autonomous vehicles, must contend with previously unseen and unfamiliar circumstances. This presents a major challenge: while current machine learning methods can generalize effectively to new situations that resemble those seen during training, unexpected changes in the environment, system dynamics, or surroundings may result in catastrophic failure. A grand challenge for current machine learning methods is to enable not only generalizable learning from huge datasets, but also efficient, fast, and reliable adaptation to changes in the task. A promising approach to enable this is meta-learning: algorithms that learn to learn, utilizing past experience to acquire fast learning procedures that can adapt quickly to changes in the task setting. However, meta-learning algorithms are not immune to the effects of distributional shift either, and standard meta-learning methods can adapt efficiently only to those settings that resemble the meta-training distribution. The goal of this project is to develop meta-learning methods that incorporate explicit modeling of epistemic uncertainty to overcome this limitation. The desired result is a meta-learning method that learns to estimate uncertainty for a new task and to adapt in an uncertainty-aware way. When the method encounters a situation that is far out of distribution, adaptation should increase the estimated uncertainty, so that the method is aware that the adapted model is a “best guess” under uncertain conditions, while familiar situations should result in low-uncertainty adaptation.

An uncertainty-aware meta-learning algorithm would learn how to both estimate uncertainty and adapt. An example application might be an autonomous vehicle that uses past data, collected from different vehicles and many different situations, to adapt to changes in the vehicle itself (e.g., malfunctioning sensors, changing dynamics), as well as in the surroundings (e.g., changing weather conditions, changing behavior of other vehicles). Upon experiencing unfamiliar or out-of-distribution events, the model, typically representing a control policy or predictive model, would adapt and simultaneously estimate the posterior uncertainty over the adapted model. Adapting to highly unfamiliar conditions would produce larger uncertainty estimates, which could then be incorporated into risk-sensitive planning (i.e., pessimistic or conservative planning), or else used to alert a safety or abort system when the level of uncertainty exceeds some desired threshold. Our initial evaluation will be conducted on simulated benchmark tasks and environments, such as CARLA.
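
As a rough illustration of how uncertainty estimates could feed into pessimistic planning and an abort threshold, the sketch below scores each candidate action by the mean plus a multiple of the standard deviation of its predicted cost, and signals an abort when the spread for the chosen action is too large. The function names, the mean-plus-standard-deviation criterion, the `risk_weight` and `abort_threshold` parameters, and the example numbers are all assumptions made for illustration; the project description does not specify a particular planner.

```python
import statistics


def pessimistic_cost(cost_samples, risk_weight=1.0):
    """Mean cost plus risk_weight standard deviations (a conservative score)."""
    mean = statistics.mean(cost_samples)
    std = statistics.pstdev(cost_samples)
    return mean + risk_weight * std


def choose_action(candidate_costs, risk_weight=1.0, abort_threshold=2.0):
    """Pick the action with the lowest pessimistic cost, or abort.

    candidate_costs maps an action name to a list of cost predictions,
    e.g. one prediction per sample from a posterior over model parameters
    (hypothetical setup). Returns (action_or_"abort", spread).
    """
    best_action = min(
        candidate_costs,
        key=lambda a: pessimistic_cost(candidate_costs[a], risk_weight),
    )
    spread = statistics.pstdev(candidate_costs[best_action])
    if spread > abort_threshold:
        # Uncertainty exceeds the desired threshold: hand over to a safety system.
        return "abort", spread
    return best_action, spread


# Illustrative numbers only: "overtake" is cheaper on some samples but far less certain.
costs = {"keep_lane": [1.0, 1.2, 0.8], "overtake": [0.2, 3.0, 0.1]}
decision, spread = choose_action(costs)

# A single available action whose predicted cost is highly uncertain.
uncertain_decision, _ = choose_action({"keep_lane": [0.0, 10.0]})
```

In this toy setting the planner prefers the lower-variance action, and it returns the abort signal when even the best action has a cost spread above the threshold.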

We will develop our uncertainty-aware meta-learning algorithm on the basis of model-agnostic meta-learning (MAML) [1], a framework for meta-learning developed in our lab that can enable any deep neural network model to be turned into a meta-learned model by meta-training initial model parameters that can be adapted quickly with gradient descent. By extending MAML to accommodate Bayesian neural networks, which model a distribution over model parameters, we will incorporate epistemic uncertainty into the model. A critical challenge in devising an effective training algorithm for such a model will be to properly handle the change in uncertainty due to adaptation. While a conventional model should have lower uncertainty after adapting to unfamiliar data, our meta-trained models should have higher uncertainty when adapted to unfamiliar data, since the model should recognize that it has not learned how to learn from such data before. We plan to implement this process by deriving a relationship between the meta-learned parameters and a prior over the model weights. Updates that move the weights further from this prior should result in models with greater uncertainty. We will develop a complete theoretical framework for such uncertainty-aware model updates, building on our recent work on Bayesian meta-learning, which effectively combines meta-learning with variational Bayes, but without properly accounting for epistemic uncertainty for out-of-distribution tasks [2, 3]. We will evaluate our approach by instantiating it as uncertainty-aware meta-reinforcement learning (meta-RL), as well as uncertainty-aware meta-learning of predictive models. The meta-RL methods will be evaluated based on their ability to safely adapt meta-trained policies to changing dynamics and environments, and meta-learned predictive models will be evaluated based on how well they can be used to perform risk-sensitive planning after adaptation (e.g., an autonomous vehicle planning to avoid unfamiliar roadside construction after adapting the model to the out-of-distribution observations).
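
To make the idea of distance from the prior concrete, the following minimal sketch runs a MAML-style inner loop (a few gradient steps from a meta-learned initialization) on a one-parameter linear model, then reports how far the adapted weight has moved from that initialization in units of a prior standard deviation. This is only an illustration under stated assumptions: the scalar model, the Gaussian prior centered at the initialization, the `prior_distance` proxy, and all numeric values are hypothetical, and the outer meta-training loop is omitted. The project description does not specify the functional form that relates the weight update to uncertainty.

```python
def adapt(w_init, data, lr=0.1, steps=5):
    """Inner-loop adaptation: gradient descent on mean squared error for y = w * x."""
    w = w_init
    for _ in range(steps):
        # d/dw of mean((w * x - y)^2) over the task's data
        grad = sum(2.0 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def prior_distance(w_adapted, w_init, prior_std=0.5):
    """Hypothetical uncertainty proxy: distance of the adapted weight from the
    meta-learned initialization, measured in prior standard deviations
    (assumes a Gaussian prior N(w_init, prior_std**2))."""
    return abs(w_adapted - w_init) / prior_std


w_meta = 1.0  # stand-in for a meta-learned initialization (assumed value)

# A task close to the initialization (slope 1.1) and one far from it (slope 3.0).
familiar_task = [(1.0, 1.1), (2.0, 2.2)]
unfamiliar_task = [(1.0, 3.0), (2.0, 6.0)]

familiar_score = prior_distance(adapt(w_meta, familiar_task), w_meta)
unfamiliar_score = prior_distance(adapt(w_meta, unfamiliar_task), w_meta)
```

Adapting to the unfamiliar task moves the weight much further from the initialization, so the proxy is larger there than for the familiar task, which matches the qualitative behavior described above.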

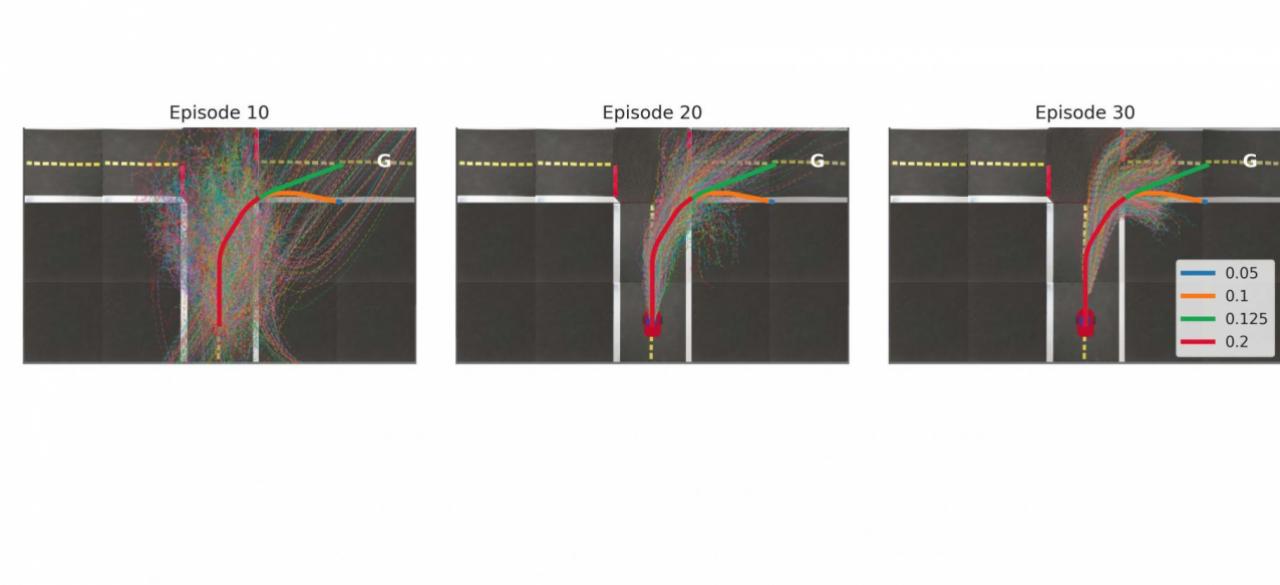

In our current research, we have investigated Bayesian methods for meta-learning outside of the setting of uncertainty-aware decision making [2, 3]. We have also studied uncertainty-aware decision making for model-based RL [4] and, more recently, uncertainty-aware adaptation with risk-sensitive control without meta-learning, in a simple driving scenario based on the Duckietown simulator [5] (see also the figure above). These preliminary results suggest that uncertainty-aware meta-learning for safe adaptation should be feasible, though a number of major research challenges remain.

References. [1] Finn, Abbeel, Levine. Model-Agnostic Meta-Learning. ICML 2017. [2] Grant, Finn, Levine, Darrell, Griffiths. Recasting Gradient-Based Meta-Learning as Hierarchical Bayes. ICLR 2018. [3] Finn*, Xu*, Levine. Probabilistic Model-Agnostic Meta-Learning. NIPS 2018. [4] Chua, Calandra, McAllister, Levine. Deep Reinforcement Learning in a Handful of Trials. NIPS 2018. [5] Zhang, Cheung, Finn, Levine, Jayaraman. Hope For The Best but Prepare For The Worst. NIPS 2019 Deep RL Workshop.

| PRINCIPAL INVESTIGATOR | RESEARCHERS | THEMES |
|---|---|---|
| Sergey Levine | | meta-learning, reinforcement learning, uncertainty estimation, robustness |