Using High-Level Structure and Context for Object Recognition

ABOUT THIS PROJECT

At a glance

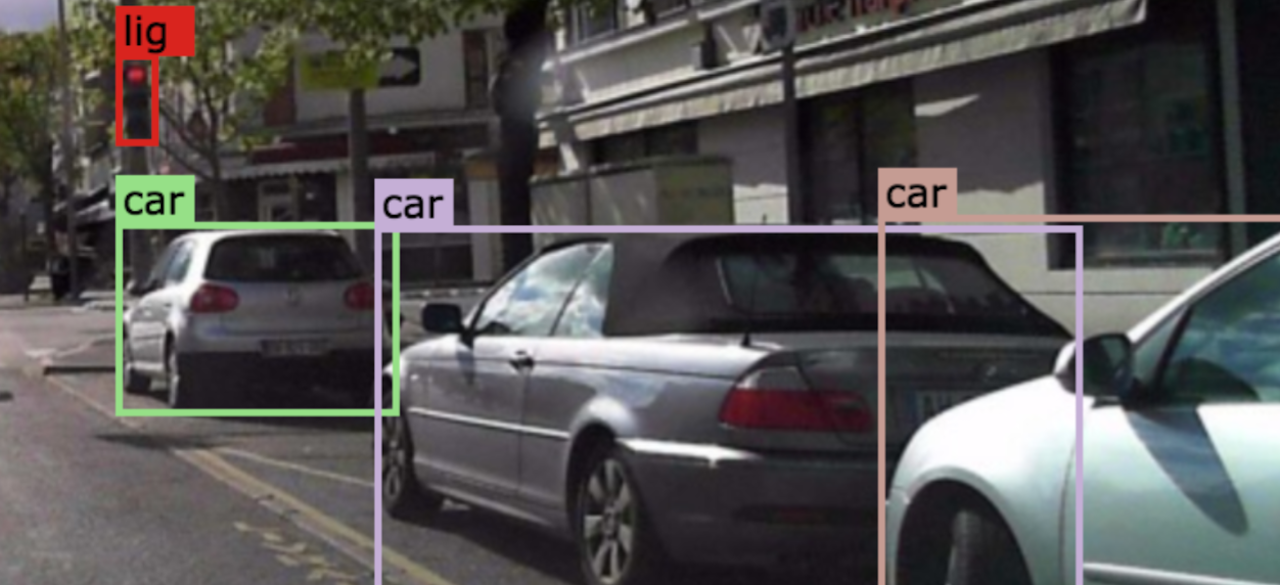

Many of the challenges faced by computer vision systems for ADAS/AV can be traced to the fact that state-of-the-art CV pipelines process one image at a time or, at best, treat the frames of a video stream as an isolated collection. Further, current state-of-the-art methods focus on modeling individual objects within individual images. They discard the semantic and visual relationships present within each image, across the image collection, and in external data sources. This places heavy demands on per-image, per-object supervised learning algorithms, which in turn require vast amounts of highly diverse, manually labeled data to produce acceptable results. For ADAS/AV systems, that demand is both difficult to meet and constantly changing.

Rather than continuing to chase an ever-growing repository of labeled driving data to cover the long tail of possible scenarios, we believe the key to addressing these problems is incorporating high-level structure and context into current state-of-the-art CV pipelines. In the deep learning era, this line of research has fragmented into several disjoint subfields: visual grammars that learn structured representations of images [Bordes, 2017], scene graphs that learn pairwise relationships between objects [Johnson, 2015], CNNs with context-specific layers [Bell, 2016], and visual relationship associations [Hu, 2018]. Our research aims to both unify these directions and apply them to ADAS/AV systems, with three primary goals: improved object recognition, few-shot learning, and model interpretability.

| principal investigators | researchers | themes |
|---|---|---|
| | | object recognition, few-shot learning, model interpretability, domain adaptation, domain randomization, self-training, unsupervised labeling |